In today’s environment, you’re aware that data security and governance are critical to preventing data breaches that can expose your company to fines, lawsuits, and possibly closure. This kind of data security is especially important in cloud data platforms like Snowflake, where large amounts of sensitive data can be consolidated into a single data pool. One effective way to outsmart bad actors who attempt to compromise your data is a “switcheroo” tactic known as data tokenization. If you’re unfamiliar with it, think of data tokenization as the valet service offered at an upscale restaurant or formal event. When you arrive, the attendant takes your car keys and hands you a valet ticket in exchange. If someone were to steal your valet ticket, it would be useless to them because it isn’t the actual key to your car. The ticket only serves as a substitute for your keys and as a ‘marker’ that helps the valet attendant (who has your keys) identify which car to return to you.

This blog provides a high-level explanation of what Snowflake tokenization is, the benefits it offers, how Snowflake tokenization can be done manually, and the steps to follow if you use ALTR to automate the process. There are other ways to secure data, including Snowflake data encryption, but Snowflake tokenization with ALTR provides many benefits that encryption cannot.

What is Data Tokenization and Why is It Important?

Data tokenization is a process in which an element of sensitive data (for example, a Social Security number) is replaced by a value called a token. This adds an extra layer of security for your sensitive data. Here’s the simplest way to describe Snowflake tokenization: your data is replaced with a non-sensitive ‘substitute’ that has no value of its own and serves only as a marker that maps back to your sensitive data when someone queries it. The substitute takes the form of a random character string, which is the ‘token’. For example, your customer’s bank account number would be replaced with a token, so that staff members who see that column cannot use it to make purchases. As a result, this added safeguard helps your company reduce the impact of data breaches and remain compliant with data governance laws.
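To make the valet-ticket idea concrete, here is a minimal Python sketch of vaulted tokenization, assuming a simple in-memory dictionary plays the role of the "vault". The `TokenVault` class and its methods are hypothetical names for illustration; this is not ALTR’s or Snowflake’s implementation.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical; a real vault needs durable,
    encrypted, access-controlled storage)."""

    def __init__(self) -> None:
        # Maps each token back to the sensitive value it stands in for.
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so nothing about it is derived from the value.
        token = secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only a lookup in the vault can turn a token back into the value.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("123-45-6789")
print(token)                    # a random 32-character hex string
print(vault.detokenize(token))  # 123-45-6789
```

Like the valet ticket, the token on its own reveals nothing; only whoever holds the vault can exchange it for the original value.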

Benefits of Data Tokenization

- Maximized security: Data tokenization substitutes your original data with a randomly generated token. If your tokens are compromised, they are useless to bad actors because they cannot be reversed to reveal the original values without access to the token vault. This helps maintain your customers’ trust that their information is protected.

- Highly operational: Deterministic tokenization lets people perform accurate analytics on the data in the cloud, because the same input always produces the same token. Deterministic tokens let you perform SQL operations (such as joins or WHERE clauses) without detokenizing the data. As a result, tokenization protects consumer privacy without interrupting analyst operations.

- Scalability and lower overhead costs: ALTR lowers your overhead costs by removing the need for your company to build and scale its own tokenization infrastructure as compliance requirements change. We handle this for you through our highly scalable Vaulted Tokenization solution, which works with Snowflake when you need to tokenize or detokenize datasets containing millions or billions of values at a time.
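The determinism point can be illustrated with a short Python sketch, assuming a hypothetical `DeterministicVault` that reuses one token per distinct value. Two tokenized "tables" are joined and aggregated on the token alone, which mirrors a SQL join on a tokenized column; it is an illustration, not ALTR’s tokenization scheme.

```python
import secrets


class DeterministicVault:
    """Hypothetical vault that always returns the same token for the same value."""

    def __init__(self) -> None:
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the stored token so equal inputs always yield equal tokens.
        if value not in self._value_to_token:
            self._value_to_token[value] = secrets.token_hex(16)
        return self._value_to_token[value]


vault = DeterministicVault()

# Two "tables" that share a sensitive key, already tokenized at rest.
customers = [
    {"ssn": vault.tokenize("123-45-6789"), "state": "TX"},
    {"ssn": vault.tokenize("987-65-4321"), "state": "CA"},
]
claims = [
    {"ssn": vault.tokenize("123-45-6789"), "amount": 250},
    {"ssn": vault.tokenize("123-45-6789"), "amount": 100},
    {"ssn": vault.tokenize("987-65-4321"), "amount": 75},
]

# Roughly: SELECT c.state, SUM(cl.amount) FROM customers c
#          JOIN claims cl ON c.ssn = cl.ssn GROUP BY c.state
state_by_token = {c["ssn"]: c["state"] for c in customers}
totals: dict[str, int] = {}
for claim in claims:
    state = state_by_token[claim["ssn"]]  # join on the token, never the raw SSN
    totals[state] = totals.get(state, 0) + claim["amount"]

print(totals)  # {'TX': 350, 'CA': 75}
```

The analyst gets correct per-state totals without ever seeing an SSN, because equal SSNs produced equal tokens.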

Why Data Tokenization is Better than Encryption for Many Use Cases

Data tokenization and data encryption are sometimes used interchangeably; however, while both technologies help secure data, they are two different approaches to consider as part of your data security strategy. Tokenization replaces your sensitive data with a ‘token’ that cannot be mathematically reversed, whereas encryption uses an algorithm and a key to convert your data into a format that is unreadable to anyone who does not hold the key.

A benefit of data tokenization is that it can be more secure than encryption, because a token represents a value without being a function or derivative of that value. Tokenization can also be simpler to manage, because there are no encryption keys to oversee. However, when deciding between tokenization and encryption, consider your specific business needs. Because of the benefits tokenization offers, businesses in many industries use it for a wide array of purposes. Examples include processing credit and debit card payments in commerce transactions, selling and tracking assets such as digital art recorded on a blockchain platform, and protecting personal health information.

How Snowflake Tokenization Works if You DIY

If you’re wondering how to tokenize your data manually inside of Snowflake, then the answer is, “You can’t.”

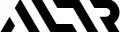

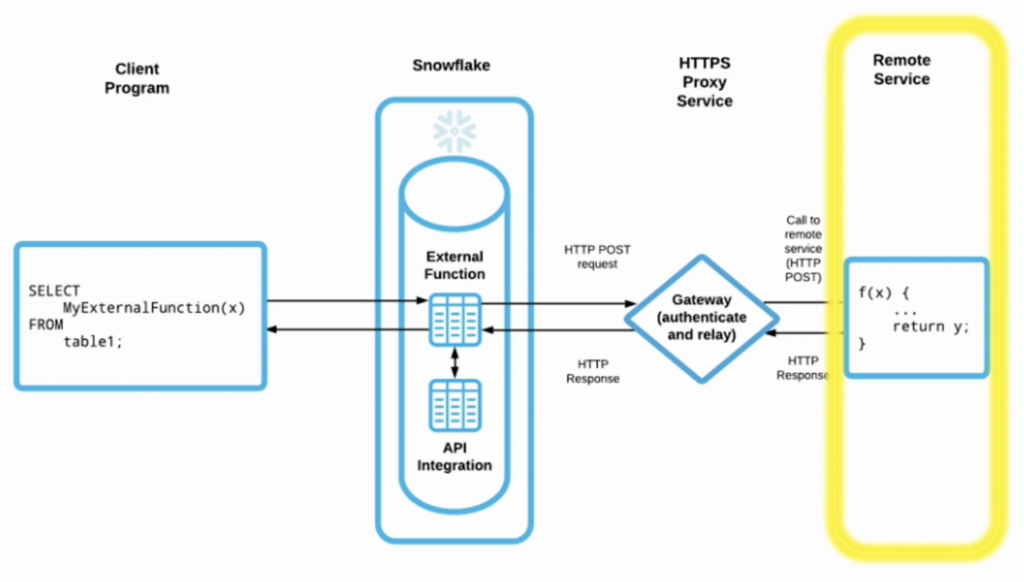

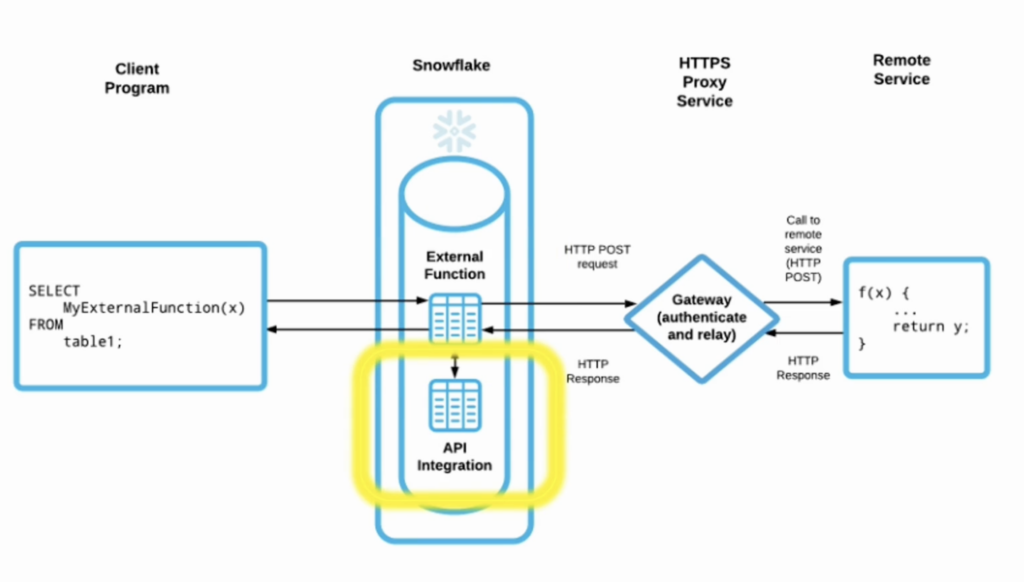

Snowflake does not have native, built-in tokenization capabilities, but it can support custom tokenization through its external function and column-level security features, as long as you have the resources to write the code needed to implement tokenization, storage, and detokenization.

Let’s take a look at what that would entail:

Implementing a remote Snowflake tokenization service

1) First, you will need to write and deploy a remote service that can handle tokenization, storage, and detokenization.

This service will need to be implemented in Amazon Web Services, Microsoft Azure, or Google Cloud Platform, depending on which of those cloud providers you chose for your Snowflake instance.

This step requires significant effort: not just programming expertise but also expertise in using the storage, compute, and networking capabilities of your cloud provider.

Also, Snowflake expects data passed to and received from external functions to be in a specific format. Thus, you will need to invest time in understanding this format and in architecting a solution that efficiently exchanges large amounts of data.
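The request and response contract in step 1 can be sketched in Python. Snowflake sends each batch of rows as a JSON object whose `data` field is a list of `[row_number, argument, ...]` arrays, and it expects a `data` list of `[row_number, result]` pairs in return, one per input row. The handler names (`handle_tokenize`, `handle_detokenize`) and the in-memory dictionaries are assumptions for illustration; deploying this behind your cloud provider’s functions service and backing it with durable storage is not shown.

```python
import json
import secrets

# Hypothetical in-memory storage; a production service would use a durable,
# encrypted datastore in your cloud provider.
_value_to_token: dict[str, str] = {}
_token_to_value: dict[str, str] = {}


def _tokenize(value):
    if value is None:  # pass SQL NULLs through unchanged
        return None
    if value not in _value_to_token:
        token = secrets.token_hex(16)
        _value_to_token[value] = token
        _token_to_value[token] = value
    return _value_to_token[value]


def _detokenize(token):
    return None if token is None else _token_to_value[token]


def _process_batch(body: str, fn) -> str:
    # Snowflake sends {"data": [[row_number, arg1, ...], ...]} and expects
    # {"data": [[row_number, result], ...]} with one output row per input row.
    rows = json.loads(body)["data"]
    return json.dumps({"data": [[row[0], fn(row[1])] for row in rows]})


def handle_tokenize(body: str) -> str:
    return _process_batch(body, _tokenize)


def handle_detokenize(body: str) -> str:
    return _process_batch(body, _detokenize)


request = json.dumps({"data": [[0, "123-45-6789"], [1, None], [2, "123-45-6789"]]})
response = json.loads(handle_tokenize(request))
print(response["data"][1])  # [1, None]
```

Keeping the row numbers intact matters: Snowflake uses them to match each result to its input row.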

2) Next you will need to configure a gateway endpoint in your cloud provider to receive the HTTP requests and responses required by Snowflake for external functions.

This layer is also where you implement authentication to ensure that only valid requests from your Snowflake instance are processed.

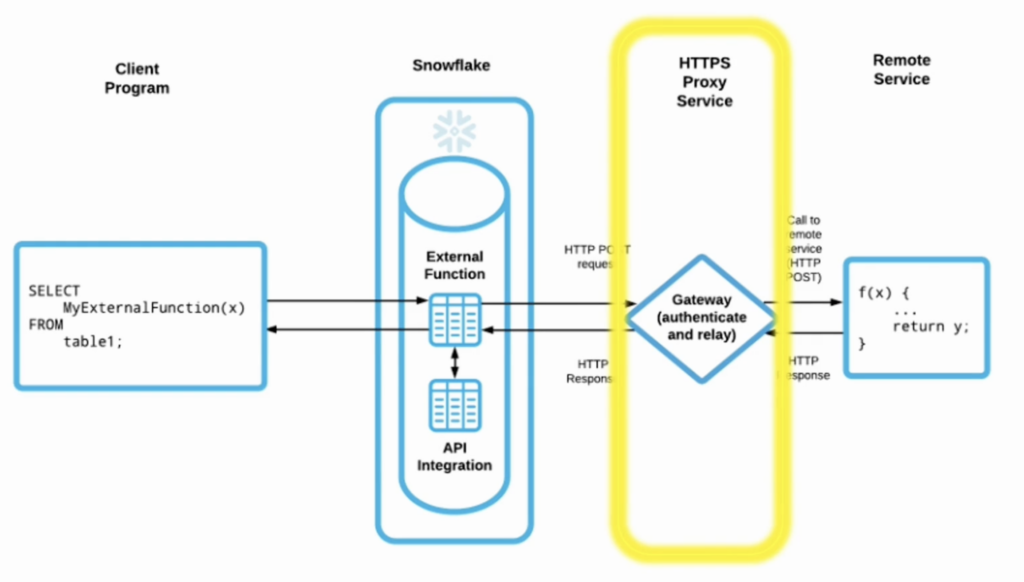

3) After implementing your external tokenization function, you will need to create two objects in your Snowflake instance.

One is a user-defined external function, which will be called from within your SQL statements to tokenize or detokenize data.

The other is an integration object that holds the credentials allowing your Snowflake instance to connect to and call the external function’s implementation in your cloud provider’s environment. Both objects can be created using SQL.

Fig. 3. User-defined external function
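The SQL for step 3 might look like the following, shown as a small Python helper that renders the statements. They follow Snowflake’s `CREATE API INTEGRATION` and `CREATE EXTERNAL FUNCTION` syntax for Amazon API Gateway, and they create separate tokenize and detokenize functions. The helper name `render_external_function_ddl`, the integration name, the IAM role ARN, and the URL are hypothetical placeholders, not values from any real deployment.

```python
def render_external_function_ddl(integration: str, role_arn: str, base_url: str) -> list[str]:
    """Render illustrative Snowflake DDL for a tokenizer behind Amazon API Gateway.

    The integration name, IAM role ARN, and URL are placeholders, not real resources.
    """
    statements = [
        # The API integration holds the credentials Snowflake uses to call the gateway.
        f"CREATE OR REPLACE API INTEGRATION {integration}\n"
        "  API_PROVIDER = aws_api_gateway\n"
        f"  API_AWS_ROLE_ARN = '{role_arn}'\n"
        f"  API_ALLOWED_PREFIXES = ('{base_url}')\n"
        "  ENABLED = TRUE;"
    ]
    for name in ("tokenize", "detokenize"):
        # Each external function is callable from SQL and routes to one endpoint.
        statements.append(
            f"CREATE OR REPLACE EXTERNAL FUNCTION {name}(value VARCHAR)\n"
            "  RETURNS VARCHAR\n"
            f"  API_INTEGRATION = {integration}\n"
            f"  AS '{base_url}/{name}';"
        )
    return statements


for stmt in render_external_function_ddl(
    integration="tokenization_api",
    role_arn="arn:aws:iam::123456789012:role/snowflake-tokenizer",
    base_url="https://example.execute-api.us-east-1.amazonaws.com/prod",
):
    print(stmt, end="\n\n")
```

Running the rendered statements requires a Snowflake role allowed to create integrations (for example, ACCOUNTADMIN), and the AWS IAM role must separately be configured to trust Snowflake.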

4) After these three steps, you will be able to call your tokenize and detokenize functions from your Snowflake client.

As you can see, that’s a lot of extra time and effort. Let’s see how you can avoid it by using ALTR’s tokenization solution.

How Snowflake Tokenization Works Using ALTR

NOTE: A tokenization API user is required to access our Vaulted Tokenization. Enterprise Plus customers can create tokenization API users on the API tab of the Applications page.

Let’s compare this with using ALTR. If you use ALTR and Snowflake together, tokenization is much easier because ALTR has done all the implementation work for you.

To use tokenization in ALTR, you only need to create the Snowflake integration object that points to our service and define the external functions in your database. We provide a SQL script that does this work for you with a single SQL command.

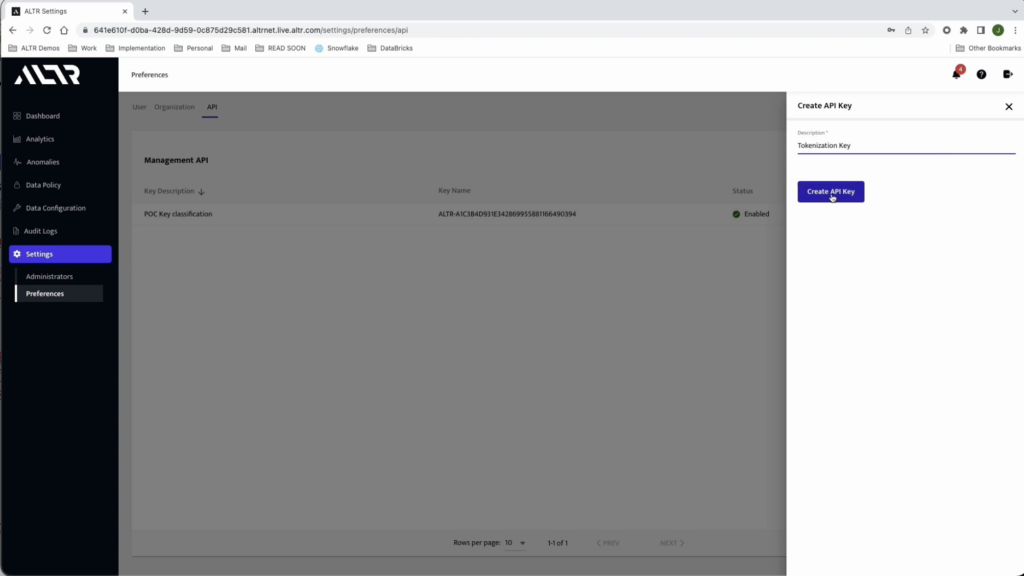

You will need to generate an API key and secret from the ALTR portal.

This key and secret are inputs to the SQL script we provide. Just run the script to create a Snowflake integration object that represents a connection to ALTR’s external tokenization and detokenization implementation in the cloud. The script also creates two external functions that use this service: one to tokenize data and another to detokenize it.

Fig. 5. Create a tokenization key

Now we can look at tokenization in action.

A best practice is to have sensitive values tokenized at rest in the database, preferably before they land in Snowflake. ALTR supports this through a library of open-source integrations with data movement tools such as Matillion, BigID, and others.

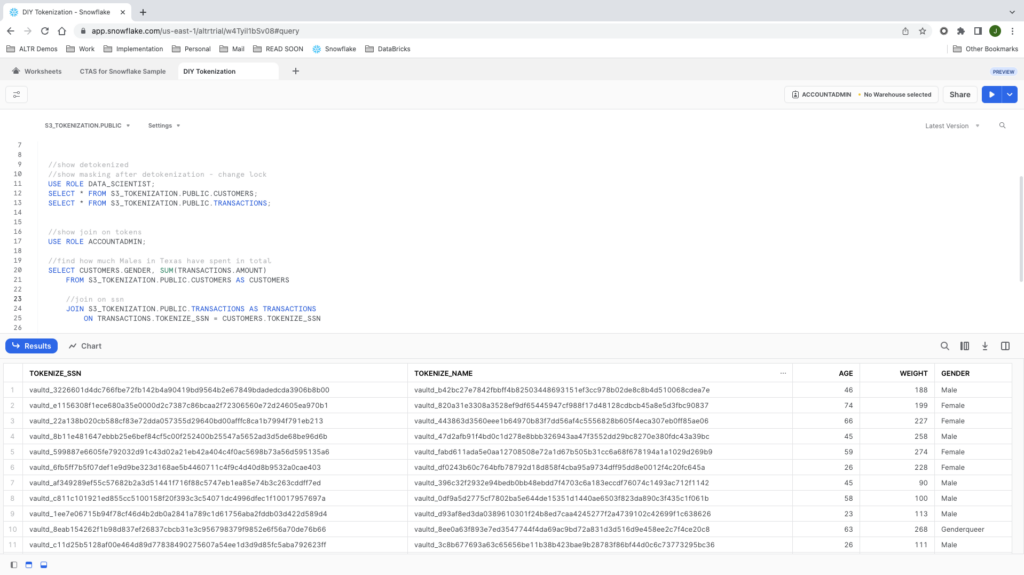

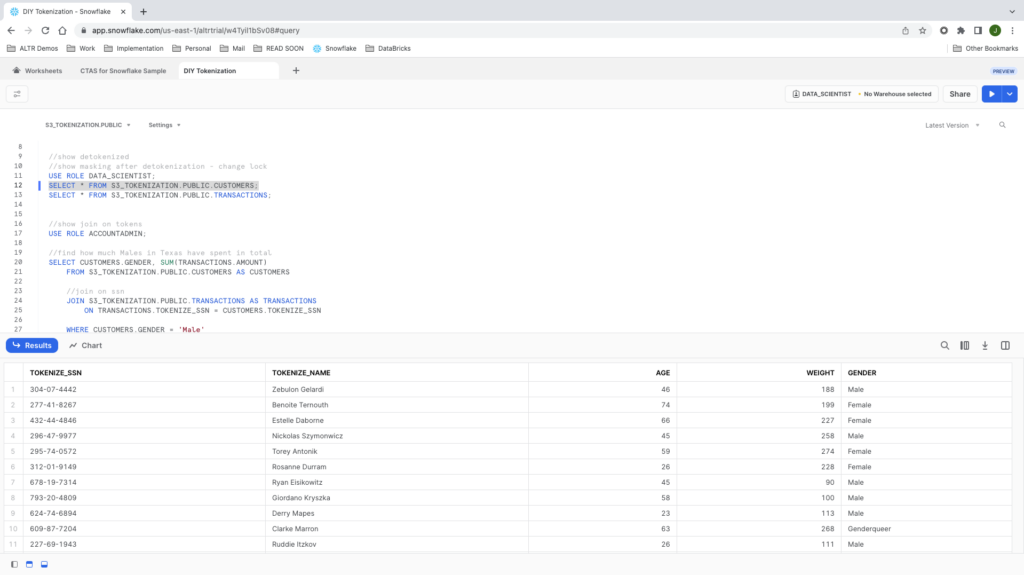

If we run this query as an account admin, we can see that two columns, NAME and SSN, are tokenized. The tokens you see here are the values stored on disk within Snowflake in the cloud.

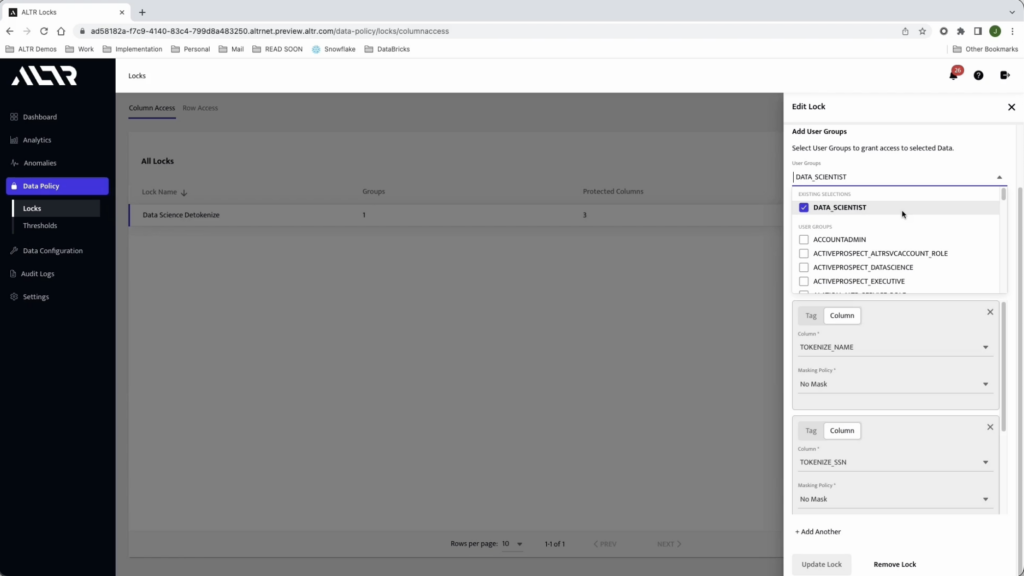

When it comes to detokenizing, we want to detokenize a value only “on the fly”, when the data is queried, and only for roles that are allowed to see the values.

With ALTR tokenization, we do this for you automatically.

If we run this next query with the DATA_SCIENTIST role, we see that the values are detokenized: the original sensitive values appear instead of tokens. This is because, in the ALTR portal, we have allowed the DATA_SCIENTIST role to see these values.
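The policy described above, where values stay tokenized on disk and are detokenized per query only for permitted roles, can be sketched in Python. This shows the logic only and is not how ALTR implements it; the `RoleAwareVault` class and its `read` method are hypothetical. In Snowflake, a comparable check could run inside a column-level masking policy that calls a detokenize external function.

```python
import secrets


class RoleAwareVault:
    """Hypothetical vault that detokenizes at query time for authorized roles only."""

    def __init__(self, allowed_roles: set[str]) -> None:
        self._allowed_roles = allowed_roles
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def read(self, token: str, role: str) -> str:
        # The stored value is always the token; detokenize on the fly only if allowed.
        if role in self._allowed_roles:
            return self._token_to_value[token]
        return token


vault = RoleAwareVault(allowed_roles={"DATA_SCIENTIST"})
stored = vault.tokenize("123-45-6789")
print(vault.read(stored, "DATA_SCIENTIST"))          # 123-45-6789
print(vault.read(stored, "ACCOUNTADMIN") == stored)  # True
```

This matches the two queries above: the account admin sees tokens, while the DATA_SCIENTIST role sees the original values.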

If you use ALTR for tokenization you do not need to write any code or invest in developing a solution.

We can ensure your data is tokenized before it lands in Snowflake with our open-source integrations for your ETL/ELT pipelines, and we can automatically restrict detokenization to the users you authorize through the ALTR portal.

ALTR Snowflake Tokenization Use Cases

Here are a couple of use case examples where ALTR’s automated data tokenization capability can benefit your business as it scales with Snowflake usage.

Use Case 1. Your new research company needs to conduct a clinical trial.

A pharmaceutical company wants your new research company to conduct clinical trials on its behalf. The personally identifiable information (PII) and research data from clinical study participants must be secured to stay compliant with HIPAA laws and regulations. ALTR’s data tokenization is a strong fit as part of your compliant data governance strategy.

Use Case 2. Your new retail store needs to accept credit cards as a payment method.

Your newly launched store accepts credit cards as one of its payment methods. To remain compliant with Payment Card Industry (PCI) standards, you need to ensure that your customers’ credit card information is handled securely. Data tokenization can help you reduce administrative overhead costs and satisfy PCI standards while storing the data in Snowflake.

Automate Snowflake Tokenization with ALTR

As your business collects and stores more sensitive data in Snowflake, it is critical to include Snowflake data tokenization in your data governance strategy. ALTR supports this with Vaulted Tokenization, BYOK (bring your own key) for Vaulted Tokenization, and other capabilities.