“Today is the day!” you exclaim to yourself as you settle into your desk on Monday morning. After months of meticulous planning, the migration from Teradata to Snowflake begins now. You have been through all the back-and-forth with leadership on why this migration is needed: Teradata is expensive, Teradata is not agile, Snowflake creates a single source of data truth, and Snowflake is instantly on and scales when you need it. It’s perfect for you and your business.

As you work through your meticulously planned migration checklist, you’re using cutting-edge tools like dbt, Okta, and Sigma. These tools are not just cool, they’re the future. You’re moving your database structure, loading the initial non-sensitive data, repointing your ETL pipelines, and witnessing the power of modern technology in action. Everything is working like a charm.

A few weeks or months of testing go by. Your downstream data consumers are still using Teradata, but they are starting to give a thumbs-up to the Snowflake workloads you have already migrated. Things are going well. You have not thought about CPU or disk space on the Teradata box in a while, which was the point of the migration. You finally get word from all stakeholders that this trial migration was a success! You call your Snowflake team and tell them to back up the truck: you are clear to move the remaining workloads. Life is good. But then comes a knock at the door.

It’s Pat from Security & Risk. You know Pat well and enjoy Pat’s company, but you also do as much as possible to avoid Pat because you are in data and, well, we all know the feeling. Pat tells you, “Heard we are finally getting off Teradata; that’s awesome! Do you have a plan for the PII and SSNs in that one Teradata database, the ones we have to protect with Protegrity for audit and compliance reasons?” You nod. “I do, but I couldn’t do it without your expertise. I’ve been reading the Snowflake documentation, and I’m in the process of writing a few small AWS Lambda functions to interface with Protegrity. Your input is crucial to this process.” Pat smiles, gives you a less-than-reassuring pat on the back, and walks out. Phew, no more Pat.

Four weeks later, you’re utterly exhausted. You’ve logged over 50 hours in Snowflake with fellow data engineers and tapped into the expertise of a cloud ops team member who knows Lambda inside out. You have escalated to Snowflake support, but your external function calls from Snowflake to AWS keep timing out. AWS support is unable to help. Now you are hitting AWS Lambda memory limits. Suddenly, the internal network team does not want to keep the ports open to reach Protegrity from AWS, and you need to use a PrivateLink connection with additional security controls. You are behind on the Teradata migration. There is no end in sight to the scaling problems. Shoot, this is not working.

Don’t worry, you are not alone. This is the same experience felt by hundreds of Snowflake customers, and it stems from the same problem: everything about your Snowflake migration was planned for the new architecture of Snowflake except for one thing: data protection. You followed all the blogs and user guides, and your stateless data pipeline feeding Snowflake with a Kafka bus is perfect. Sigma is running without limits. The team is happy, but they want that customer data now. Except, you can’t use it until you solve this security problem.

Snowflake, and OLAP workloads generally, turned data protection on its head. OLTP workloads are easy to secure. You know the access points and the typical pattern of user behavior, so you can easily plan for scale and uptime. OLAP is wildly unpredictable. Large queries, small queries, ten rows, 10M rows: it’s a nightmare for security. There is only one path forward: you must get purpose-built data protection for Snowflake.

You need a data protection solution that matches Snowflake’s architecture, just like when you matched Protegrity to Teradata. If Snowflake is going to be elastic, your data protection needs to be elastic. If Snowflake is going to be accessed by many downstream consumers, you need to be able to integrate data protection into the access policies in Snowflake. Who is going to do that work? Who will maintain this code? How can you control costs? The answer to all those questions is ALTR.

ALTR’s purpose-built native app for data protection is an easy solution for Snowflake. You can install it on your own. You can use your Snowflake committed dollars to pay for the service. The scale of ALTR’s data protection is controlled by Snowflake and nothing else. It’s the easiest way to get back on track. Call your Snowflake team and ask them about ALTR. It will feel good walking back into Pat’s office with your head held high and your data migration back on track.

Whether your team currently has Protegrity or Voltage, you will face the same problems. Do not waste your time trying to get these solutions to scale. Just call ALTR.

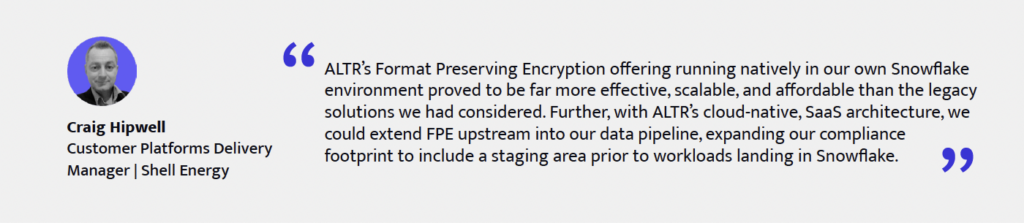

Don’t just take my word for it…