If you haven’t noticed the wave of Generative AI sweeping across the enterprise hardware and software world, it certainly would have hit you within 5 minutes of attending Big Data London, one of the UK’s leading data, analytics, and AI events. Having attended last year’s show, I can confidently say AI wasn’t nearly as dominant. But now? It’s everywhere, transforming not just this event but countless others. AI has officially taken over!

As a data-security-focused person, I find all the buzz both exciting and terrifying. I’m excited because it feels like we’re on the verge of a seismic shift in technology, on par with the rise of the web or the cloud, driven by GenAI. And I get to witness it firsthand! But it is terrifying to see all the applications, solution consultants, database vendors and others selling happy GenAI stories to customers. I could scream into the loud buzz of the show floor, “We have seen this movie before! Don’t let the development of GenAI applications outpace the critical need for data security!” I’m thinking about the rush to web, the rush to mobile, the rush to cloud. All of these previous shifts suffered from the same thing: security is boring and we don’t want to do it. What definitely wasn’t boring was using a groundbreaking mobile app from 1800flowers.com to buy flowers. That was cool! Let’s have more of that! Who cares about security, right? That can wait…

Cyber security, and data security in particular, have had the task of keeping up with the excitement of new applications for decades. The ALTR engineering office is in beautiful Melbourne, FL, just a few hours away from Disney. When I see a young mother or father with a concerned look racing after their young child who couldn’t care less that they are about to get run over by a popcorn stand, I think, “Application users are the kids, security people are the parents, and GenAI is whichever Disney character the kid can’t wait to hug.” It’s cute, but dangerous. This is what is happening with GenAI and security.

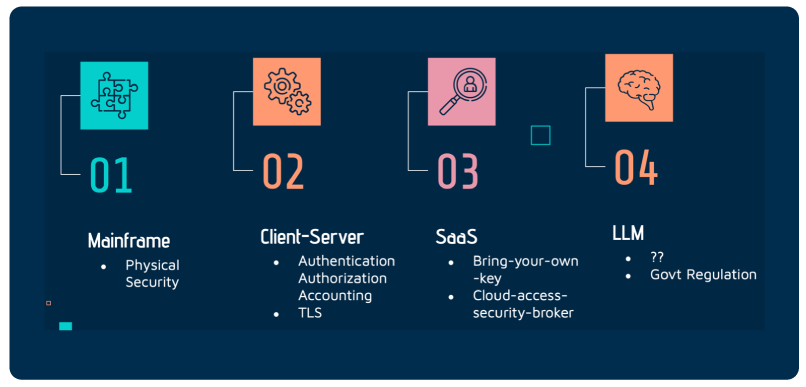

As applications have evolved so has data security. Below is an example of these application evolutions and how security has adapted to cover the new weaknesses of each evolution.

What is Making Generative AI Hard to Secure?

The simple answer is: we don’t fully know. It’s not just that we’re still figuring out how to secure GenAI (spoiler: we haven’t cracked that yet); it’s that we don’t even fully understand how these Large Language Models (LLMs) and GenAI systems truly operate. Even the developers behind these models can’t entirely explain their inner workings. How do you secure something you can’t fully comprehend? The reality is—you can’t.

So, what do we know?

We know two things:

1. Each evolution of applications and data products has been secured by building upon the principles of the previous generation. What has been working well needs to be hardened and expanded.

2. LLMs present two new and very hard problems to solve: data ownership and data access.

Let’s dive into the second part first. To get access to the hardware currently required to train and run LLMs, we must use cloud or shared resources such as ChatGPT or NVIDIA’s DGX Cloud. Until these models require less hardware or the hardware magically becomes more available, this truth will hold.

This mirrors the early days of the internet, when businesses wanted to send and receive sensitive information over shared internet lines. The internet was great for transmitting public or non-sensitive information, but how could banking and healthcare use public internet lines to send and receive sensitive information? Enter TLS. This is the same problem facing LLMs today.

How can a business (or even a person, for that matter) use a public and shared LLM/GenAI system without fear of data exposure? Well, it’s very challenging, and it is not a problem that a traditional data security provider can solve. Luckily, there are really smart people working on this solution, like the folks at Protopia.ai.

So, data ownership is being addressed much like how TLS solved the problem of private information flowing over public internet lines. And that’s a huge step forward. What about data access?

This one is a bit tougher. There are some schools of thought about prompt control and data classification within AI responses. But this feels a lot like CASB all over again, which didn’t exactly hit the mark for SaaS security. In my opinion, we’ll continue to face risks until these models can pinpoint exactly where their responses come from (essentially, identify the data sets they learned from) and also understand who is asking the questions. Only then can we prevent situations where an intern asks questions and gets answers that should only be accessible to the CEO.
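To make the intern-versus-CEO scenario concrete, here is a minimal sketch of filtering retrieved documents by the requester’s role before any text reaches a model. It only covers data fetched at query time, not what a model absorbed during training, which is exactly the part that remains unsolved. The `Document` class, the `ROLE_CLEARANCE` table, the sensitivity scale, and `filter_for_requester` are all hypothetical names invented for illustration, not part of any product or library.

```python
from dataclasses import dataclass

# Hypothetical clearance levels; a real deployment would likely
# pull these from an identity provider rather than a dict.
ROLE_CLEARANCE = {"intern": 1, "manager": 2, "ceo": 3}


@dataclass(frozen=True)
class Document:
    text: str
    sensitivity: int  # assumed scale: 1 = general, 3 = executive-only


def filter_for_requester(docs, role):
    """Return only documents whose sensitivity is within the role's clearance."""
    clearance = ROLE_CLEARANCE.get(role, 0)  # unknown roles see nothing
    return [d for d in docs if d.sensitivity <= clearance]


retrieved = [
    Document("Office holiday schedule", 1),
    Document("Draft acquisition terms", 3),
]

# The same retrieval result yields different prompt context per requester.
intern_context = filter_for_requester(retrieved, "intern")
ceo_context = filter_for_requester(retrieved, "ceo")
```

Defaulting unknown roles to a clearance of zero is a deliberate choice in this sketch: when the system cannot tell who is asking, it shares nothing.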

Going back to the first item on the list of what we know: we will need to build upon the solid data security foundations that got us to this point in the first place. It has become clear to me that for the next few years, Retrieval-Augmented Generation (RAG) will be how enterprises globally interact with LLMs and GenAI. While this is not a silver bullet, it’s the best shot businesses have to leverage the power of public models while keeping private information safe.

With the adoption of RAG techniques, the core data security pillars that have been bearing the load of a data lake or warehouse to date will need to be braced for extra load.

Data classification and discovery need to be cheap, fast, and accurate. Businesses must continuously ensure that any information unsuitable for RAG workloads hasn’t slipped into the database from which retrieval occurs. This constant vigilance is crucial to maintaining secure and compliant operations. This is the first step.
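As a rough illustration of that first step, the sketch below scans rows bound for a retrieval store and flags any that contain sensitive-looking values. The two regular expressions, the label names, and the `find_unsuitable` helper are assumptions made for this example; a production classifier would need far broader coverage and better accuracy than a pair of patterns.

```python
import re

# Illustrative patterns only (assumed for this example).
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def classify(text):
    """Return the sorted names of sensitive patterns found in the text."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )


def find_unsuitable(rows):
    """Map row id -> detected labels for rows that should not feed RAG."""
    flagged = {}
    for row_id, text in rows.items():
        labels = classify(text)
        if labels:
            flagged[row_id] = labels
    return flagged


rows = {
    1: "Quarterly roadmap for the analytics team",
    2: "Customer SSN 123-45-6789, contact jane@example.com",
}
flagged = find_unsuitable(rows)
```

Running a check like this on a schedule, rather than once at load time, is what turns classification into the continuous vigilance described above.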

The next step is to layer access control and data access monitoring such that the business can easily set the rules for which types of data are allowed to be used by the different models and use cases. Just as service accounts for BI tools need access control, so too do service accounts for the purposes of RAG. On top of these access controls, near-real-time data access logging must be present. As the RAG workloads access the data, these logs inform the business if any access has changed and allow it to easily comply with internal and external audits by proving that only approved data sets are used with public LLMs and GenAI models.
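One way to picture this layer is a policy table keyed by service account, with every decision written to a log that can later answer an auditor’s question. This is a sketch under stated assumptions: the account names, classification labels, the in-memory `ACCESS_LOG` list, and both functions are hypothetical stand-ins, not how any particular platform implements it.

```python
from datetime import datetime, timezone

# Hypothetical policy: which data classifications each service account may read.
POLICY = {
    "svc_rag_support_bot": {"public", "product_docs"},
    "svc_bi_dashboard": {"public", "product_docs", "finance"},
}

ACCESS_LOG = []  # stand-in for a near-real-time log sink


def request_access(account, dataset, classification):
    """Check policy, record the decision, and return whether access is allowed."""
    allowed = classification in POLICY.get(account, set())
    ACCESS_LOG.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "account": account,
            "dataset": dataset,
            "classification": classification,
            "allowed": allowed,
        }
    )
    return allowed


def audit_report(account):
    """List the datasets an account was actually permitted to read."""
    return sorted(
        {e["dataset"] for e in ACCESS_LOG if e["account"] == account and e["allowed"]}
    )


# The RAG service account may read product docs but not finance data.
request_access("svc_rag_support_bot", "kb_articles", "product_docs")
request_access("svc_rag_support_bot", "payroll", "finance")
```

Denied requests are logged alongside allowed ones, so the same log can show both what a RAG workload used and what it tried to reach.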

Last step, keep the data secure at rest. The use of LLMs and GenAI will only accelerate the migration of sensitive data into the cloud. These data elements that were once protected on-prem will have to be protected in the cloud as well. But there is a catch. The scale requirements of this data protection will be a new challenge for businesses. You will not be able to point your existing on-prem-based encryption or tokenization solution to a cloud database like Snowflake and expect to get the full value of Snowflake.

When prospects or customers ask me, “What is ALTR’s solution for securing LLMs and GenAI?” I used to joke with them and say, “Nothing!” But now I’ve learned the right response: “The same thing we’ve always done to secure your data, just with even more precision and focus for today’s challenges.” The use of LLMs and GenAI is exciting and scary at the same time. One way to reduce the anxiety is to start with a solid foundation of understanding what data you have, how that data is allowed to be used, and whether you can prove that the data is safe at rest and in motion.

This does not mean you cannot use ChatGPT. It just means you must realize that you were once that careless child running with arms wide open to Mickey, but now you are the concerned parent. Your teams and company will be eager to dive headfirst into GenAI, but it’s crucial that you can articulate why this journey is complex and how you plan to guide them there safely. It begins with mastering the fundamentals and gradually tackling the tough new challenges that come with this powerful technology.