Format-Preserving Encryption: A Deep Dive into the FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges associated with using padding in FPE.

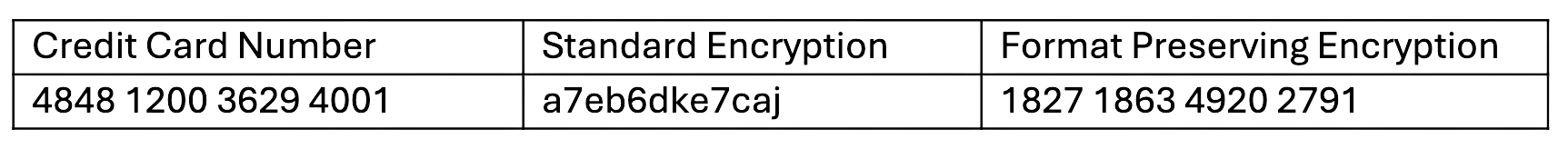

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption method specified by the National Institute of Standards and Technology (NIST). It was introduced in the draft first revision of NIST Special Publication 800-38G as a replacement for the original FF3 mode, and it is built on a Feistel network, a structure widely used in cryptographic applications. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves and processes them through multiple rounds, applying a keyed round function in each round.

2. Rounds: FF3-1 uses 8 rounds. Each round applies a round function to one half of the data and combines the result with the other half using modular addition (modulo the radix raised to the length of that half) rather than XOR, so the result always stays within the allowed alphabet. This process is repeated, alternating between the halves.

3. Keying: FF3-1 does not define its own schedule of per-round subkeys. The round function is built on AES, keyed with the same (byte-reversed) main key in every round. The rounds differ because the round number and one half of the tweak are mixed into the AES input.

4. Tweakable Block Cipher: FF3-1 is a tweakable cipher. A tweak (an additional, non-secret input, 56 bits long in FF3-1) is used along with the key, so the same plaintext encrypts to different ciphertexts under different tweaks. This makes it resistant to certain types of cryptographic attacks.

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.

1. Initialization: The plaintext is divided into two halves, and the tweak is split into two parts that are used in alternating rounds. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.

2. Round Function: In each round, the round function takes one half of the data, the round number, and one part of the tweak as inputs, encodes them into a single block, and encrypts that block with AES under the key to produce a pseudorandom output.

3. Combining Halves: The output of the round function is added to the numeric value of the other half, modulo the radix raised to the length of that half. The halves are then swapped, and the process repeats for all 8 rounds.

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
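
To make the round structure above concrete, here is a minimal Python sketch of a Feistel-style cipher over digit strings. It is not FF3-1: the round function is HMAC-SHA256 from the standard library standing in for the AES-based step, the tweak handling is simplified, and the names `toy_encrypt`, `toy_decrypt`, and `_round_value` are invented for illustration. It only shows how eight alternating rounds of modular addition keep the output in the same alphabet and length as the input, and it should not be used to protect real data.

```python
import hashlib
import hmac

RADIX = 10   # decimal digits only in this sketch
ROUNDS = 8   # FF3-1 uses 8 Feistel rounds
DIGITS = "0123456789"


def _round_value(key: bytes, tweak: bytes, round_no: int, half: str) -> int:
    """Stand-in round function (assumption): HMAC-SHA256, not FF3-1's AES step."""
    message = tweak + bytes([round_no]) + half.encode("ascii")
    return int.from_bytes(hmac.new(key, message, hashlib.sha256).digest(), "big")


def _check(text: str) -> None:
    if len(text) < 2 or any(ch not in DIGITS for ch in text):
        raise ValueError("expected a string of at least two decimal digits")


def toy_encrypt(key: bytes, tweak: bytes, plaintext: str) -> str:
    _check(plaintext)
    split = (len(plaintext) + 1) // 2
    a, b = plaintext[:split], plaintext[split:]
    for i in range(ROUNDS):
        m = len(a)
        # Modular addition keeps the result inside the m-digit domain.
        c = (int(a) + _round_value(key, tweak, i, b)) % RADIX**m
        a, b = b, str(c).zfill(m)
    return a + b


def toy_decrypt(key: bytes, tweak: bytes, ciphertext: str) -> str:
    _check(ciphertext)
    split = (len(ciphertext) + 1) // 2
    a, b = ciphertext[:split], ciphertext[split:]
    for i in reversed(range(ROUNDS)):
        m = len(b)
        # Undo the round: subtract the same round value modulo RADIX**m.
        c = (int(b) - _round_value(key, tweak, i, a)) % RADIX**m
        a, b = str(c).zfill(m), a
    return a + b
```

Calling `toy_encrypt(key, tweak, "4111111111111111")` returns another 16-digit string, and `toy_decrypt` with the same key and tweak recovers the input. Changing the tweak changes the ciphertext, which is the property the tweak is there to provide.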

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Improved Performance: FPE algorithms like FF3-1 are designed to be efficient, ensuring minimal impact on system performance. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower trust environments: Using a product like ALTR’s FPE, data engineers can use the ciphertext of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

Padding is a technique used in encryption to ensure that the plaintext data meets the required minimum length for the encryption algorithm. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE:

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
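
The padding trade-offs above are easier to see with a small sketch. As we read the FF3-1 draft, the number of possible inputs (the radix raised to the minimum length) must be at least 1,000,000, which works out to six characters for decimal digits. The helpers below (`min_length`, `pad_digits`, and `unpad_digits`) are hypothetical names and one possible scheme, not ALTR's implementation: they left-pad a short value with a fixed character and return the original length so the padding can be removed after decryption.

```python
def min_length(radix: int, floor: int = 1_000_000) -> int:
    """Smallest length n such that radix**n >= floor (assumed domain-size rule)."""
    n = 1
    while radix**n < floor:
        n += 1
    return n


def pad_digits(value: str, radix: int = 10, pad_char: str = "0") -> tuple[str, int]:
    """Left-pad value to the minimum length; also return the original length."""
    target = max(min_length(radix), len(value))
    return value.rjust(target, pad_char), len(value)


def unpad_digits(padded: str, original_length: int) -> str:
    """Drop the padding that pad_digits added, keeping the last original_length characters."""
    return padded[len(padded) - original_length:]
```

Note what this implies in practice: the padded value is what gets encrypted, so the stored ciphertext is longer than the original input, and the original length has to be kept somewhere for decryption. That extra bookkeeping is exactly the added complexity, and the potential source of leakage, listed above.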

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

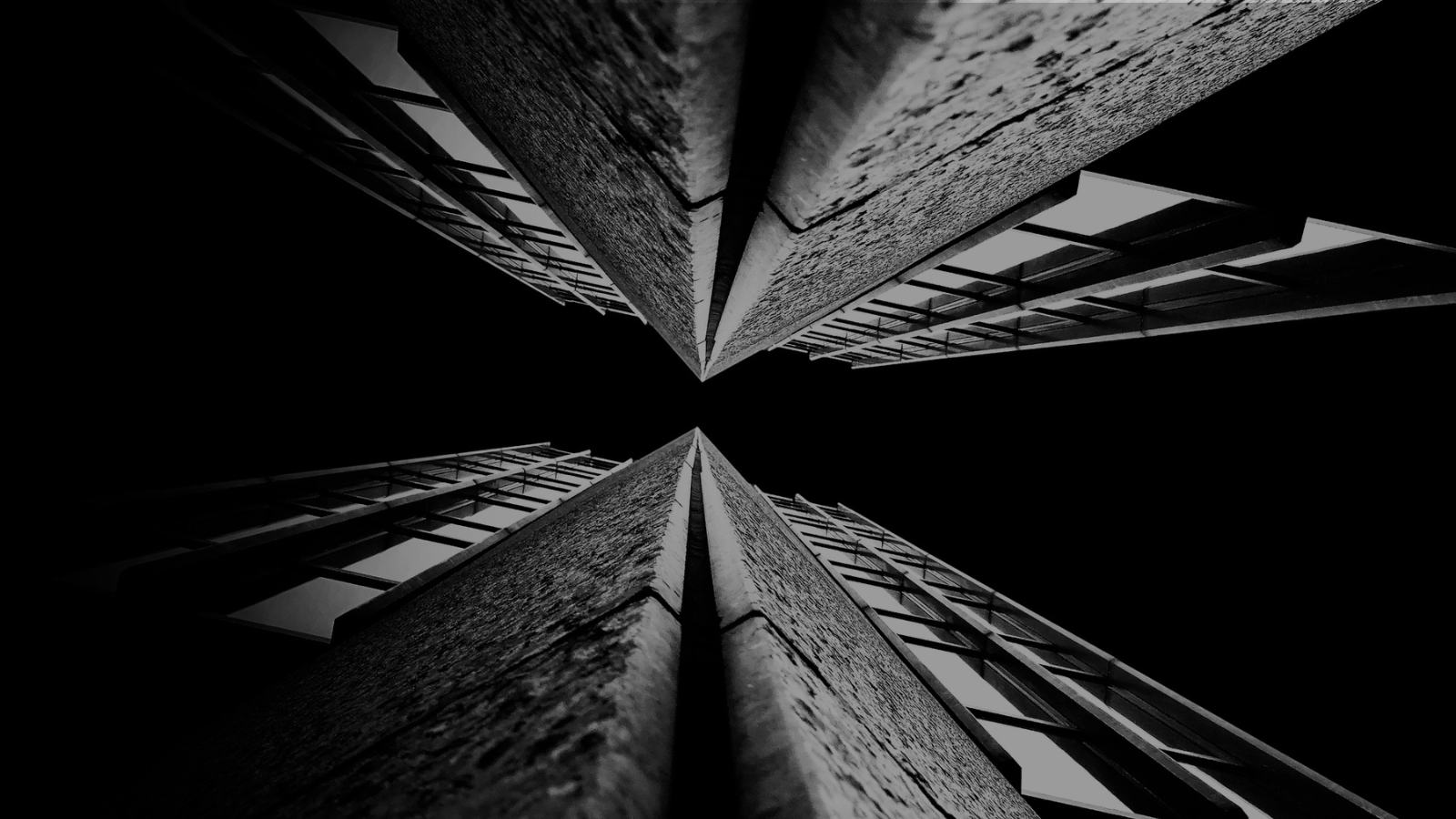

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still nervous wrecks. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI is causing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when the compute and storage are tightly coupled. Securely sharing data with trusted parties outside the walls of the data center is clunky at best, downright dangerous in most cases. And forget running your own GenAI models in your data center unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

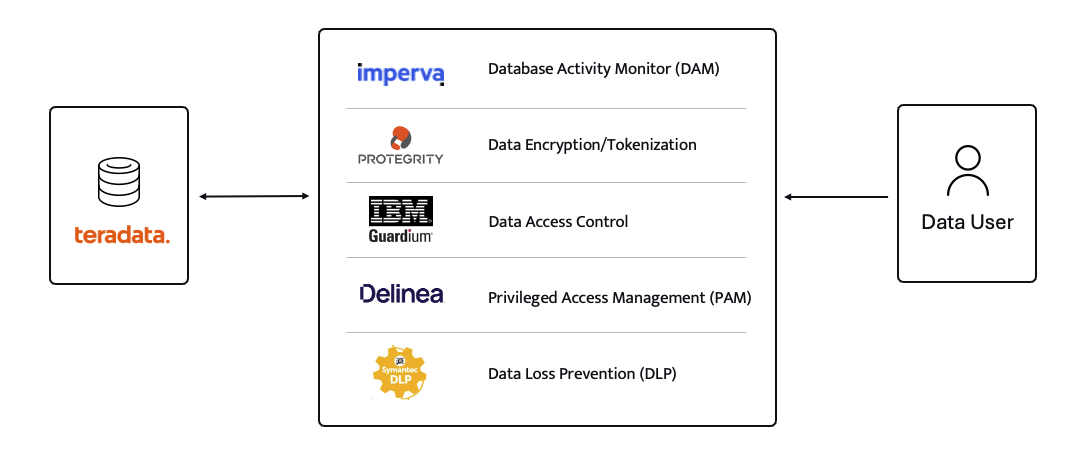

ALTR’s platform has been purpose-built to recreate and enhance the protections required when moving from Teradata to Snowflake. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR to help them with data security on Snowflake. Cool! A member of the Data & Analytics team tried our product, and it was love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

The CISO’s point of view was that ALTR’s security solution could be completely disabled or removed, and would not be helpful in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO: all of those things are possible. Here is what I wanted to say to the CISO if they had given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet its use has powerful and far-reaching implications.

One of the main points I would have liked to make to the CISO is that as a user of Snowflake, the responsibility to secure that ACCOUNTADMIN role is squarely in their court. By now I’m sure you have all seen the news and responses to the Snowflake compromised accounts that happened earlier this year. It has been established that accounts left unsecured by Snowflake customers enabled the data theft. There have been dozens of articles and recommendations on how to secure your accounts with Snowflake, and even a mandate of minimum authentication standards going forward for Snowflake accounts. You can read more here about securing the ACCOUNTADMIN role in Snowflake.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. This is the purpose of ALTR’s Data Security Platform: to help you get a handle on a part of the problem that is so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly. You must keep up.

2. You must be able to prove to the business that you have a least-privilege access mechanism. Data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell-level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges: terabytes of data and growing daily, more users with more applications, and trusted third parties who want to collaborate with your data. All of this leads to an unmanageable set of internal processes that slow down the business and introduce risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt he had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team’s use of ALTR?” I don’t know the answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure, and I am by no means saying our platform can protect your Snowflake data 100%. Data security is all about reducing risk. Control the things you can; monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015 the migration of corporate data to the cloud has rapidly accelerated. At that time an estimated 30% of corporate data was in the cloud; by 2022 that share had doubled to 60%, in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data has moved to the cloud, new challenges have presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. This brought on different cloud computing-related cost structures and new skillsets with new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate with the move to the cloud. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to Cloud Data Warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation and, it feels like, every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need for it to be cataloged, analyzed, and used with the promise of making our personal lives better, for a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation then began, and world governments responded with regulations such as GDPR, CCPA and, most recently, the European Union’s AI Act. The risk and fines have been in the billions. This is a story we already know well. Thus, Data Security and Privacy have become a critical function, primarily for the obvious use case: compliance and regulation. Yet only 11% of organizations have encrypted over 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enables real-time supply chain visibility and dynamic changes to pricing strategies, and lets organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real Time Analytics - Because FPE returns data in the original format, the data keeps the same length and structure and remains useful, so more data engineers, scientists, and analysts can work with it without being exposed to sensitive information.

Data Sharing – FPE enables sharing of sensitive information, both personal and confidential, supporting secure collaboration and innovation alike.

Proactive Data Security – FPE allows for the anonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding to ransom a company that takes a more proactive approach using FPE and other Data Security Platform features in combination.

Empowered Data Engineering – With FPE, data engineers can still build, test and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failure. Data validations and data quality checks for formats, lengths and more can be written and tested without exposing sensitive information. Queries that rely on equality, such as joins, grouping, and counts, can still run on the encrypted values without decryption, because FPE is deterministic for a given key and tweak; queries that depend on the ordering or arithmetic value of the original data, such as range filters or sums, still need decrypted values. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with proper rights to see the original values of data.
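
As a rough illustration of the kind of check a data engineer could write against FPE-protected columns, the Python sketch below validates a 16-digit card format and compares group sizes. The function names are hypothetical, and the sketch assumes the protected column was produced by a deterministic, format-preserving cipher, so each distinct plaintext maps to exactly one distinct ciphertext of the same shape.

```python
import re
from collections import Counter
from typing import Iterable

# Format rule for the column: exactly 16 ASCII digits.
CARD_PATTERN = re.compile(r"[0-9]{16}")


def is_valid_card_format(value: str) -> bool:
    """Format-only check; it never needs to see the real card number."""
    return CARD_PATTERN.fullmatch(value) is not None


def group_sizes(values: Iterable[str]) -> list[int]:
    """Sorted sizes of the groups a GROUP BY on this column would produce."""
    return sorted(Counter(values).values())
```

Under that assumption, `is_valid_card_format` passes on the protected values whenever it passes on the originals, and `group_sizes` returns the same list for the protected column as for the plaintext column, so both checks can be developed and tested in a lower-trust environment.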

Cost Management – While FPE does not come close to solving Cost Management in its entirety, it can definitely contribute. We are seeing a need for FPE as an option instead of replicating data in the cloud to development, test, and production support environments. With data transfer, storage and compute costs, moving data across regions and environments can be really expensive. With FPE, data can be encrypted and decrypted with compute, which is a less expensive option than organizations' current antiquated data replication jobs. This makes FPE a viable cost savings option for producing production-ready data in non-production environments. Look for a future blog on this topic and all the benefits that come along.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well-documented challenges in the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role and attribute-based access controls and data activity monitoring will be needed to have a proactive approach towards security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for Data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations, as there have been many high-profile data breaches recently. There are several ways to secure the data, including restricting access, masking, encrypting or tokenizing. These can pose some challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

With a standard encryption type, the result is a completely different output, typically a longer string that no longer looks like a card number. This may make it incompatible with systems which require or expect a 16-digit numerical format. Using FPE, the encrypted data still looks like a valid 16-digit number. This is extremely useful where data must stay in a specific format for compatibility, compliance, or usability reasons.

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next, the NIST FF3-1 algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then selectively be decrypted using ALTR’s access policies.
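
To give a feel for what "understanding the input format" can involve, here is a small Python sketch that keeps separators such as dashes in place and passes only the digits to a cipher. This is an illustration under our own assumptions, not ALTR's implementation: `encrypt_preserving_layout` is a hypothetical name, and `encrypt_digits` is a placeholder callback standing in for a real format-preserving cipher such as FF3-1 with a key and tweak already bound.

```python
from typing import Callable

DIGITS = set("0123456789")


def encrypt_preserving_layout(value: str, encrypt_digits: Callable[[str], str]) -> str:
    """Encrypt only the digits of value, leaving every other character in place."""
    digits = "".join(ch for ch in value if ch in DIGITS)
    encrypted = encrypt_digits(digits)
    # The callback must be format preserving: same length, digits only.
    if len(encrypted) != len(digits) or any(ch not in DIGITS for ch in encrypted):
        raise ValueError("encrypt_digits did not preserve the digit format")
    replacement = iter(encrypted)
    # Walk the original layout, substituting encrypted digits position by position.
    return "".join(next(replacement) if ch in DIGITS else ch for ch in value)
```

With this shape, a phone number written as three groups separated by dashes comes back as three groups separated by dashes, with only the digits changed. Decryption would use the same walk with the inverse callback.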

FPE offers several benefits for organizations that deal with structured data:

1. Adds an extra layer of protection: Even if a system or database is breached, the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations like PCI-DSS, HIPAA, and GDPR mandate safeguards, such as encryption, for sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

When one of my relatives started participating in a research study run by a major west coast university, it got me thinking about the personal data higher education organizations gather and administer. For this study, he shared all his previous medical history; handed over personal data including name, address, and Social Security number; and goes in once a week to have his vitals read and recorded. This study includes hundreds of people and is just one research project in one department in a huge organization.

Universities are really like large conglomerates that can include healthcare facilities, scientific and medical research laboratories as well as businesses that market to potential students for recruiting and alumni for fundraising. This makes universities a substantial target for bad actors looking for rich sources of data to steal. In April 2021, Gizmodo revealed that several leading US universities had been affected by a major hack, with some sensitive data revealed online in an attempt to pressure ransom payments. The COVID-19 pandemic brought increased threats to higher ed which are negatively impacting school credit ratings, according to Moody’s.

And because universities span across multiple industries, they must comply with multiple relevant laws including PII and HIPAA privacy protections in addition to specific regulations around student data laid out in the Family Educational Rights and Privacy Act (FERPA). FERPA puts protections around “student education records” including grades, transcripts, class lists, student course schedules, and student financial information.

This all makes data privacy more complicated, but even more crucial for higher education organizations.

The good news is that all this data is worth the risk. It’s leading to medical innovations like the new drug trial my relative is participating in. It’s also leading to better outcomes for students. After seeing research from other universities on the power of momentum in student success, Michigan State University analyzed 16 years of student data and found a similar result. Students who attempted 15 or more credits in their first semesters saw six-year graduation rates close to 88 percent compared to the university’s overall 78 percent graduation rate. This led the school to launch a campaign encouraging students to attempt 15 credit hours to graduate faster and save money.

Arizona State University, Georgia State and other leading universities developed adaptive learning technology that continually assesses students and gives instructors feedback on how they can adjust to class or individual student needs. This contributed to a 20% increase in completion for 5,000 algebra students. The University of Texas at Austin is doing something similar to Michigan State by using more than 10 years of demographic and academic data to determine the likelihood a student will graduate in four years. Students identified as at-risk are offered peer mentoring, academic support and learning through small affinity groups.

As higher ed organizations strive to optimize use of data, they’re migrating workloads to cloud data platforms like Snowflake to optimize financial performance and provide streamlined analytics access. They’re making data available to increasing numbers of users; collecting and sharing sensitive data including PII and PHI, while complying with relevant regulations; and distributing data across multiple tools for data classification and business intelligence.

This would be difficult enough with the siloed processes some universities face. Trying to coordinate this across multiple departments, conflicting architectures, and legacy tools can be almost impossible. In addition, giving more and more users access to more data creates more and more risk, exposure, attack surfaces, and potential credential threats. “All or nothing” access doesn’t work at scale. In order to ensure that they’re meeting regulatory requirements, universities must have visibility into who is accessing what data, when and how much, have the power to control that access, and document that access on command.

ALTR can help by unifying data governance across the data ecosystem. ALTR can help you know, control and protect all your data, no matter where it lives. We do this by providing discovery, visibility, management, and security capabilities that enable organizations to identify sensitive data, see who’s accessing it, control how much is being consumed, and take action on anomalies or policy violations. And ALTR works across all the major cloud databases and business intelligence, ETL, and other tools, such as Snowflake, AWS, ThoughtSpot, Matillion, OneTrust, Collibra, and more.

Don’t let data privacy or protection concerns become a roadblock to the essential research and insights universities provide. Protect data throughout your environment and stay compliant with all the regulations with unified data governance from ALTR.

Interested in how ALTR can help simplify data governance and protect your sensitive data? Request a demo!

After many in-depth, heated conversations around the ALTR office about the dynamics of the data governance and security industries, in 2021 we decided it was time to jump into the online conversation with both feet! January is a great time to look back at our top data governance blog posts for the year, and there are some clear trends in what we covered: we feel passionately that data governance without security just isn’t enough, that knowing your data means knowing how your data is being used and why, and that Snowflake + ALTR delivers an easy yet powerful solution to the data governance and security problem.

Looking at our top data governance blog posts for the year, we can tell those topics resonated with you….

The Hidden Power of Data Consumption Observability

Seeing who’s consuming what data, where, when, why and how much lets you make better decisions for your business.

Do You Know What Your Tableau Users Are Doing in Snowflake?

If you’re using a Tableau service account to let your users access Snowflake data, you may not be able to see what data users are accessing or govern them individually. ALTR can help.

Why “Why?” is the Most Important Question in Governing Data Access

When you know why data is being used, you can more easily create purpose-based access control policies that custom-fit your organization, are simpler to automate, and reduce risk.

The Shifting Lines Between Data Governance and Data Security

Automated policy implementation lets data governance teams control data access, allowing for greater effectiveness and efficiency across processes.

No Matter What You Call It, Data Governance Must Control and Protect Sensitive Data

The Forrester Wave™: Data Governance Solutions report shines a light on the Data Intelligence/Governance space.

Thinking About Data Governance Without Data Security? Think Again!

Traditional data governance without security puts your company and your data at risk. A complete data governance solution must include security.

Go Further, Faster with Snowflake and ALTR

Boost your value from Snowflake more quickly by embracing ALTR’s complete data control and protection right from the start.

Why DIY Data Governance When You Could DI-With ALTR for Free?

What if controlling and protecting your data could be free, easy and in some cases, more powerful? See why doing it with ALTR Free is better.

This year we plan to dive deeper into where the data governance industry is falling short, why data privacy and protection will continue to become more and more critical, and how you can make sure you’re prepared for what’s coming down the pike in 2022.

Sign up for our newsletter to get the latest first!

Consolidating your business data in cloud data warehouses is a smart move that unlocks innovation and value. All your data in one place makes it easier to connect the dots in ways that were impossible or unimaginable before. For instance, a retail chain can optimize sales projections by analyzing weather patterns, or a logistics company can more accurately predict costs by accounting for the salaries of all the people involved in a shipment. The key to making a project like this successful is to overcome the cloud migration challenges that can pop up along the way.

Getting those new data-fed insights is a process that starts with moving the data to a consolidated cloud data warehouse like Snowflake. An extract, transform, and load (ETL) migration technology partner simplifies moving or loading the data from each of your company’s locations into a cloud data warehouse to make it analytics-ready in no time. Migrating data is what these companies do best. Data governance and sensitive data security are not their priority, however, which is a tremendous concern when the most sensitive data is often the most valuable, both to the business and to bad actors. That makes sensitive data migration one of the biggest cloud migration challenges. Confidential information like customer PII, which includes email addresses, home addresses, or social security numbers, can be extremely useful to analytics. For example, it can help marketing teams know where, when and to whom they should target a specific offer if they can determine what age, sex, and location are most likely to buy. However, if breached, customer PII can create significant legal exposure and damage your reputation.

The need for high levels of data protection and secure access can cause significant tradeoffs in data usability and sharing, which adds risk and complicates matters for analytics teams. Even the built-in security and governance capabilities of data warehouses require a level of database coding expertise that is costly to implement and time-consuming to manage at scale. Distributed enterprises need a thoughtful yet simpler approach to protecting data in the cloud that keeps information airtight and doesn’t slow down access and progress.

Before you migrate data to the cloud, let’s understand how cloud migration data security can help overcome your cloud migration challenges - and why some solutions fit better than others for your specific business needs. We all know that we must protect sensitive data in order to comply with appropriate regulations and maintain the trust of our customers. What is not always clear is if the same standards for storing and protecting data on-premises also apply to data in the cloud.

These requirements include using NIST-approved security or standards for at-rest data protection. At a minimum, we must ensure there’s not a single door for hackers to get through, known as a single-party threat. If data can be de-obfuscated by just one person, the protection method doesn’t count. For example, simply reversing a medical record is not enough. Encryption meets this requirement because you need both the encrypted data and the key to unlock it in order to access the original data.

For data in the cloud, however, you need to rethink tooling and management decisions. Let’s look at methods for data obfuscation including encryption, but through a cloud lens. You’ll quickly run into several issues:

To avoid these access and encryption issues, some security methods rely on transforming data through “one-way” techniques like hashing before storing the hash in the cloud. Hash codes ensure privacy and allow users to still know the dataset comprises, for example, social security numbers. However, an authorized user who needs the real social security number won’t be able to retrieve it, because once hashed, the data cannot be recovered in the cloud database.
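To make the one-way property concrete, here is a minimal sketch using Python's standard `hashlib` and `hmac` modules. The salt value and the `hash_ssn` helper are hypothetical names chosen for illustration, not part of any product described here.

```python
import hashlib
import hmac

# Hypothetical secret salt; assumed to be kept outside the cloud database.
SECRET_SALT = b"example-salt-not-for-production"


def hash_ssn(ssn: str) -> str:
    """Return a keyed SHA-256 digest of an SSN. There is no inverse function."""
    return hmac.new(SECRET_SALT, ssn.encode("utf-8"), hashlib.sha256).hexdigest()


stored = hash_ssn("123-45-6789")

# The same input always yields the same digest, so equality checks still work.
assert stored == hash_ssn("123-45-6789")
# A different input yields a different digest, and nothing maps a digest back.
assert stored != hash_ssn("987-65-4321")

print(len(stored))  # 64 hex characters, whatever the input length
```

The only way to "recover" a value from the stored digest is to guess candidates and hash each one, which is why an authorized user cannot simply read the original number back out.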

Even anonymization techniques, such as storing the data as a range, limit the application of data. You might not need an individual anonymized data point today, but you may very well need it later. Your business may depend on allowing some authorized users to have access to the original data, while ensuring it is meaningless and opaque to everyone else.

If analytics is the goal of your sensitive data migration, then the preferred security solution is tokenization for its ability to balance data protection and sharing.

When it comes to solving security-related cloud migration challenges, tokenization has all the obfuscation benefits of encryption, hashing, and anonymization, while providing much greater usability for analytics. Let’s look at the advantages in more detail.

One of the best approaches to solve your sensitive data cloud migration challenge is to embed data security and governance right into your migration pipeline. ALTR has partnered with ETL leader Matillion to do just that. ALTR's open-source integration for Matillion ETL lets you tokenize data through Matillion so that it's protected in the flow of your cloud migration. The ALTR shared job is used to automatically tokenize, as well as apply policy on sensitive columns that have been loaded into Snowflake.

See how it works:

Given the volume of data being generated and collected, enterprises are looking for ways to scale data storage. Migrating their data to the cloud is a popular solution, as it not only solves data volume problems, but also offers numerous advantages. While it would be nice to flip a switch and be in the cloud, it’s not that simple! Moving to the cloud requires a strategy and a big part of that is data security. Tokenization solves one of the biggest cloud migration challenges: sensitive data migration. It delivers tough protection for sensitive data while allowing flexibility to utilize the data down to the individual, allowing companies to unlock the value of their cloud data quickly and securely.

Organizations are moving more data to the cloud than ever before. According to a 2019 Deloitte study of more than 500 IT leaders, security and data protection is the top driver for moving data to the cloud, moving ahead of traditional reasons like reduced costs and improved performance. With cyber security threats continuing to increase in volume and effectiveness, it makes sense that companies would look to cloud providers and online data warehouses for expert, enterprise level security and protection.

But while some companies are comfortable with this shift, others feel like it’s too much too fast. Because they've been used to maintaining data within their own data centers with their own security solutions and policies, moving both data and security to a third party can seem like there’s just too much outside IT and security managers' control. Various security providers have stepped in with products that deliver that control, many using a cloud proxy server. While at first glance this may seem like an ideal solution, it’s really a step back from the benefits of moving to the cloud. Let's look at when it makes sense to use a cloud proxy for data security and when it does not.

Vendor-provided cloud proxy security solutions do have some advantages: they can allow you to set up your own policies and maintain custom control over who accesses data, when and how. They can also allow you to centralize control across a wide variety of cloud data stores because they’re not tied to a specific platform or API. But along with this level of control comes additional work for your team.

You’ll have to worry about deploying, maintaining, upgrading and scaling this additional component in your infrastructure – responsibilities you’ve tried to avoid by moving to the cloud. If you choose a standalone cloud proxy you’ll run into the privacy issues of sending data through a third party. You’ll also need to modify all your applications to go through the cloud proxy. And if you have applications that don’t go through the proxy, you can’t see what users are doing with your data, let alone stop them. The applications that do go through the proxy may run into issues when cloud platforms make configuration changes you’re unaware of, forcing downtime. Many of the largest cloud applications and data platforms, like Microsoft Office 365, discourage proxy use.

The good news is that today you have options that didn’t exist even a few years ago. As more and more applications, workloads, and data move to the cloud, more and more supporting infrastructure is moving as well. Leading cloud apps and platform providers are building in ways for you to have the same kind of hands-on control over your data you had when it lived on your infrastructure or even via a cloud proxy security solution – because it is your data. Salesforce, for example, allows users to disguise and tokenize emails in their platform using a third-party service, making them visible only at the point of sending a mass email via marketing automation software. The leading SaaS providers understand they can gain competitive advantage by helping users who were not as comfortable with the move to the cloud get comfortable.

Snowflake is a leader in this space as well, seeing themselves as part of that larger cloud ecosystem. Snowflake provides an extensible platform that allows you to choose to run the platform’s powerful security tools or integrate third-party security solutions like ALTR that sit beside the data, instead of between the data and your users like a proxy would. You get all the benefits of a cloud solution – scalability, stability, low maintenance – and all the advantages of running your own security – the ability to mask data so it’s invisible to Snowflake, for example. This allows you to maintain that sense of “checks and balances.”

Today’s best platforms understand that they’re part of a cloud infrastructure and data ecosystem, and they’re allowing other products to plug and play natively vs using a cloud proxy to provide those features. The future of sensitive data in the cloud is integrations like this, and cloud data stores will continue to launch features that allow users to control their data more intimately.

The benefits of a cloud platform with control of your sensitive data. That’s where the future is.

Want to see how ALTR integrates with OneTrust and Snowflake? Check out our on demand webinar, "Simplifying Data Governance (and Security) through Automation." Click here to learn more!

Imagine you’re in the midst of a brand-new data governance project. You’ve done your data discovery and classification – you know where your sensitive data is. Now you just have to write the policies that determine who gets access to it. But how should you approach setting up those permissions? This blog will walk you through considerations for RBAC vs ABAC vs PBAC. If you already know what those mean, great. If not, don't worry, we'll explain.

Starting with marketing, you think, “Marketing sends emails to customers, so they need access to customer information.” It just makes sense, right? This would be “role-based access control” (RBAC) – users get access to data based on their spot in the company org chart.

But if you start to dig deeper, it gets more complicated – does the VP of brand need customer email access? Does a copywriter? Unless you’re actually in marketing – or product development or finance – it can be difficult to know what roles actually need access to specific sensitive data in order to do their jobs. Does everyone in finance need access to all the financial information? Does a UX Designer in the product team need access to all the technical product specs? People on the same teams can have very different jobs and need very different tools and access. And, if people have access to sensitive data that’s not essential to their jobs, it just increases the risk of potential theft or loss.

How could you find out who needs what? Do a survey or reach out to each individual to ask? Or work with the department head who may not even know exactly what each person needs? Now that can get really time consuming. Let's take a look at some other approaches - RBAC vs PBAC vs ABAC - and don't worry, we'll explain each.

You could go ahead and set up your role-based access controls – give access to the marketing team – and then see what happens. See who uses what data, when and how much. And then you can ask the most important question: why? You can get very targeted about why a user is accessing certain data at certain times. Maybe the Marketing Ops Specialist is accessing 3,000 customer emails on Thursdays to send out a coupon for the weekend. Not only does watching consumption allow you to narrow in on the data being accessed, but it also acts as a source of truth. Instead of relying on people to accurately tell you what data they need, you can see what data they’re actually using.

Once you understand the reason the data is being accessed and agree that this is an acceptable business usage, you can put policies in place that only allow this data to be accessed by this user at this time in this amount. We call this purpose-based access control. Your policies are no longer about whether someone is “on the marketing team”, but rather “this person needs to send emails for the marketing team.”

Purpose-based access control might be considered a form of “attribute-based access control” (ABAC) where the attribute is simply “purpose.” Focusing just on purpose has two advantages over full-fledged ABAC, though: it’s significantly less complicated, and it’s much more accurate. Instead of setting up and maintaining thousands of attributes about the user, the data, the activity, and the context, you’re just focusing on the one that really matters: why the data is needed. And since it’s based on real consumption information, there is no guessing who might need what when or where. Instead of trying to write rules for a limitless number of contingencies, purpose-based access control narrows in on actual data usage and need.

This leads to another key advantage: if you know “why” someone needs the data, you also know “how much” they need. Going back to our marketing email example, you can look at the pattern of consumption over the last few weeks and find that they generally only send out about 3,000 emails each time. It would be out of the ordinary to send 10,000. So, you can set up your “this person sends marketing emails” purpose-based policy to have a limit of 3,000 at a time, only on Thursdays. If they need to do something out of the norm, they can ask for that permission. Setting thresholds like this protects privacy and reduces the potential for unintentional data leaks or credentialed access theft.
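The threshold described above can be sketched as a small policy check. This is an illustrative model only: `PurposePolicy`, `is_allowed`, and the user name are hypothetical, and a real enforcement layer would sit in front of the database rather than in application code.

```python
from dataclasses import dataclass
from datetime import date

THURSDAY = 3  # date.weekday() numbers Monday as 0


@dataclass(frozen=True)
class PurposePolicy:
    """Hypothetical policy record: who may pull how much data, when, and why."""
    user: str
    purpose: str
    max_rows: int
    allowed_weekday: int


def is_allowed(policy: PurposePolicy, user: str, rows: int, on: date) -> bool:
    """Allow the request only if user, volume, and day all match the policy."""
    return (
        user == policy.user
        and rows <= policy.max_rows
        and on.weekday() == policy.allowed_weekday
    )


coupon_policy = PurposePolicy(
    user="marketing_ops_specialist",
    purpose="send weekend coupon emails",
    max_rows=3000,
    allowed_weekday=THURSDAY,
)

thursday = date(2022, 1, 6)
friday = date(2022, 1, 7)

print(is_allowed(coupon_policy, "marketing_ops_specialist", 3000, thursday))   # True
print(is_allowed(coupon_policy, "marketing_ops_specialist", 10000, thursday))  # False
print(is_allowed(coupon_policy, "marketing_ops_specialist", 3000, friday))     # False
```

A request for 10,000 emails, or a request on the wrong day, falls outside the stated purpose and would need a separate approval.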

Knowing “why” also puts you better in line with privacy regulations that require companies to not only provide what data they store on people, but also for what purpose. Having that information at your fingertips makes it easier to comply with the law.

Finally, because it’s based on just one variable – does this person need to do this activity – it’s much simpler to automate approvals and access when a new person joins the team or if the activity shifts to another team member.

Now imagine you had included watching consumption as part of your data discovery and classification project – as you were finding sensitive data, you were also finding out how it was being used. Maybe marketing isn’t the only group using marketing data. You would have a true-to-life map of data usage throughout the company, you could build your purpose-based access controls right the first time based on real need, and you would spend less time adjusting policies, making exceptions, and tightening access.

Purpose-based access control is as simple as RBAC in that it focuses on just one variable, yet it can be a better fit to your org because it’s based on how data is actually used, it’s simpler to automate, and it reduces risk by limiting access to a narrow amount of data only to those who need it for a specific purpose.

Now, the next question is, “Why aren’t you doing this already?”

Companies often think they have just two choices for Data Governance: expensive or DIY. Expensive means options that start at $100k and only go up. Expensive can also mean long implementations, software changes, and a hefty investment in people to set up and maintain the tool.

It’s no wonder you may think, “We’ll just do it ourselves!” But that comes with its own costs: expensive programmers to write code, time commitments that bog down other data priorities, difficulty scaling with more data and users, and limits on what you can do.

What if controlling and protecting your data could be free, easy and in some cases, more powerful? Let’s look at how to do some basic tasks DIY on Snowflake versus with the ALTR Free Snowflake upgrade:

See how easy Data Masking is in ALTR vs Snowflake:

Ask a company which role or team is ultimately responsible for ensuring data protection or data security, and they often cannot give a single, clear answer. Even if the organization has a Chief Data Officer or designated data protection officers, responsibility is typically distributed across various functions under the CTO, the CIO, the risk or compliance team, and the CISO, with input from business units, data scientists, business analysts, product developers, and marketers.

While it might sound nice to say “Data security is everybody’s job,” in practice this scenario commonly leads to an ambiguous, inefficient mess — and serious security gaps.

Even if they do not make software as their primary work, virtually every enterprise today is heavily reliant on software, in the sense of purchasing or creating applications to improve processes. Examples abound: Insurance companies build mobile apps so policyholders can file claims and adjusters can fill out damage reports. Big retailers and shippers write massive logistical programs to manage complex supply chains. Many types of companies create their own software for making forecasts. And almost every enterprise tasks solution architects or other application owners with implementing major third-party packages for many corporate functions.

Of course, software vendors are even more heavily engaged in this work, and tensions abound. The CTO wants software that makes the company’s IP portfolio more valuable, product and marketing teams want apps that are better and cheaper, the CISO wants the product to be more secure, and so on. Application owners and the developers who work with them can be pulled in different directions as they try to create and manage highly functional apps. In this setting, security and governance concerns can easily fall by the wayside — affecting not just the vendor itself, but all of their clients as well.

All of these organizations rely heavily on the data that flows into and out of enterprise applications. The good news is that these apps function as superhighways for the flow of data, bringing huge benefits in terms of productivity.

But the benefits also come with real risks. Now more than ever, business apps handle many kinds of data coming in at all hours from all over the map, and then pass that data to any user with the right credentials. In many cases, unfortunately, this includes exposing sensitive data to employees who don't need it for their jobs, or who shouldn't be permitted to see it at all. Giving so many people access to that much data creates serious potential hazards even with traditional cybersecurity measures in place, as a glance at the past decade of headlines about corporate data breaches makes obvious.

When implementing data protection is so fragmented, no single team is given real responsibility, much less empowerment, to carry out the task. The result? Data security falls between the cracks.

There is an answer to this dilemma: put the responsibility for data protection in the hands of the application owners who create or manage the applications that use the data, and empower them — and the development teams that work with them — accordingly. Such empowerment implies removing organizational roadblocks and using appropriate technology to handle the burdens of data protection. This quickly improves data security and compliance, but it also boosts innovation and competitiveness over the longer term.

ALTR co-founder and CTO James Beecham recently led a discussion of these issues in a Data Protection World Forum webinar, “Data Protection Is Everyone's Job, so It's No One's Job.” He was joined by Jeff Sanchez, a managing director at Protiviti who draws on his nearly thirty years of industry experience as he leads that firm’s global Data Security and Privacy solutions. During the session, these experts explained exactly how organizations can empower application owners and development teams with solutions that enable them to quickly incorporate security and compliance at the same time — and at the code level.

Access the webinar now so you can find out how this approach not only protects the organization from data breaches and compliance failures, but also enables personnel across many functions to improve innovation and competitiveness.

We've partnered with Chicago-based data and analytics consultancy firm, Aptitive, to drive innovation in the cloud for our customers; the first order of business was to chat with their CTO, Fred Bliss, regarding data-driven enterprises. Fred's experience with enterprise organizations to develop custom applications, data pipelines, and analytical data platforms makes him an expert when it comes to best practices for organizations that want to become data-driven. In preparation for our upcoming webinar with Aptitive on February 17, we had a few questions for Fred; his answers are certainly worth sharing.

"Data-driven" is both a buzzword and a reality. When you hear that someone wants to become data-driven, you have to look past the technology angle and into the people and process side of the business. An organization could build the greatest, most innovative analytics technology in the world, but if it's driven entirely by IT in a "build it and they will come" approach, it will likely fail due to poor adoption. When I see data-driven done right, it's creating a vision and plan collaboratively with both business and IT leaders, getting a business executive to sponsor and spearhead the effort, and adopting the platform in their day-to-day operations. When a customer of ours started driving every executive meeting using dashboards and data to make decisions, instead of "gut feels", we knew a change had happened in the organization at the top, and it paved the way for the rest of the organization to quickly follow suit.

1. Sponsorship from a key business leader.

2. Connecting technology and development efforts directly to a business case that carries a big ROI.

The days of spending a year (or more) building the "everything" enterprise data warehouse are over - start small, and start with providing real insights that drive action. Dashboards that provide "that's interesting" metrics are interesting - but so what? We need to use data that goes beyond telling us what happened, and instead points business leaders to where they need to focus their initiatives to take appropriate action.

100% IT-driven projects. As much as I love building a beautiful back-end architecture, if it's not solving the pain of a business or delivering a new opportunity that they never had before, it's not going to compel anyone to do anything differently. While some IT-driven projects make sense (for cost reduction, whether in technology cost or the opportunity cost of maintaining a complex system), the real adoption comes when the business is able to do things more quickly than they could before.

It significantly reduces the future risk of managing security in an analytics environment. While basic security is a no-brainer (SSO, authentication, row-level security, etc.), when you have a complex organization, effectively designing a security model that can scale takes time, thought, and long-term planning. An organization's data is its most valuable asset. A security-first approach ensures that as the company grows and scales its data initiatives, security can scale with it rather than holding it back from opportunities.

A massive thank you to Fred from the whole ALTR team for taking the time to share some of your knowledge with us and our readers. For more insights from Fred as well as our own CTO, James Beecham, tune in to our webinar on February 17th: The Hidden ROI - Taking a Security-First Approach with Cloud Data Platforms.

In preparation for a recent webinar, I chatted with both the other presenters to get deeper insight into the webinar topic “A Security-First Approach to Re-Platforming Data in the Cloud” and to give you an idea of what you can expect to learn from our On-Demand webinar.

This post features Omer Singer, the Head of Cyber Security Strategy from Snowflake, another industry expert with years of experience in both cybersecurity and cloud data warehouses.

As I’m sure you already know, Snowflake enables organizations to easily and securely access, integrate, and analyze data with near-infinite scalability. The rapid adoption of solutions like Snowflake is the main reason we are discussing what it looks like to re-platform data in the cloud. Who better to give us valuable insight and lessons learned than one of their own?

Here's some of what Omer had to say:

1. What's a common mistake you see companies make when re-platforming data in the cloud/Snowflake?

Companies are used to thinking about network security architectures, and they've been adapting that approach to cloud security. When it comes to data security, there is still a tendency to start with a flat architecture that relies too heavily on authentication as the exclusive security control. In fact, Snowflake has granular RBAC capabilities that are very capable of restricting data access to the right people. While Snowflake has automated nearly all the onerous management tasks, access control is one of those things that each org needs to tailor for itself. Because it's on the customer to manage who has access to what datasets, it's also on the customer to monitor for account compromise and abuse, no different from monitoring infrastructure cloud activity. That's something that security teams are becoming more aware of recently and I'm glad that they have solutions like ALTR that can help them be successful.

2. What are the benefits of a Security Data Lake? Why is it important?

Anyone that's paying attention to the headlines can tell that cybersecurity has yet to achieve its objective of companies operating online with assurance. Instead, there are justified concerns around the risk companies take on by adopting new technologies and becoming more interconnected. The answer is not to avoid progress but to see these powerful new technologies as opportunities for achieving radically better cybersecurity. The cloud, with its bottomless storage and nearly unlimited compute resources, can become an enabler for big progress in areas like threat detection and identity management. A security data lake is really just a concept that says "Infosec is joining the rest of the company on a cloud data platform". At Snowflake, we're starting to see the impact of this movement at our customers and it's very exciting.

3. What do you hope the audience takes away from this webinar?

It's not like security teams have an overabundance of time on their hands but I'm hoping that they use this webinar as an opportunity to revisit their data security strategy. Just like everyone in the audience has learned in the past that using the public cloud doesn't mean not worrying about cloud security, I hope there's a similar aha! moment around the increasingly critical cloud data platform. I also hope that the examples we'll be sharing about combining ALTR insights with other datasets to catch compromised accounts and insider threats will get attendees fired up about doing security analytics themselves.

End of Q&A.

Between Omer (Snowflake) and Lou (Q2), our webinar was illuminating and thought-provoking for everyone involved. Click the image below to watch the webinar on demand.

Data and security professionals alike are feeling the pressure to make data accessible yet secure. The rapid adoption of cloud data platforms like Snowflake enables organizations to make faster business decisions because of how easy it has become to analyze and share large quantities of data.

But how has this impacted organizations' overall risk exposure and data security strategies?

During our latest webinar, “A Security-First Approach to Re-Platforming Cloud Data”, we explored that question and discussed the best practices for mitigating that risk. I was joined by Omer Singer, Snowflake’s Head of Cyber Security Strategy, as well as Lou Senko, Chief Availability Officer at Q2.

First, let’s level set on what we mean by “Security-First.” Typically, security-related projects are brought in at the eleventh hour with a corresponding eleventh hour budget. This makes the task of adding data security into a product or application stressful and cumbersome. A security-first approach means that security is part of the discussion from day 1, built into the strategy, not an add-on.

That’s why I am so excited that Lou Senko joined us for this discussion. Lou understands the importance of security-first and he’s experienced the challenges and benefits firsthand. Lou and his team are responsible for the availability, performance, and quality of the services that Q2 delivers, including security and regulatory compliance.

In preparation for the webinar, I had the privilege of getting some time with Lou for a quick Q&A session that gives a sneak peek into what you can expect to learn about during the live event.

1. Why did Q2 choose Snowflake and what benefits were you looking for/problem were you looking to solve?

Q2 went through the ‘digitization’ of our back office internal IT back in 2013-2014, resulting in us moving all of our in-house application portfolio over to best-of-breed SaaS solutions. This provided Q2 an opportunity to re-imagine our internal IT staffing – shifting away from looking after machines to more Business Analysts, Reporting Analysts and experts in these SaaS applications – working with the business to drive more value from the insights these applications offer. At first, we were building our own data warehouse, but ended up taking a step backwards from that.

With Snowflake we get all of these new capabilities without adding complexity from an infrastructure perspective. We had run into the typical issues with large data – finding ways to keep it performant, serving both ETL and OLTP usage models, and it was a big lift to manage and secure it. Snowflake’s simplicity has allowed us to focus resources on additional projects and accelerate our ability to innovate.

2. For others in the same position, what are the biggest risks to look out for when re-platforming your data in the cloud?

Security. We pulled all the data out of these highly secure, highly trusted SaaS applications and then plunked it all into a single database. Before, a bad actor would need to figure out how to hack Netsuite, Salesforce, Workday, etc. But now they just have to focus on hacking this one database and they take it all. So, it makes the data warehouse a very rich target.

3. What do you hope the audience takes away from this webinar?

First, I hope they can learn from our experiences, so they don’t have to waste a lot of time for no reason. I want them to better understand the risks too. The business needs the insights from the data – so you must deliver – but pulling it out of your vendor's application just removed all that security.

Overall, I’m excited to show them how combining Snowflake with ALTR can enable them to optimize their benefits and minimize their risk.

End of Q&A

As you can see, you are in for an amazing discussion if you check out the on-demand webinar. You will learn best practices from Lou around scaling and making data available to all consumers who need access without putting burdens on your operations teams.

Alongside Lou, we were also joined by Omer Singer of Snowflake who shared how modern businesses are enhancing threat detection capabilities with best of breed tools like Snowflake to cut cost and time related to security incident and event management. Check out the Q&A we had with Omer here.

In preparation for our upcoming webinar, Simplifying Data Governance Through Automation, I chatted with OneTrust’s Sam Gillespie to get a preview of his thoughts and insights. Sam is an Offering Manager at OneTrust with years of experience supporting and guiding clients through implementation.

As more companies than ever are using data to make better business decisions, they’re also contending with growing privacy, governance, and risk obligations. OneTrust unifies data governance under one platform, streamlining business processes and ensuring privacy and security practices are built in. The integration between OneTrust and ALTR further simplifies this by automating the enforcement of governance policy.

Let’s hear what Sam thinks about how data use and data regulations are creating challenges for companies:

1. Can you talk a little bit about how increasing privacy regulations are making it more challenging than ever to utilize data? How is this affecting teams from privacy and legal to data and security?

I think we all expected there to be a GDPR “domino effect.” However, the scale and pace at which we’re seeing new privacy laws are taking many by surprise. In practically every corner of the globe, new privacy laws or proposed ones have come into place which require organizations to better understand, process and protect personal data. Although many of them have shared principles and foundations, no law is the same. Even just within the U.S. each law is different in its scope, requirements, and definitions of personal data. Yet data is becoming even more key to the growth and future of most organizations, so privacy and security teams must be able to meet this ever-growing complex regulatory landscape without hindering business use of data where it can be avoided. This is a huge and complex task and is a core reason why most organizations, big and small, are turning to technology to help with this.

2. What is the problem with enforcing governance policy today?

Most companies I speak to are too far toward one end of the scale or the other. Some lock down their data to better protect it but then force their people to go through an often complex process to get access to it, if their teams are even aware the data exists, which slows down day-to-day operations and business use of the data. Others, at the other end, have pretty open access to all data all the time, risking violations of policies and, in extreme cases, the law. Therefore, governing your data is a tricky balancing act of business enablement and compliance. It can be done though, and the benefits are felt throughout the organization. But you need to ensure you have the right tools and processes in place to facilitate this.

3. Are there ways to make enforcement of data governance policy more effective?

This is where technology is going to play a vital role. The sheer amount of data that most organizations have, coupled with the ever-increasing ways in which it can be used, means that governing its use manually is impractical. However, technology is not going to solve all your problems. You also need to make sure that the right tool is embedded within your current processes and operations for it to be used effectively. Also, it has to be scalable and easy to implement—it’s only going to effectively do its job if it works and is used!

4. What do you hope the audience takes away from this webinar?

I hope the audience takes away that help is out there! Tools like ALTR and OneTrust can really help organizations with their privacy and security obligations in a way that will actually work! Great technology that complements each other and ultimately helps customers solve a business challenge: what’s not to love?!

Thanks to Sam for sharing his thoughts. In the webinar, you can hear more and see how the OneTrust + ALTR integration makes automated data governance easy. Watch on demand now.

A few years ago, a handful of “tokens” used to be as good as gold at the local arcade. It meant a chance to master Skee-Ball or prove yourself a pinball wizard by getting your initials on the leaderboard. But what's “tokenization of data”? It's kind of the same thing, except instead of exchanging money for tokens, you exchange sensitive data. It's a data security alternative to encryption or anonymization. By substituting a “token” with no intrinsic value in place of sensitive data, such as social security numbers or birth dates that do have value, usually quite a lot of it, companies can keep the original data safe in a secure vault while moving tokenized data throughout the business.

And today, one of the places just about every business is moving data is to the cloud. Companies may be using cloud storage to replace legacy onsite hardware or to consolidate data from across the business to enable BI and analysis without affecting the performance of operational systems. To get the most out of this analysis, companies often need to include sensitive data.

Tokenization of data is ideal for sensitive data security in the cloud data warehouse environment for at least 3 reasons:

While many of us might think encryption is one of the strongest ways to protect stored data, it has a few weaknesses, including this big one: the encrypted information is simply a version of the original plain text data, scrambled by math. If a hacker gets their hands on a set of encrypted data and the key, they essentially have the source data. That means a breach of sensitive PII can still require reporting under state data privacy laws even when the data was encrypted. Tokenization, on the other hand, replaces the plain text data with a completely unrelated “token” that has no value if breached. Unlike encryption, there is no mathematical formula or “key” to unlocking the data – the real data remains secure in a token vault.
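To make the vault idea concrete, here is a minimal sketch in Python. It is an illustration only, not ALTR's implementation: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionaries are all assumptions made for the example, and a real vault would be a hardened, access-controlled service. The point it shows is that the token is random, so the only way back to the original value is a lookup in the vault.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical; for illustration only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random: it is not computed from the value in any way.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # extremely unlikely collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Recovery is a vault lookup; there is no key or formula to apply.
        return self._token_to_value[token]


vault = TokenVault()
ssn_token = vault.tokenize("123-45-6789")
print(ssn_token)                    # a random string starting with "tok_"
print(vault.detokenize(ssn_token))  # the original value, from the vault only
```

Someone who steals only the tokenized column gets random strings; without access to the vault itself there is nothing to decrypt.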

When one of the main goals of moving data to the cloud is to make it available for analytics, tokenizing the data delivers a distinct advantage: actions such as counts of new users, lookups of users in specific locations, and joins of data for the same user from multiple systems can be done on the secure, tokenized data. Analysts can gain insight and find high-level trends without requiring access to the plain text sensitive data. Standard encrypted data, on the other hand, must be decrypted before it can be operated on, and once it is decrypted there’s no guarantee the copy will be deleted rather than forgotten, unsecured, in the user’s download folder. As companies seek to comply with data privacy regulations, demonstrating to auditors that access to raw PII is as limited as possible is also a huge bonus. Tokenization allows you to feed tokenized data directly from Snowflake into whatever application needs it, without requiring data to be decrypted and potentially inadvertently exposed to privileged users.
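As a rough sketch of why counts and joins still work, the Python example below tokenizes an email column in two made-up source systems and then analyzes only the tokenized rows. The `tokenize` helper, the `crm` and `orders` records, and the field names are all hypothetical, and the example assumes the same value always receives the same token, which is what makes the join possible.

```python
import secrets
from collections import Counter

_vault = {}  # value -> token; stands in for a shared token vault (assumption)


def tokenize(value: str) -> str:
    # Same input always yields the same random token.
    if value not in _vault:
        _vault[value] = "tok_" + secrets.token_hex(8)
    return _vault[value]


# Two hypothetical source systems, tokenized before loading into the warehouse.
crm = [
    {"email": tokenize("ann@example.com"), "city": "Austin"},
    {"email": tokenize("bob@example.com"), "city": "Denver"},
    {"email": tokenize("cy@example.com"), "city": "Austin"},
]
orders = [
    {"email": tokenize("ann@example.com"), "total": 40},
    {"email": tokenize("ann@example.com"), "total": 15},
    {"email": tokenize("cy@example.com"), "total": 25},
]

# Count users per location without ever seeing an email address.
users_per_city = Counter(row["city"] for row in crm)

# Join the two systems on the tokenized email and total the spend per city.
city_by_token = {row["email"]: row["city"] for row in crm}
spend_per_city = Counter()
for order in orders:
    spend_per_city[city_by_token[order["email"]]] += order["total"]

print(users_per_city["Austin"], users_per_city["Denver"])  # 2 1
print(spend_per_city["Austin"])                            # 80
```

The analyst works with tokens throughout; only a system with vault access could map a token back to a real address.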

Anonymized data is a security alternative that removes the personally identifiable information by grouping data into ranges. It can keep sensitive data safe while still allowing for high-level analysis. For example, you may group customers by age range or general location, removing the specific birth date or address. Analysts can derive some insights from this, but if they wish to change the cut or focus in, for example looking at users aged 20 to 25 versus 20 to 30, there’s no ability to do so. Anonymized data is limited by the original parameters which might not provide enough granularity or flexibility. And once the data has been analyzed, if a user wants to send a marketing offer to the group of customers, they can’t, because there’s no relationship to the original, individual PII.
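The loss of granularity is easy to see in a small Python sketch. The `age_bucket` helper, the ten-year bucket width, and the sample ages are assumptions chosen for illustration. Two quite different sets of customers produce identical anonymized output, so a question like "how many are under 25?" can no longer be answered.

```python
from collections import Counter


def age_bucket(age: int, width: int = 10) -> str:
    # Replace an exact age with the range it falls into, e.g. 24 -> "20-29".
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


ages = [21, 24, 27, 29, 33]
anonymized = Counter(age_bucket(a) for a in ages)
print(dict(anonymized))  # {'20-29': 4, '30-39': 1}

# A different population collapses to exactly the same anonymized result,
# so the original detail cannot be recovered or re-cut after the fact.
other_ages = [20, 21, 22, 23, 35]
print(Counter(age_bucket(a) for a in other_ages) == anonymized)  # True
```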

Tokenization of data essentially provides the best of both worlds: the strong at-rest protection of encryption and the analysis opportunity provided by anonymization. It delivers tough security for sensitive data while allowing flexibility to utilize the data down to the individual. Tokenization allows companies to unlock the value of sensitive data in the cloud.