Format-Preserving Encryption: A Deep Dive into FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges associated with using padding in FPE.

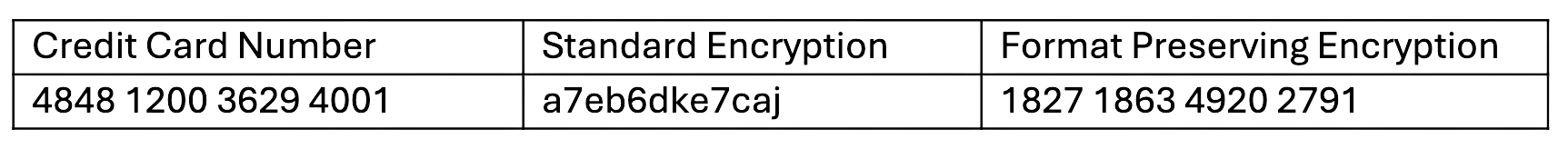

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption method that follows the guidelines established by the National Institute of Standards and Technology (NIST). It is specified in NIST Special Publication 800-38G (in the draft Revision 1, which updated the original FF3) and is built on a Feistel network, a construction widely used in cryptographic applications. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves and processes them through multiple rounds of encryption, using a subkey derived from the main key in each round.

2. Rounds: FF3-1 uses 8 rounds of encryption. Each round applies a round function to one half of the data and combines the result with the other half using modular addition (modulo the radix raised to the length of that half) rather than XOR, which keeps every intermediate value inside the allowed character set. This process is repeated, alternating between the halves.

3. Key Scheduling: FF3-1 does not derive a separate Feistel subkey for each round. Every round invokes AES under the same main key (AES applies its own internal key schedule), and the round number and part of the tweak are mixed into the AES input so that each round behaves differently.

4. Tweakable Block Cipher: FF3-1 is a tweakable cipher: a tweak (an additional, non-secret input parameter, 56 bits long in FF3-1) is used along with the key. Encrypting the same plaintext under the same key with a different tweak produces a different ciphertext, which limits the dictionary-style attacks that small input domains otherwise invite.

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.

Encrypting a single value proceeds in four steps:

1. Initialization: The plaintext is divided into two halves, and an initial tweak is applied. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.

2. Round Function: In each round, the round function takes one half of the data, encoded as a number, together with the round number and half of the tweak. FF3-1 encrypts this block with AES under the main key to produce a pseudorandom output.

3. Combining Halves: The output of the round function is added to the other half of the data using modular addition, so the result remains a valid string in the same alphabet. The halves are then swapped, and the process repeats for the specified number of rounds.

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
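To make the steps above concrete, here is a minimal Python sketch of a format-preserving Feistel cipher over decimal strings. It is a toy for illustration and not FF3-1 itself: HMAC-SHA256 stands in for the AES-based round function, the tweak handling is simplified, and the names `toy_fpe_encrypt` and `toy_fpe_decrypt` are hypothetical. What it shares with the description above is the structure: two halves, eight rounds, modular addition, and a swap after each round.

```python
import hashlib
import hmac

RADIX = 10
ROUNDS = 8  # same round count as FF3-1; everything else here is simplified


def _check(digits: str) -> None:
    if len(digits) < 2 or any(ch not in "0123456789" for ch in digits):
        raise ValueError("expected a string of at least two decimal digits")


def _round_value(key: bytes, tweak: bytes, round_no: int, half: str, modulus: int) -> int:
    """Toy round function. ASSUMPTION: HMAC-SHA256 replaces the AES call FF3-1 uses."""
    message = tweak + bytes([round_no]) + half.encode("ascii")
    digest = hmac.new(key, message, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def toy_fpe_encrypt(key: bytes, tweak: bytes, digits: str) -> str:
    _check(digits)
    u = (len(digits) + 1) // 2              # left half takes the extra digit
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = len(a)
        modulus = RADIX ** m
        y = _round_value(key, tweak, i, b, modulus)
        c = (int(a) + y) % modulus          # modular addition keeps m decimal digits
        a, b = b, str(c).zfill(m)           # swap the halves for the next round
    return a + b


def toy_fpe_decrypt(key: bytes, tweak: bytes, digits: str) -> str:
    _check(digits)
    u = (len(digits) + 1) // 2
    a, b = digits[:u], digits[u:]
    for i in reversed(range(ROUNDS)):
        m = len(b)
        modulus = RADIX ** m
        y = _round_value(key, tweak, i, a, modulus)
        previous_a = (int(b) - y) % modulus  # undo the modular addition
        a, b = str(previous_a).zfill(m), a   # undo the swap
    return a + b


ciphertext = toy_fpe_encrypt(b"demo-key", b"row-42", "4111111111111111")
recovered = toy_fpe_decrypt(b"demo-key", b"row-42", ciphertext)
```

Because each round only ever adds a number modulo a power of ten, the output is always a digit string of the same length as the input, and running the rounds in reverse recovers the plaintext. This sketch should not be used to protect real data.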

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Improved Performance: FPE algorithms like FF3-1 are designed to be efficient, ensuring minimal impact on system performance. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower trust environments: Using a product like ALTR’s FPE, data engineers can use the cipher-text of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

Padding is a technique used in encryption to ensure that the plaintext data meets the required minimum length for the encryption algorithm. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE:

Advantages of padding:

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.

Challenges of padding:

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
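As a rough illustration of where the minimum length comes from, the sketch below assumes the rule in NIST's draft revision of SP 800-38G that the input domain must contain at least one million values (radix raised to the minimum length must be at least 1,000,000). The helper names `min_length`, `pad_left`, and `unpad_left` are hypothetical, and left-padding with a fixed character is only one possible scheme, not necessarily the one ALTR uses.

```python
from typing import Tuple

DOMAIN_FLOOR = 1_000_000  # ASSUMPTION: minimum domain size from the draft SP 800-38G Rev. 1


def min_length(radix: int) -> int:
    """Smallest n such that radix**n >= DOMAIN_FLOOR."""
    if radix < 2:
        raise ValueError("radix must be at least 2")
    n, size = 1, radix
    while size < DOMAIN_FLOOR:
        n += 1
        size *= radix
    return n


def pad_left(value: str, radix: int, pad_char: str = "0") -> Tuple[str, int]:
    """Left-pad value up to the minimum length; return the padded value and the pad count."""
    needed = max(0, min_length(radix) - len(value))
    return pad_char * needed + value, needed


def unpad_left(padded: str, pad_count: int) -> str:
    """Strip the padding after decryption. The pad count must be tracked separately."""
    return padded[pad_count:]


padded, count = pad_left("1234", radix=10)   # a 4-digit value is too short for radix 10
original = unpad_left(padded, count)
```

The sketch also shows where the challenges listed above come from: the pad count has to be stored or derived somewhere so that decryption can strip it, and the ciphertext of a padded value is longer than the original input.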

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

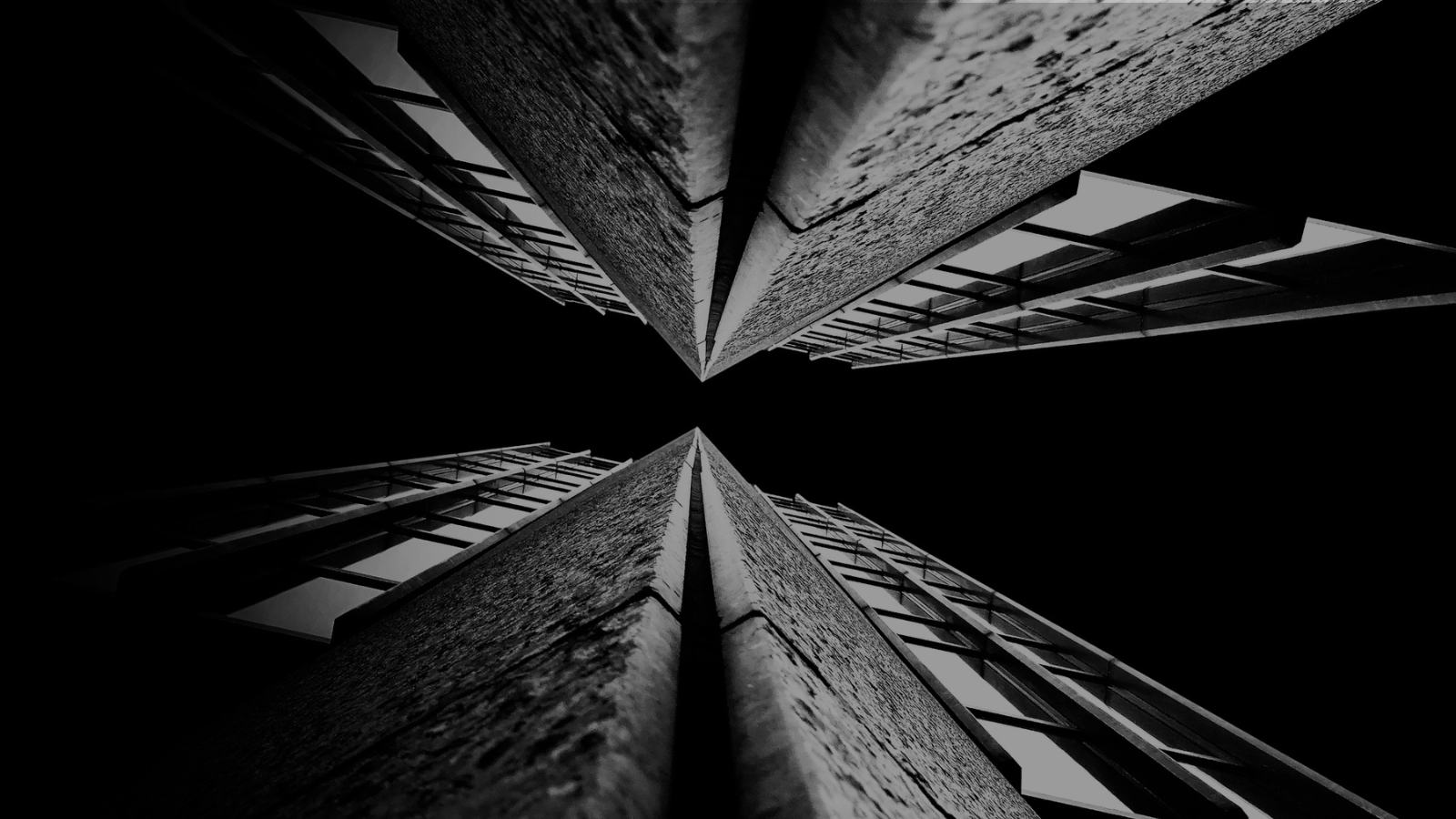

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still nervous wrecks. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI is forcing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when the compute and storage are tightly coupled. Securely sharing data with trusted parties outside the walls of the data center is clunky at best and downright dangerous in most cases. And forget running your own GenAI models in your datacenter unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

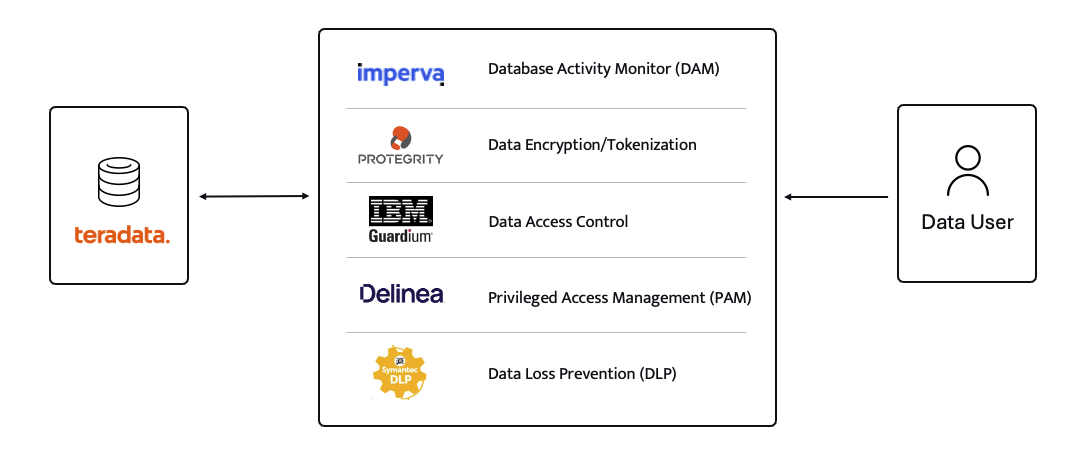

ALTR’s platform has been purpose-built to recreate and enhance the protections required when moving from Teradata to Snowflake. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR to help them with data security on Snowflake. Cool! A member of their Data & Analytics team tried our product, and it was love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

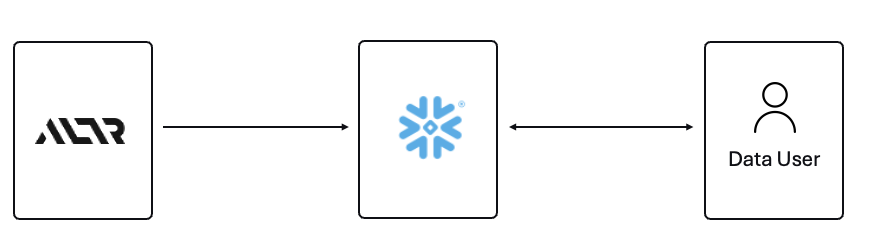

The CISO’s point of view was that ALTR’s security solution could be completely disabled or removed, and would not be helpful in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO: all of those things are possible. Here is what I wanted to say to the CISO if they had given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet powerful and long-reaching implications of its use:

One of the main points I would have liked to make to the CISO is that, as a user of Snowflake, the responsibility to secure that ACCOUNTADMIN role rests squarely with them. By now I’m sure you have all seen the news and responses to the Snowflake compromised accounts that happened earlier this year. It has been established that customer accounts left unsecured were what enabled the data theft. There have been dozens of articles and recommendations on how to secure your accounts with Snowflake and even a mandate of minimum authentication standards going forward for Snowflake accounts. You can read more about securing the ACCOUNTADMIN role in Snowflake here.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. This is the purpose of ALTR’s Data Security Platform, to help you get a handle on part of the problem which is so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly. You must keep up.

2. You must be able to prove to the business that you have a least-privilege access mechanism, so that data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges. Terabytes of data that grow daily, more users with more applications, and trusted third parties who want to collaborate with your data all lead to an unmanageable set of internal processes that slow down the business and introduce risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt they had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team’s use of ALTR?” I don’t know the answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure, and I am by no means saying that ALTR’s platform can protect your Snowflake data 100%. Data security is all about reducing risk. Control the things you can, monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015 the migration of corporate data to the cloud has rapidly accelerated. At the time, an estimated 30% of corporate data was in the cloud; by 2022 that share had doubled to 60%, in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data has moved to the cloud, new challenges have presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. This brought on different cloud computing-related cost structures and new skillsets with new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate with the move to the cloud. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to cloud data warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation and what feels like every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need for it to be cataloged, analyzed, and used with the promise of making our personal lives better for a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation would then begin, and world governments responded with regulations such as GDPR, CCPA and now most recently the European Union’s AI Act. The risk and fines have been in the billions. This is a story we already know well. Thus, Data Security and Privacy have become a critical function, primarily for the obvious use case of compliance and regulation. Yet only 11% of organizations have encrypted over 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enables real-time supply chain visibility and dynamic changes to pricing strategies, and lets organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real Time Analytics – Because FPE is an encryption method that returns data in the original format, the data keeps the same length and structure and so remains useful. More data engineers, scientists and analysts can work with the data without being exposed to sensitive information.

Data Sharing – FPE enables the sharing of sensitive information, both personal and confidential, supporting secure collaboration and innovation alike.

Proactive Data Security – FPE allows for the anonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding to ransom a company that takes a more proactive approach using FPE and other Data Security Platform features in combination.

Empowered Data Engineering – With FPE, data engineers can still build, test and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failure. Data validations and data quality checks for formats, lengths and more can be written and tested without exposing sensitive information. Federated, aggregation and range queries can still execute without the need for decryption. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with proper rights to see the original values of data.

Cost Management – While FPE does not come close to solving Cost Management in its entirety, it can definitely contribute. We are seeing a need for FPE as an option instead of replicating data in the cloud to development, test, and production support environments. With data transfer, storage and compute costs, moving data across regions and environments can be really expensive. With FPE, data can be encrypted and decrypted with compute that is a less expensive option than organizations' current antiquated data replication jobs. This makes FPE a viable cost-savings option for producing production-ready data in non-production environments. Look for a future blog on this topic and all the benefits that come along.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well documented challenges in the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role and attribute-based access controls and data activity monitoring will be needed to have a proactive approach towards security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for Data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations, as there have been many high-profile data breaches recently. There are several ways to secure the data, including restricting access, masking, encryption, and tokenization. These can pose some challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

As you can see, with a standard encryption type the result is a completely different output. This may make it incompatible with systems that require or expect a 16-digit numerical format. Using FPE, the encrypted data still looks like a valid 16-digit number. This is extremely useful where data must stay in a specific format for compatibility, compliance, or usability reasons.
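A small Python sketch can show why the format matters to downstream systems. Everything here is illustrative: `fits_card_column` is a hypothetical validator of the kind a legacy system might apply, and the 16 random bytes are only a stand-in for one block of conventional ciphertext, not the output of a real cipher.

```python
import base64
import os
import re

SIXTEEN_DIGITS = re.compile(r"[0-9]{16}")


def fits_card_column(value: str) -> bool:
    """Hypothetical downstream check: exactly sixteen decimal digits."""
    return SIXTEEN_DIGITS.fullmatch(value) is not None


# Stand-in for one 16-byte block of conventional ciphertext (random bytes, not real encryption).
block = os.urandom(16)
as_base64 = base64.b64encode(block).decode("ascii")  # 24 characters, ends in "=="
as_hex = block.hex()                                 # 32 hexadecimal characters

checks = {
    "base64 ciphertext": fits_card_column(as_base64),
    "hex ciphertext": fits_card_column(as_hex),
    "16-digit value": fits_card_column("4111111111111111"),
}
```

Whatever the random bytes are, the common text encodings of a 16-byte block are 24 or 32 characters long, so they can never pass a sixteen-digit check. A format-preserving ciphertext is by construction a sixteen-digit string and passes.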

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next the NIST algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then selectively be decrypted using ALTR’s access policies.

FPE offers several benefits for organizations that deal with structured data:

1. Adds extra layer of protection: Even if a system or database is breached the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations, such as PCI-DSS, HIPAA, and GDPR, require safeguards, such as encryption, for sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

Getting your sensitive data under control doesn’t have to be complicated, time-consuming or costly. In fact, there’s a lot you can do with ALTR’s free plan to know, control, and protect sensitive data quickly so you can move on to more value-adding activities. ALTR lets you see who’s using what data, when, and how much. Within minutes, you can quickly classify data, apply controls, and generate alerts, even block access. Don’t believe me? Let’s review five things you can do in an hour with ALTR.

Before you can govern private data, you need to know which data is sensitive.

First, let’s assume you’ve already set up your ALTR SaaS platform account and have logged in. To classify your data, all you need to do next is connect one of your databases. As you're connecting the database, simply check the option to classify this data. ALTR then categorizes the data and presents a tab for Classification, which is where you can find the data grouped under common data tags.

If you did not classify a database when it was first connected, you can go back later to classify it. Just click the name of the database from within the ALTR screen, select the classify data checkbox, and update your database. In a few minutes, ALTR presents the classification report.

The report shows how data is classified as sensitive. ALTR categorizes the private data into types, such as social security numbers, email addresses, and names. You can use this information to then add controls to any column of data, such as locking or blocking people from access.

You can also allow access, but see every attempt to access the data, known as a query.

The second way to protect your sensitive data is to use the Query Log function, which lets you know immediately who is trying to do what with your sensitive data. ALTR lists every single query that users executed on your sensitive data: the log includes the exact query and who created it. All of this information is collected in one place, allowing you to filter the queries so you can see immediately what's happening across your company. After the first 24 hours, ALTR presents a heat map that provides a visualization of the activity on your sensitive data. The heat map is updated once a day.

When you’re putting a lock on a particular column of data, you can also add a masking policy. With masking, the goal is to give users only the minimal amount of information they need from the data, nothing more, to provide the most protection possible. In real terms, not everyone needs the same level of access to the same data.

For example, a marketing specialist might need a full email address whereas an analyst only wants to know how many people have a specific service like Gmail, so they just need to see the @domain. They don’t need the entire, fully qualified, email address.

Another common masking technique is to show only the last four digits of a social security number. A call center employee can use those digits to verify that you are who you say you are without needing access to the whole number. Masking is a simple yet highly effective way to enable functionality without fear of inadvertently showing the digital crown jewels.
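The two masking examples above can be sketched in a few lines of Python. This is only an illustration of the idea, not ALTR's masking policy engine: the function names and the role names (`marketing`, `analyst`) are hypothetical.

```python
def mask_email(email: str) -> str:
    """Hide the local part and keep only the @domain."""
    local, sep, domain = email.rpartition("@")
    if not sep or not local or not domain:
        return "*****"                      # not a recognizable address: hide everything
    return "*****@" + domain


def mask_ssn(ssn: str) -> str:
    """Show only the last four digits of a US social security number."""
    digits = [ch for ch in ssn if ch in "0123456789"]
    if len(digits) != 9:
        return "*" * len(ssn)               # unexpected shape: hide everything
    return "***-**-" + "".join(digits[-4:])


def email_for_role(email: str, role: str) -> str:
    """Return the view of an email address that a given (hypothetical) role may see."""
    if role == "marketing":
        return email                        # needs the full address
    if role == "analyst":
        return mask_email(email)            # only needs the domain
    return "*****"                          # everyone else sees nothing


analyst_view = email_for_role("jane.doe@gmail.com", "analyst")
call_center_view = mask_ssn("123-45-6789")
```

The same column serves both roles; only the view changes, which is what lets each user get the minimum they need.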

ALTR allows you to add thresholds that prevent or allow access to datasets.

To prevent access, you can set the threshold for a Block action when a rule is met. The threshold rule for blocking could be based on access rate, when someone tries to access the data a certain number of times; a time window, like the weekend; or from a range of IP addresses. You can include other parameters such as user groups that the threshold rule applies to.

Lastly, to protect your sensitive data, you can also set a threshold to Generate Anomaly, which instead of blocking access, grants access, but also sends an alert that lets you know who is accessing the sensitive data. Similar to blocks, you can establish anomaly thresholds based on access rate, time window, and IP address. For example, you may grant access while sending alerts at a certain time, such as during the weekdays when an administrator is on duty, and block access completely during the weekend or at night. ALTR sends alerts whenever someone tries to access the data.
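To illustrate how block and anomaly thresholds might combine, here is a minimal Python sketch. ALTR configures these rules without code, so this is not its API: the `Threshold` and `Access` classes, their field names, and the rule that every configured condition must hold are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime
from ipaddress import ip_address, ip_network
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class Access:
    user_group: str
    source_ip: str
    when: datetime
    queries_in_last_hour: int


@dataclass(frozen=True)
class Threshold:
    action: str                                  # "block" or "alert"
    groups: FrozenSet[str]                       # user groups the rule applies to
    max_queries_per_hour: Optional[int] = None   # access-rate condition
    weekend_only: bool = False                   # time-window condition
    ip_range: Optional[str] = None               # IP-range condition, CIDR notation

    def matches(self, access: Access) -> bool:
        """A rule fires only when every condition it configures is met."""
        if access.user_group not in self.groups:
            return False
        if (self.max_queries_per_hour is not None
                and access.queries_in_last_hour <= self.max_queries_per_hour):
            return False
        if self.weekend_only and access.when.weekday() < 5:
            return False
        if (self.ip_range is not None
                and ip_address(access.source_ip) not in ip_network(self.ip_range)):
            return False
        return True


def decide(access: Access, thresholds: Iterable[Threshold]) -> str:
    """Block wins over alert; with no matching rule the access is simply allowed."""
    actions = {t.action for t in thresholds if t.matches(access)}
    if "block" in actions:
        return "block"
    if "alert" in actions:
        return "alert"
    return "allow"


rules = [
    Threshold(action="block", groups=frozenset({"analysts"}), weekend_only=True),
    Threshold(action="alert", groups=frozenset({"analysts"}), max_queries_per_hour=100),
]
busy_weekday = Access("analysts", "10.1.2.3", datetime(2024, 6, 5, 14, 0), 150)
quiet_saturday = Access("analysts", "10.1.2.3", datetime(2024, 6, 8, 14, 0), 1)
```

With these two rules, a burst of queries on a weekday still goes through but raises an alert, while any access on the weekend is blocked outright.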

Regardless of the policy you choose, ALTR allows you to set controls in minutes without code. You can classify sensitive data, block access, or generate alerts as soon as you connect ALTR to your data. Just pick the dataset that you want to protect and apply the rules. You can do a lot within that first hour. And it just gets better from there.

Most of today’s workforce is accustomed to working in an office under carefully crafted IT systems. However, the abrupt shift to remote work due to the pandemic has caused a lot of new risks and exposures. Teams are now decentralized, and security is top of mind, so organizations are relying on things like multifactor authentication, remote access, and encryption – but is that really enough?

Since COVID-19, the FBI has seen a 300% increase in reported cyber crimes, which is only accelerating the urgency to adapt. There is no silver bullet for success in this unpredictable environment, but there are certainly new best practices and lessons to be learned.

At ALTR, we are helping our customers protect valuable data, and we’ve seen first-hand the struggles they are facing. Here are a few questions we are answering for them:

ALTR’s cloud-native service embeds observability, governance, and protection at the code level to close those gaps and improve security, simplify compliance, and increase accountability. This unique approach to data access controls fosters more rapid development and deployment of secure applications, and it enables greater innovation across the entire enterprise.

It’s more important now than ever before to share knowledge and work together to adapt in these uncertain times – that’s why ALTR is participating in IDG’s “New Reality Virtual Tradeshow Series.” It has been a great platform to discuss the new risk and security landscape with peers and other industry experts. The intention of the conference is to have all attendees walk away knowing:

Our own VP of Product & Marketing, Doug Wick, spoke about “Remote Access and the Rising Tide of Sensitive Data.” This presentation and many more are still available OnDemand during the final 2 days of the event (July 28-29). Check out the virtual conference here.

To find out more about how ALTR protects sensitive data across the enterprise, stop by our virtual booth, or read more here.

How embarrassing: one second you’re trying to provide a third-party vendor with the information they need to perform a very specific task, and the next thing you know you’ve accidentally dangled all of your private data right in front of their eyes. Best case scenario, the vendor is kind enough to turn and look the other way while you put your unseeables back where they belong. Worst case scenario, the vendor exploits your unintended exposure by selling your vulnerability to the highest bidder.

It’s an all too common tale of the 21st century, and something every business should consider since every organization has sensitive data and countless users can access that data. Here’s why it’s so dang hard to keep data protected these days.

The operations landscape nowadays is far more complex than that of previous eras. Businesses today rely on their relationships with contractors, vendors, and partners to ensure every facet of their organization is optimized, and many of those relationships are now location agnostic. Thanks to the internet, the entire world has become one big talent pool, but with cloud allowing you to be anywhere, the risk to your data has multiplied.

It’s not that everyone these days is dishonest, it’s that even your most trusted business partners are capable of making an honest mistake. Without proper tools to secure data, even trustworthy vendors may see more than they should. Take, for instance, the risk posed by third-party application developers. Oftentimes, in an effort to use realistic datasets to build and maintain applications, developers end up accessing production data. This puts the development partner and the business at an increased risk of a regulatory or compliance breach, not to mention detrimental reputation loss. Improper data exposure with partners is common, and everyone from HVAC vendors (in the case of the Target breach) to medical transport providers is seeing more than they should.

The most common method for protecting private data is controlling access at the application level. This is definitely important to keep the bad guys out, but what about the data itself? Are you also managing what data and how much these users can consume? What happens if the user’s password is guessed or stolen by a cybercriminal? All your sensitive data is now exposed to a malicious third party with credentialed access to as much data as they like.

These risks present themselves in the first place because current solutions fail to focus on what it is that needs protection: data. In essence, these controls are about users, not about protecting the data itself. Newer methods use dynamic data masking and thresholds so that credentialed users can only see the minimum amount of data they need to perform their jobs and can only access a certain amount of data in a chosen time frame.
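To make the masking half of that idea concrete, here is a minimal Python sketch. The role names, field names, and the `mask_value` and `apply_masking` helpers are all hypothetical, chosen purely for illustration; this is not ALTR's implementation. It hides all but the last few characters of any field a role is not cleared to see, while leaving separators in place so the value keeps its shape.

```python
# Hypothetical policy: which fields each role may see in the clear.
CLEAR_FIELDS = {
    "billing_admin": {"name", "email", "card_number"},
    "vendor_developer": {"name"},
}


def mask_value(value: str, keep_last: int = 4) -> str:
    """Replace letters and digits with '*' except the last `keep_last`,
    leaving separators untouched so the value keeps its format."""
    total = sum(ch.isalnum() for ch in value)
    to_hide = max(total - keep_last, 0)
    out = []
    for ch in value:
        if ch.isalnum() and to_hide > 0:
            out.append("*")
            to_hide -= 1
        else:
            out.append(ch)
    return "".join(out)


def apply_masking(record: dict, role: str) -> dict:
    """Return a copy of `record` with every field the role is not cleared for masked."""
    clear = CLEAR_FIELDS.get(role, set())
    return {
        field: value if field in clear else mask_value(str(value))
        for field, value in record.items()
    }


record = {
    "name": "Ada Lovelace",
    "email": "ada@example.com",
    "card_number": "4111-1111-1111-1111",
}
masked = apply_masking(record, "vendor_developer")
# masked["card_number"] is "****-****-****-1111"; masked["name"] is unchanged.
```

A role that is missing from the policy gets every field masked, so the sketch fails closed rather than open.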

That’s how ALTR’s Data Security as a Service delivers the privacy your data deserves. With ALTR, organizations gain a clearer understanding of the relationships between users and the actual data they are accessing. ALTR also provides format-preserving dynamic masking of data to ensure sensitive data is hidden from unauthorized groups. Lastly, it provides real-time breach mitigation by imposing thresholds on how much data can be accessed based on normal usage patterns. By understanding who is accessing what data, and how much, businesses are better able to secure private data before it is exposed without having to re-engineer applications.

To learn more about how ALTR protects your business, download our complimentary white paper, How to Address the Top 5 Human Threats to Your Data.

In 2018, California passed the California Consumer Privacy Act (CCPA), which grants California residents the right to knowledge concerning the data harvested from them by corporations and control over its dissemination. The CCPA includes six key principles with respect to data protection for California residents, who have the rights to:

In other words, if you live in California, you’ve got a right to know what corporations know about you – and the ability to stop them from sharing it with other companies. It doesn’t apply to every company, only to businesses over a certain revenue threshold that make significant profits off of consumer data. But that describes a lot of companies out there, and it probably includes your bank, in part because the CCPA applies to any company that uses the data of California residents whether or not the company itself is located in California.

If you’re steering the company ship, what can you do to comply with the CCPA and protect your reputation? To start, since customers have the right to know what data a company holds and whether it’s sold or transferred to another entity, internal record keeping is more vital than ever. If you maintain accurate records that trace the movement of any given customer’s data in order to be able to provide it back to the customer on request, you’re in good shape.

It also pays to install protocols both for protecting and destroying data, as customers are allowed to refuse the sale of their data or demand it be deleted. Let’s say a customer calls and requests their data be purged. You remove it from your company’s internal system, but then what? To satisfy the customer and remain in compliance with the CCPA, you’ll need to audit vendors or other entities you regularly work with to ensure you’re all securely on the same page. Controlling the data that you share externally in the first place by using a program like ALTR can help. Instead of giving every vendor unchecked access to the entire pool of customer data, ALTR dynamically masks chosen fields and only gives each vendor access to exactly what they need to complete their work. Along with controlling what they see, you can also control how much by imposing thresholds that will block access once limits are exceeded, preventing a breach in real time. Curbing the flow of data this way makes it easier to fulfill those customer requests.

When it comes to customer calls, the CCPA gives companies 45 days to respond to consumer data requests. Creating a team specifically trained to respond to data requests within this timeframe will put your company ahead of the curve. Training a few key employees to efficiently and easily respond to requests will almost certainly be easier than scrambling to comply only after requests have started to pile up. ALTR’s Data Access Monitoring as a Service can help the team to identify who accessed what data, when they accessed it, and how much was viewed, and give that information directly back to the customer in real time. With all data requests and responses logged immutably, you also have an audit trail that makes compliance easy.
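For readers wondering what an audit trail that resists tampering can look like, one common technique is to chain log entries together with hashes so that any later edit is detectable. The sketch below is an assumption for illustration only: the post does not describe how ALTR stores its logs, and the `AuditLog` class and its field names are hypothetical. Strictly speaking, this makes the log tamper-evident rather than immutable.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry embeds the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user: str, dataset: str, rows: int, timestamp: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"user": user, "dataset": dataset, "rows": rows,
                "timestamp": timestamp, "prev_hash": prev_hash}
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def accesses_for(self, dataset: str) -> list:
        """Answer 'who accessed this data, when, and how much' for one dataset."""
        return [e for e in self.entries if e["dataset"] == dataset]


log = AuditLog()
log.append("analyst_1", "customers", 25, "2021-01-05T10:00:00Z")
log.append("vendor_7", "customers", 3, "2021-01-05T10:05:00Z")
```

Calling `log.verify()` returns `True` for an untouched log and `False` once any stored field has been altered, which is the property an auditor cares about.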

While the CCPA does not go as far as its New York counterpart act with respect to potential lawsuits, leaving enforcement primarily to the office of the attorney general, it’s of course better to avoid lawsuits altogether by ensuring you’re in compliance. California will thank you, and so will your customers.

To learn how ALTR is helping organizations like yours, check out our latest CCPA case study.

___________________________________________________________________________________________________

What’s more valuable – your credit card number or your name? It may depend on the situation, but many of us never thought the information about us that’s freely and publicly available – our names, our addresses, our emails – or even less public data like our social security numbers, would be worth something to somebody someday. But the world of data has changed in the last few years, that day is here, and when you look at PII vs PCI, PII data is now worth its weight in credit cards.

As recently as the early 2000s, there was no clear way to deal with credit card fraud. Who was on the hook for the purchases made by a scammer with a stolen credit card number? Generally, it was the credit card company. That created an incentive for those companies to impose stronger security on companies that wanted to offer the benefits of credit card payments to their customers. Eventually the industry came together on the Payment Card Industry Data Security Standard (PCI DSS) in 2006.

In order for merchants and other vendors to be compliant with PCI DSS, they must meet requirements for secure networks and protection of cardholder data, validated by audit. The requirements are scaled by the number of transactions handled, from fewer than 20,000 to more than 6 million annually. Non-compliance can result in fines from some major credit card companies. While compliance with PCI DSS is not required by federal law, it does have the effect of putting a focus on credit card data security.

Obviously, credit card companies had an incentive to ensure data was secure in order to limit their liability for fraud. But how do PCI vs PII value and risk stack up? What’s the liability for breaches of personally identifiable information (PII)? Until recently, there was very little. One of the reasons was that we simply didn’t realize PII data was valuable.

Around the same time PCI credit card protections were being implemented in 2006, Facebook was ramping up. While we understood that credit cards could be stolen and used to purchase goods, we were putting our names, our hometowns, our mother’s names, our dog’s names, our employers, our favorite restaurants out there for the world to see without a thought for what could be done with this data.

It turns out that PII data is supremely valuable. In fact, PII, PCI and PHI (personal health information) represent the data treasure trove. Facebook and others turned our personal information into lucrative revenue streams by offering it to third parties for advertising targeting, political research, and more. A study calculated that internet companies earned an average of $202 per American internet user in 2018 from personal data. Many companies use the information they gather about us as customers to send targeted offers to increase sales, create new product lines, or optimize distribution channels.

And the value of PII is not lost on cyber bad actors: PII can be used for everything from fraudulent tax returns to synthetic identity fraud. In fact, when you compare PII vs PCI, PII comes out ahead. Because PII tends to be a longer-term identifier (you don’t change your name or your social security number; they stay with you, just like your PHI health history), it has more value to thieves than credit card numbers that can be easily canceled and reissued.


So, while the value of PII is increasing for both legitimate users and bad actors, the penalty for PII breaches is finally increasing as well. All 50 U.S. states now have PII regulations like personal data breach notification laws. Europe’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and laws under consideration in 10 states add fines onto direct and indirect costs such as time and effort to deal with a breach and lost opportunities. According to the IBM 2020 Cost of a Data Breach Report, PII data was the most frequently compromised data and more costly than other types. The average cost to companies is now $150 per PII record. The combined costs of a breach now create a significant liability for those companies that gather, hold and share PII data.

The good news is that the cost and difficulty of securing that data is decreasing. Merchants have moved from encryption to tokenization when storing credit card data for its ease of use, low overhead, and the fact that breaches don’t result in data that can be utilized by thieves. Protectors of PII can do the same. Combine that with the increase in compute power promised by Moore’s law, and SaaS-based solutions like ALTR’s can deliver low-cost, easy-to-implement data security that democratizes PII security.

See our complete guide to PII data - How to identify, understand and protect the personally identifiable information your company is responsible for.

Just like there was a critical inflection point for PCI data where the amount of theft and fraud drove the credit card companies to require better security, there is an inflection point for PII data where the cost of breaches outweighs the cost of security. And we’ve passed it. Especially as we move sensitive data to the cloud, where access is much more rampant than in your locked down data center, it’s critical to ensure that data is secure. Breaches are only going to get more expensive, and it’s past time to protect PII as stringently as PCI.

Get started protecting PII, PCI and PHI data in the cloud with the ALTR free plan.

In the Data Management Body of Knowledge, data strategy is defined as a “set of choices and decisions that together, chart a high-level course of action to achieve high-level goals.” Data strategy sits at a critical spot within any organization: you’re defining what you’re going to do with data to reach the business outcomes you want to achieve. In doing so, you must take into account things like your regulatory environment, current infrastructure, and the limits on what you’re able to do with data.

In an article published in Harvard Business Review, the authors view data strategy as having two styles: offense and defense. Offensive data strategy focuses on getting value out of data to build better products, improve your competitive position, and improve profitability, while a defensive data strategy is focused on things like regulatory compliance, risk mitigation, and data security. An organization must make considered trade-offs between offense and defense, the authors propose, as there are limited resources available and attempting to accomplish all of your offensive and defensive goals is akin to having your cake and eating it too.

Here’s the thing: we disagree.

The Harvard Business Review article was published in the spring of 2017, before the privacy regulations we know and love today were in effect, before 2020’s massive shift to the cloud, and before data solidified itself as the critical new trend. The world has changed since then, yet this viewpoint is still echoed by leaders in the fields of data governance and data management as true. It’s time to take a step back and refresh our thinking. Here’s what we know:

Hardly anyone knew the name “Snowflake” in 2017, and in 2020 the Cloud Data Platform became the largest IPO by a software company in U.S. history. They did so by offering a simple way for organizations to store and analyze huge amounts of information. They’re not alone, either. Companies like Fivetran and Matillion make it easy to load data into cloud data platforms like Snowflake, while those like Tableau allow you to extract value from data within those platforms. With the shift to the cloud, it’s easier than ever to implement an offensive data strategy. Unfortunately, new and increasing privacy regulations mean your focus is forced elsewhere.

The Harvard Business Review article was right when it said companies in highly regulated environments must focus on defensive data strategies. What wasn’t accounted for in 2017 were the sweeping privacy regulations that have come into effect around the globe. Now, every company is a regulated company and must spend time and resources implementing a defensive data strategy to avoid the costly penalties that come with a data breach. So if you must focus on defense, is there a way to somehow get the best of both worlds?

Defensive strategies must take a page out of the offensive playbook and implement tools for risk mitigation, data governance, and data security as simply as possible. If tools can be implemented as services, without requiring resources to install and maintain, your team can accomplish both your offensive and defensive goals. Further, tools that can mitigate the risk of credentialed threats through proactive security allow you to enhance your offensive capabilities by moving more sensitive workloads to the cloud and sharing data with more teams.

You no longer have to make considered trade-offs between offensive and defensive data strategies. By implementing a defensive data strategy that mirrors the simplicity of your offensive tools, you can actually increase your ability to get value out of data. In this case, you truly can have your cake and eat it too.

ALTR's cloud platform helps mitigate data risk so you can confidently share and analyze sensitive data. To see how ALTR can help your organization, request a demo or try it for yourself!

OneTrust is the #1 fastest growing company on the Inc. 500, and for good reason. Organizations big and small, including about half of the Fortune 500, rely on the OneTrust platform to easily operationalize privacy, security, and data governance. This is especially critical as data (and data privacy) solidifies itself as the next big trend.

While it’s easier than ever to get value out of data, managing data has never been more complicated. Organizations have more data than ever, with more users needing access to data than ever before. On top of this, data is riskier than ever, forcing teams responsible for Privacy, Legal, Security, Risk, and Compliance to manage growing privacy regulations and attack vectors at the same time.

OneTrust and ALTR have approached this problem from separate ends: OneTrust by simplifying privacy and governance policy and ALTR by simplifying the implementation and enforcement of those policies. While separate approaches, our vision of enabling secure, governed access to data is a shared one. By combining our technologies, we’re able to meet in the middle to provide a holistic solution for data governance and data security where data teams get access to the data they need, while governance, privacy, and legal teams feel confident in their customers' and employees' privacy, and security and risk teams ensure data remains protected. This is why we’re beyond excited to announce our partnership and integration.

Our partnership with OneTrust allows thousands of customers to more tightly integrate their governance, data, and security teams. We believe this will help our shared customers dramatically simplify data governance programs, using automation to close the gap between governance policy creation and security enforcement.

With our new integration, OneTrust scans data sources to catalog your sensitive data and create policy to govern access based on its sensitivity. OneTrust then uses this policy to automatically configure access controls within ALTR, and ALTR enforces your governance policy on every request for sensitive data.

This new partnership brings together OneTrust’s centralized platform for privacy, security, and data governance with ALTR’s advanced, real-time enforcement. With this, you can automate access to sensitive data and close the gap between policy creation and enforcement, at scale, in a really simple way.

Check out the OneTrust and ALTR webinar, as we take a deeper dive into the benefits of this partnership along with a live demonstration of our new integration. Click here to watch the webinar on demand!

As a society, we’ve been forced seemingly overnight into a new work environment with offices closing (and many companies permanently downsizing office space) and remote work seeming more and more like it's here to stay. The new normal is sure to be more digital, and enterprises are moving quickly to adapt to these changes by enabling remote work and further accelerating the migration to the cloud. Unfortunately, these rapid changes have also opened up new avenues for attackers to exploit. If companies are to remain secure in the new normal, they’ll need to adapt their security posture as well.

Enterprises already invest heavily in security (worldwide security spending is already over $100 billion annually, and expected to grow to $170 billion by 2022), but still lack basic visibility into and control over the sensitive data they collect and consume. This lack of visibility prevents companies from understanding how their organization uses data and also from taking advantage of these data consumption patterns, a key requirement as we evolve into the age of data. Meanwhile, a lack of control around data consumption means while companies may have implemented controls around who is able to access data and what data they’re allowed to access, they’ve not closed a critical gap: how much data a credentialed request is allowed to consume.

These two factors — an inability to understand enterprise data consumption and a lack of control around how much data is allowed to be consumed — combined with a quickly evolving regulatory environment, create a perfect storm for today’s enterprises: credentialed requests for data are often able to consume without limits, opening up a level of risk that puts entire companies at stake. With the rapid changes demanded by today’s new normal, the urgency to close this gap has only grown in importance.

Companies that don’t place limits on the consumption of sensitive data are already in very dangerous territory as they remain vulnerable to both insider and external threats. Verizon’s latest Data Breach Investigations Report informs us that inside actors are involved in 30% of data breaches, and over 80% of hacking-related breaches (hacking by external parties is the most common type of threat action) involve the use of brute-force attacks or stolen credentials. The common denominator here is clear: having credentials is the best way to obtain what threat actors are looking for — sensitive data.

In addition to the financial impacts of a breach (CCPA fines can be up to $7,500 per record, for example), the impacts to brand reputation and operations pile up quickly, with strategic efforts put on hold while team members turn into firefighters and customers lose trust in the company.

To mitigate these risks, enterprises need a solution that provides observability and control over data consumption. These controls provide confidence in the security of the organization’s data no matter where it lives, enabling companies to properly and rapidly take advantage of the migration to the cloud. In fact, it’s only by having these capabilities that organizations can confidently and securely enter the new normal.

Ideally, it would be great if you could treat your data the same way banks treat money in an ATM. Here’s the process as we see it:

This is where most companies are today, and where security tools offer their services. You’re able to solve for identity, authentication, and privilege, and most tools can provide some level of auditing for you as well. However, there is a major piece missing from the enterprise’s arsenal that banks solved a long time ago: controlling how much someone is able to consume — money in the bank’s case, data in ours.

For security and logical reasons, banks place limits on the amount of money you’re allowed to withdraw from an ATM. These limits are enforced on individual trips to the ATM, as well as contextually throughout the day. Limits like this protect the end user from fraudulent activity, protect the bank from customers withdrawing more money than they have (either accidentally or maliciously), and ultimately build trust in the bank’s ability to securely store its customers’ money.

This is exactly what enterprises need to be doing with sensitive data. You need the ability to contextually understand consumption patterns across all sensitive data (whether PII, PHI, or PCI data), limit how much data a request is allowed to consume, and proactively prevent requests from consuming more data than they are allowed to.
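The ATM analogy maps fairly directly onto code. Below is a minimal Python sketch of a limiter that enforces both a per-request cap and a running daily cap on rows returned. The `ConsumptionLimiter` class, its parameters, and the example limits are assumptions made up for illustration, not a description of how ALTR enforces its thresholds.

```python
from collections import defaultdict
from datetime import date


class ConsumptionLimiter:
    """ATM-style limits: a cap per request plus a cap per user per day."""

    def __init__(self, per_request_limit: int, daily_limit: int):
        self.per_request_limit = per_request_limit
        self.daily_limit = daily_limit
        self._used = defaultdict(int)  # (user, day) -> rows already returned

    def allow(self, user: str, rows: int, day: date) -> bool:
        """Return True and record the usage only if both limits are respected."""
        if rows > self.per_request_limit:
            return False  # a single request asks for too much
        if self._used[(user, day)] + rows > self.daily_limit:
            return False  # the request would push the user past the daily cap
        self._used[(user, day)] += rows
        return True


limiter = ConsumptionLimiter(per_request_limit=100, daily_limit=250)
today = date(2021, 1, 5)

decisions = [
    limiter.allow("analyst_1", 100, today),  # within both limits
    limiter.allow("analyst_1", 101, today),  # exceeds the per-request limit
    limiter.allow("analyst_1", 100, today),  # running total reaches 200
    limiter.allow("analyst_1", 100, today),  # would reach 300, over the daily cap
]
# decisions is [True, False, True, False]
```

Denied requests do not count against the daily total, which mirrors how a declined ATM withdrawal leaves your remaining daily allowance untouched.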

With ALTR, organizations can set governance policy to limit the consumption of sensitive data across the enterprise. Each time sensitive data is requested, ALTR records both the request itself and metadata around the request (which data was requested, how much, when, from where, etc.), and analyzes the request against ALTR’s risk engine before allowing or preventing the return of sensitive data. Data consumption and policy-related information can be sent to enterprise SIEMs and external security clouds and visualization tools (like Snowflake and Domo) for further analysis so the company can understand and learn from its data consumption behavior.

By implementing data consumption governance with ALTR, enterprises can understand how their organization consumes sensitive data, protect that data, protect their customers, keep up with a rapidly changing regulatory environment, build trust, and solidify their reputation while securely and confidently entering the new normal.

Ready to learn more about improving visibility into and control over your organization's data consumption? Check out this brief overview or reach out to get the conversation started. We’d love to hear from you!

In part one of this series, we talked about how 2020’s massive increase in the use of cloud data platforms led to organizations rushing to get to the fastest “time to data and insights”. This meant they were left with no option but to consider data governance and security last, which is massively problematic not only for regulatory reasons but for financial reasons as well. So part one was more about the problem; this article will address the solution.

A multi-cloud data governance and security architecture starts with the data generated and where that data is stored. Data sources can range from OLTP databases to large data sets used for data science. These databases exist across multiple cloud data platforms (Snowflake, AWS Redshift, Google BigQuery) as "fit for purpose" databases for analytics, operations, or data science. Data observability, governance, and security are applied from the ingestion point through to the exfiltration of data by various data consumer types such as business intelligence solutions.

The discovery and classification of data across multiple cloud data platforms and data sources is paramount. Once data is discovered and classified, you may introduce automation to apply governance and security policies based on security and compliance requirements specific to the business. Sensitive information is stored in a tokenized format and replaced with keyless and map-less reference tokens.

Data consumption and analytics components of the architecture may observe data access in real time and provide intelligence for stopping both credentialed breaches and erroneous access to data from applications and services used by data consumers such as data scientists, analysts, and developers. Any anomalous behavior should be blocked, slowed down, or reported to the security operations center and initiate a workflow in a company's security orchestration, automation, and response (SOAR) services.
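As a rough illustration of the "block, slow down, or report" idea, the Python sketch below compares a request against a user's historical baseline and picks a graded response. The `classify_access` function and its threshold factors are hypothetical values invented for this example; a real deployment would tune them and would likely use a richer signal than row counts alone.

```python
from statistics import mean


def classify_access(history: list, requested_rows: int,
                    report_factor: float = 2.0,
                    slow_factor: float = 5.0,
                    block_factor: float = 10.0) -> str:
    """Pick a response by comparing a request to the user's average past request size.

    `history` holds the row counts of the user's previous requests.
    """
    baseline = mean(history) if history else 0
    if baseline == 0:
        # No usable baseline yet: surface the request for review.
        return "report"
    ratio = requested_rows / baseline
    if ratio >= block_factor:
        return "block"
    if ratio >= slow_factor:
        return "slow"
    if ratio >= report_factor:
        return "report"
    return "allow"


history = [100, 120, 80]  # average of 100 rows per request
outcomes = [classify_access(history, rows) for rows in (150, 300, 600, 5000)]
# outcomes is ["allow", "report", "slow", "block"]
```

In practice the "report" and "block" outcomes are the ones that would feed the security operations center or a SOAR workflow, as described above.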

The architecture's data governance and security components must support different business goals such as data monetization, revenue generation, operational reporting, security, and compliance while promoting data access performance and "time to data." In other words, the best multi-cloud data governance and security architecture is invisible but very active when it needs to be.

As we proceed into 2021, there is no sign of data generation, storage, and consumption slowing down. Think about IoT data generation, storage, and protection. This shift to the edge is going to be massive! An Andreessen Horowitz article calls for “The End of Cloud Computing,” and with good reason. Peter Levine (Andreessen Horowitz) rightly says, “Data Drives the Change, Real World, Real Time”. With this massive change in structured, unstructured, and edge device data, business leaders should positively incentivize organizations to establish multi-cloud data governance and security architectures now. 2021 is the year.

A properly designed and implemented multi-cloud data governance and security architecture will significantly reduce costs and introduce automation around data discovery, classification, and security. With this architecture, you will know how much data risk exists. Once you know the risks, you can implement governance and security policy once and apply it everywhere. Marrying this with automation into your security operations center (SOC, SOAR) will be very important to ensure you can respond to real data security threats in near real-time.

So that’s why we’re here! We’d love to show you firsthand how ALTR’s Data Security as a Service can help your organization reduce costs and introduce automation around data discovery, classification, and security.

2020 saw an increase in cloud data platforms used for operational, analytic, and data science workloads at breakneck speed. In a rush to get to the fastest "time to data and insights," organizations are left with no option but to think about data governance and security last. The first phase of migration to the cloud involved applications and infrastructure. Now organizations are moving their data to the cloud as well. As organizations shift into high gear with data migration to the cloud, it's time to adopt a cloud data governance and security architecture to support this massive exodus to the major cloud data platforms (Snowflake, AWS, BigQuery, Azure) at scale.

DalleMule & Davenport, in their article What's Your Data Strategy?, say that more than 70% of employees have access to data they should not, and 80% of analysts' time is spent simply discovering and preparing data. We see this firsthand when we work with small and large organizations alike, and this is a widespread pattern. Answering the question of who has access to what data for one cloud data platform is hard enough; imagine answering this question for a multi-cloud data platform environment.

Let's say you're using Snowflake and AWS Redshift. Your critical analytic and data science workloads are spread across both. How do we solve the challenge of answering who has access to what data consistently and across those two cloud data platforms? For companies that are heavily regulated, you must answer these questions while using a specific regulatory lens such as GDPR, HIPAA, CCPA, or PCI. These regulations further complicate things.

The struggle for balance between complying with regulations and promoting the fastest time to data means the experience for developers, analysts, and data scientists must be pleasurable and seamless. Data governance and security have historically introduced bumps on the road to velocity. DalleMule & Davenport’s article presents a robust data strategy framework; they look at a data strategy as a "defensive" versus an "offensive" one. The defensive strategy focuses on regulatory compliance, governance, and security controls whereas the offensive approach focuses on business and revenue generation. The key, they say, is striking a balance; and we agree.

From a technical strategy perspective, in order to implement either a defensive or offensive strategy and achieve a continually shifting balance across multiple cloud data platforms, you need a shared data governance and security architecture. This architecture must transparently observe, detect, protect, and secure all sensitive data while increasing performance over time.

Snowflake famously separated compute and storage. Data governance, security, and data should follow suit. Making the shift from embedded role-based and identity security and access controls in the cloud data platform to an external intelligent multi-cloud data governance and security architecture allows for the optimum flexibility and ability to apply consistent governance and security policies across various data sources and elements. Organizations will define data governance and security policy once and have it instantly applied in all distributed cloud data platforms.

Avoiding governance, security, and access policy lock-in with one cloud data platform provider will be critically important to adopting a multi-cloud strategy. Think of it this way: if you implement data access and security controls for data in Redshift, you can't expect the same policy to automatically be implemented consistently in your Azure, Snowflake, or Google BigQuery data workloads. This type of automation would require an open and flexible multi-cloud data governance and security architecture. It's essential to avoid the unnecessary complexity and cost of having data governance and security silos across cloud data platform providers. Unnecessary complexity doesn't make technical or business sense. Not having a multi-cloud data governance and security architecture will significantly increase data observability, governance, and security costs. The more data you migrate to the cloud, the more your cost increases. Worldwide data is expected to increase by 61% to 175 zettabytes, most of which will be residing in cloud infrastructures. Think about what this will do to governance and security costs across multiple cloud data platform environments.

This massive movement of data to the cloud will require an incredibly robust data discovery and classification capability. This capability will answer where the data is and what type of data it is. AI and ML will be critical to making sense of the discovery and classification metadata across these data workloads. You can't protect what you can't see. The discovery of vulnerable assets like data has been the age-old challenge with implementing security controls over large enterprises. With observability, discovery, and governance, you will now be inundated with a tremendous amount of data about people's access and the security controls in place to mitigate potential data security risks.

Check out part two of this series to learn how a properly designed and implemented multi-cloud data governance and security architecture can reduce costs and introduce automation around data discovery, classification, and security.

An earlier post talked about why cloud data warehouses (CDWs) match so well with data security as a service (DSaaS). This post goes into more detail about exactly how DSaaS improves data access governance for CDWs.

The greatest power of the cloud is that it eliminates the need to operate many parts of a traditional IT infrastructure, from servers to networking equipment. This of course brings a lot of benefits with it, including lower capital expenditure on hardware and software, much more efficient operations, and significant savings of time and money. CDWs in particular also enable better data visualizations and advanced analytics so your organization can make better business decisions. Those are big wins.

When it comes to data access, however, there are some vital functions that the cloud cannot get rid of. As discussed last time, the first function is user authentication, which can be handled for CDWs in a straightforward way by using a single sign-on (SSO) solution. This step answers a fundamental question — Are you who you say you are? — before allowing a user to access the CDW at all.

What happens once a user is inside the CDW is covered by the more complex functions of authorization and tracking. That’s where DSaaS comes in.

DSaaS operates via a special database driver that enables granular control and transparency for data access without creating any meaningful impact on the performance of the cloud data warehouse. That means you can get the most out of the scalability, speed, and ease of access provided by CDWs such as Snowflake or Amazon Redshift, while also achieving better privacy and compliance.

The key is that DSaaS works all the way down to the level of the individual query. When a user attempts a specific data request, the system is able to see it and place controls on it using a “zero trust” approach. This means that every authorization is treated independently, not only when a user begins a session of using the CDW, but also at each step along the way.

Without slowing down anyone’s work, this allows the system to answer a second fundamental question — Should this user be permitted to execute this query right now? — each time the user attempts a data transaction.
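As a rough sketch of what per-query authorization looks like, the snippet below checks every request against the current policy instead of trusting a decision made at login. The `GRANTS` store and the `authorize_query` function are hypothetical names for this example, not part of any DSaaS product or driver.

```python
from typing import Dict, Set

# Hypothetical, mutable policy store: user -> tables they may query right now.
GRANTS: Dict[str, Set[str]] = {"ana": {"orders", "customers"}}


def authorize_query(user: str, tables: Set[str]) -> bool:
    """Evaluate this one query against current policy.

    Nothing is cached per session, so a policy change takes effect
    on the very next query. Unknown users and empty requests are denied.
    """
    allowed = GRANTS.get(user, set())
    return bool(tables) and tables <= allowed


first = authorize_query("ana", {"orders", "customers"})   # allowed under current policy
GRANTS["ana"].discard("customers")                        # policy changes mid-session
second = authorize_query("ana", {"orders", "customers"})  # same query, now denied
```

The point of the design is that the second call fails even though the user never logged out: authorization is tied to the query, not to the session.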

To use an everyday analogy, the process works something like an ATM. When you use an ATM, it’s not enough that you’re a bank customer with the correct PIN; that system will enforce very specific limitations on whatever you try to do. Before you can make a withdrawal or transfer, it checks that the money is available. Before you can attempt to clean out your account all at once, it enforces a single-transaction limit or daily limit to prevent you from doing so. And if you finish your transaction, walk away, and then walk back when you remember something else you meant to do, it makes you go through authentication again.

Although the technology operates differently, DSaaS does something very similar for a CDW, this time treating data like money. It enforces rules around questions such as these:

DSaaS makes it easy for administrators, compliance officers, and security personnel to establish rulesets that govern the flow of data, without requiring an organization’s developers to code and test the logic from the ground up.

By enforcing these rulesets in real time, DSaaS enables businesses to put up guardrails that prevent users from accessing specific types or amounts of data that they shouldn’t. The upshot is that your organization is able to enjoy all of the value that CDWs create through efficient data access, while mitigating the attendant security and compliance risks.
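One way to picture such a guardrail is the data equivalent of an ATM's daily withdrawal limit: a cap on how many rows a user can pull within a time window. The sketch below is an assumption-laden illustration; the `RowLimitRule` class, its parameters, and the sliding-window approach are invented for this example and do not describe a specific product's rules engine.

```python
from collections import defaultdict, deque


class RowLimitRule:
    """Allow at most `max_rows` rows per user within a sliding time window."""

    def __init__(self, max_rows: int, window_seconds: float):
        self.max_rows = max_rows
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # user -> deque of (timestamp, rows)

    def allow(self, user: str, rows: int, now: float) -> bool:
        history = self._history[user]
        # Drop requests that have aged out of the window.
        while history and now - history[0][0] >= self.window_seconds:
            history.popleft()
        used = sum(r for _, r in history)
        if used + rows > self.max_rows:
            return False  # blocked requests do not consume any budget
        history.append((now, rows))
        return True


rule = RowLimitRule(max_rows=1000, window_seconds=60)
results = [
    rule.allow("ana", 600, now=0.0),
    rule.allow("ana", 400, now=10.0),
    rule.allow("ana", 1, now=20.0),    # budget exhausted inside the window
    rule.allow("ana", 500, now=61.0),  # the first request has aged out
]
```

Timestamps are passed in explicitly here to keep the example deterministic; a real enforcement point would read a clock.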

Beyond regulating data access in real time, DSaaS also creates an immutable record of transactions at the query level. This provides a level of context that goes beyond visibility (Can we see what is happening?) to true data observability (Are we able to draw conclusions from what is happening?). That level of insight is a boon for compliance and security officers.

Working at the application layer, DSaaS can see both sides of a data transaction, providing a rich history of the queries a user made, which data they touched, and which data they received back. Such detail shines a bright light into previously dark corners of data access to uncover previously hidden patterns.

Because the records of these data transactions, along with administrative actions, are kept in a tamper-resistant archive, any data that is changed will be detected and can be changed back if necessary. And because the archive itself records exactly which users and records were affected, it aids in creating an audit trail for complying with recent tough privacy regulations such as CCPA.
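A common way to make a log tamper-evident is to chain entries together with hashes, so that changing any past record invalidates everything after it. The sketch below shows that general technique with Python's standard `hashlib`; it is an assumption about how such an archive could work, not a description of how any particular DSaaS archive is built. The function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a copy of the record, hashed together with the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": dict(record), "hash": _digest(prev_hash, record)})


def first_tampered_index(log: list):
    """Return the index of the first entry whose hash does not verify, or None."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return i
        prev_hash = entry["hash"]
    return None


log = []
append_entry(log, {"user": "ana", "query": "SELECT email FROM customers", "rows": 12})
append_entry(log, {"user": "bob", "query": "SELECT * FROM orders", "rows": 900})
append_entry(log, {"user": "ana", "query": "SELECT ssn FROM customers", "rows": 3})

clean = first_tampered_index(log)
log[1]["record"]["rows"] = 1  # someone edits history
tampered = first_tampered_index(log)
```

A hash chain on its own only detects the change. Restoring the original values would additionally require a trusted copy of the records.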

Using a CDW increases the value of your data to your organization; DSaaS reduces the attendant risks. Using both together enables your organization to improve privacy and compliance while taking full advantage of the portability, scalability, and speed of the cloud.

In a recent Database Trends and Applications webinar, “Protecting Sensitive Data in Your Cloud Data Warehouse with Query-Level Governance,” I had a chance to really dig into why you need full transparency and control over data access, and how to optimize privacy and compliance for today's most popular cloud data platforms.

Whether you already run a CDW or are considering it, check out this webinar on demand and find out how DSaaS can help you make the most of your investment.

Identity and access management (IAM) is the set of technology and processes that grant access to the right company assets, to the right people, at the right time, and for the right reason. In my twenty years of IAM experience, I have seen the full evolution from web single sign-on in the early 2000s, to identity provisioning in 2004, identity governance and administration in 2005, and finally identity and access intelligence and automation driven by “identity fabrics” in 2019.

It is time for IAM concepts to be applied to the data cloud. At ALTR, we see a large trend of increased complexity, maintenance, and operating costs for ensuring people have access to the right data, for the right reasons, and at the right time. Applying IAM concepts to data can simplify this process and reduce your administrative burden.

Just as IAM platforms centrally manage identities and their access to applications, so should a central data governance and security system manage access to sensitive data. Sounds neat, right? Well, it's a bit more complicated than that. Just as identity is moving towards a multi-cloud model, so is data. This means that data is distributed across multiple data clouds like Snowflake, Amazon Redshift, and Google BigQuery. This shift into a multi-data cloud architecture requires a platform that has the following characteristics:

A cloud native data governance and security system uses a cloud service provider’s (AWS, GCP, Azure) IAM roles to grant privileges on data warehouses, schemas, and table rows and columns via policy tags. These grants based on IAM roles allow for proper user or application operations on sensitive data.
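To illustrate the idea of role-based grants applied through policy tags, here is a small sketch in which columns carry tags and roles are granted tags instead of individual columns. Every name in it (`COLUMN_TAGS`, `ROLE_GRANTS`, `mask_row`, the tag and role names) is hypothetical, and it does not use any cloud provider's actual IAM API.

```python
# Hypothetical tags attached to columns; None means the column is untagged.
COLUMN_TAGS = {"email": "pii", "ssn": "pii_restricted", "order_total": None}

# Hypothetical grants: which policy tags each role is allowed to read.
ROLE_GRANTS = {
    "analyst": set(),
    "support": {"pii"},
    "compliance": {"pii", "pii_restricted"},
}


def mask_row(role: str, row: dict) -> dict:
    """Return a copy of the row with values masked wherever the role
    lacks a grant on the column's policy tag. Untagged columns pass through."""
    granted = ROLE_GRANTS.get(role, set())
    masked = {}
    for column, value in row.items():
        tag = COLUMN_TAGS.get(column)
        visible = tag is None or tag in granted
        masked[column] = value if visible else "***"
    return masked


row = {"email": "a@example.com", "ssn": "123-45-6789", "order_total": 42}
support_view = mask_row("support", row)
```

Granting on tags means that a newly classified column is protected as soon as it is tagged, with no change to any role definition.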

A data security strategy that takes a multi-level (warehouse, schema, table, row, column) approach and is easy to implement, scale, and manage is the “north star” of any sensitive data protection program. Answering key questions on establishing this multi-level model and augmenting it with secure views and functions are key to ensuring a solid strategy against massive data exposure and exfiltration.

Having a strategy to map your identity model to your sensitive data is great, but now you need to think about context. Context is what makes your response to potential threats “dynamic.” To gain context, you need a broader view of identity, data sources, security controls, and the governance rules that apply.

By connecting identity, governance, and security together, you can gain much more granular views into and control over how data is used.

Let us look at an end-to-end use case. In this sample use case, we set up a data catalog service to discover data in Snowflake, classify sensitive data, and notify ALTR of sensitive data for consumption governance and protection. Here are the five simple steps to take for this use case.

This end-to-end use case can be scaled to multiple data cloud platforms to govern and protect sensitive data distributed across cloud data platforms. ALTR provides the central data governance and security control point to manage policy once and affect data across your organization, significantly reducing complexity and cost for data protection.

To learn more about how ALTR can help your business, check out the latest demo from ALTR CTO, James Beecham, here.