Format-Preserving Encryption: A Deep Dive into FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges of using padding in FPE.

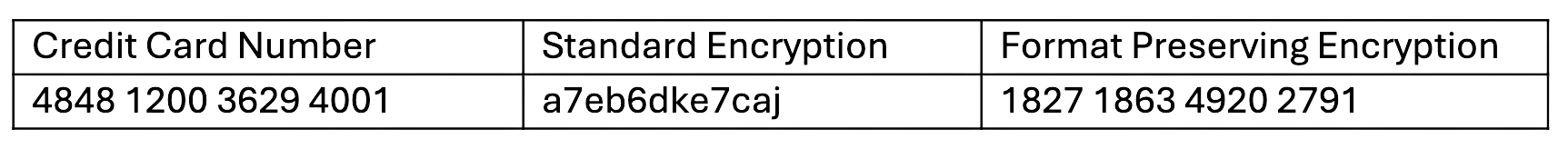

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption method standardized by the National Institute of Standards and Technology (NIST). It is defined in Revision 1 of NIST Special Publication 800-38G, where it replaces the original FF3 mode, and it is built on a Feistel network, a structure widely used in cryptography. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves and processes them through multiple rounds. In FF3-1, every round uses AES under the main key, and the tweak and the round number vary the input from one round to the next.

2. Rounds: FF3-1 always uses 8 rounds. Each round applies a round function to one half of the data and combines the result with the other half using modular addition (modulo radix^m, where m is the length of that half) rather than XOR, which keeps every output character inside the original alphabet. The halves then swap, and the process repeats.

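3. Key Handling: Unlike many classic Feistel ciphers, FF3-1 does not use a separate key scheduling algorithm to derive per-round subkeys. Every round calls AES with the same main key (AES performs its own internal key expansion); what changes from round to round is the input block, which mixes in the round number and one half of the tweak.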

4. Tweakable Block Cipher: FF3-1 is a tweakable cipher: alongside the key, it takes a 56-bit tweak, an additional, non-secret input parameter. Encrypting the same plaintext under the same key with different tweaks yields different ciphertexts, which makes attacks that compare ciphertexts across contexts harder.

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.

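The encryption process itself runs in four stages:

1. Initialization: The plaintext is split into two halves (for an odd length, the first half gets the extra character), and the 56-bit tweak is split into two halves that alternate between rounds. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.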

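2. Round Function: In each round, the round function builds a 16-byte block from one tweak half (combined with the round number) and the numeric value of one data half, encrypts that block with AES under the key, and reads the result as a large integer. The output is a pseudorandom value that depends on the key, the tweak, the round, and the current data.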

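3. Combining Halves: The output of the round function is added to the other half of the data modulo radix^m (for example, 10^m for decimal digits, where m is that half's length), and the sum is written back as m characters of the original alphabet. The halves are then swapped, and the process repeats for all 8 rounds.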

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
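The round structure described above can be sketched in Python. This is a minimal, illustrative sketch, assuming input given as a list of digit values; the function names (`encrypt`, `decrypt`, and the underscore helpers) are hypothetical. It follows FF3-1's shape (8 rounds, the 56-bit tweak split into alternating halves, modular addition, and the standard's length limits), but it deliberately swaps the AES call for HMAC-SHA256 from the Python standard library and omits the standard's byte- and digit-reversal steps. It therefore will not reproduce NIST test vectors and must not be used to protect real data.

```python
import hashlib
import hmac

ROUNDS = 8  # FF3-1 always uses 8 Feistel rounds
DOMAIN_FLOOR = 1_000_000  # FF3-1 requires radix ** n >= 1,000,000


def _num(digits, radix):
    """Interpret digits (most significant first) as an integer."""
    value = 0
    for d in digits:
        value = value * radix + d
    return value


def _str(value, radix, length):
    """Encode an integer as exactly `length` digits (inverse of _num)."""
    digits = []
    for _ in range(length):
        value, d = divmod(value, radix)
        digits.append(d)
    return digits[::-1]


def _validate(digits, radix):
    """Enforce the FF3-1 radix and length limits and check digit values."""
    if not 2 <= radix <= 2 ** 16:
        raise ValueError("radix must be between 2 and 65536")
    max_half = 0
    while radix ** (max_half + 1) <= 2 ** 96:
        max_half += 1
    n = len(digits)
    if radix ** n < DOMAIN_FLOOR or n > 2 * max_half:
        raise ValueError("input length is outside the FF3-1 domain")
    if any(not 0 <= d < radix for d in digits):
        raise ValueError("digit out of range for this radix")


def _split_tweak(tweak):
    """Split the 56-bit tweak into 32-bit halves TL and TR as FF3-1 does."""
    if len(tweak) != 7:
        raise ValueError("FF3-1 tweaks are 56 bits (7 bytes)")
    tl = tweak[0:3] + bytes([tweak[3] & 0xF0])
    tr = tweak[4:7] + bytes([(tweak[3] & 0x0F) << 4])
    return tl, tr


def _round_value(key, w, i, half, radix):
    """Pseudorandom round value. STAND-IN: HMAC-SHA256 replaces FF3-1's AES call."""
    block = bytes(a ^ b for a, b in zip(w, i.to_bytes(4, "big")))
    block += _num(half, radix).to_bytes(12, "big")  # 4 + 12 = 16-byte block
    digest = hmac.new(key, block, hashlib.sha256).digest()
    return int.from_bytes(digest[:16], "big")  # keep 128 bits, like one AES block


def _halves(digits):
    """Split into A (first ceil(n/2) digits) and B (the rest)."""
    u = (len(digits) + 1) // 2
    return list(digits[:u]), list(digits[u:]), u, len(digits) - u


def encrypt(key, tweak, digits, radix=10):
    _validate(digits, radix)
    a, b, u, v = _halves(digits)
    tl, tr = _split_tweak(tweak)
    for i in range(ROUNDS):
        m, w = (u, tr) if i % 2 == 0 else (v, tl)
        y = _round_value(key, w, i, b, radix)
        c = (_num(a, radix) + y) % radix ** m  # modular addition, not XOR
        a, b = b, _str(c, radix, m)
    return a + b


def decrypt(key, tweak, digits, radix=10):
    _validate(digits, radix)
    a, b, u, v = _halves(digits)
    tl, tr = _split_tweak(tweak)
    for i in reversed(range(ROUNDS)):
        m, w = (u, tr) if i % 2 == 0 else (v, tl)
        y = _round_value(key, w, i, a, radix)  # a holds the half this round hashed
        c = (_num(b, radix) - y) % radix ** m  # undo the modular addition
        a, b = _str(c, radix, m), a
    return a + b


if __name__ == "__main__":
    key = b"demo key, not for production"
    tweak = bytes.fromhex("0123456789abcd")
    card = [int(ch) for ch in "4111111111111111"]
    ciphertext = encrypt(key, tweak, card)
    print("".join(map(str, ciphertext)))  # still 16 decimal digits
    assert decrypt(key, tweak, ciphertext) == card
```

Because each round only adds a pseudorandom value modulo radix^m, decryption runs the rounds backwards and subtracts the same value. The round function itself never has to be inverted, which is why a Feistel network can be built on a one-way function such as the HMAC stand-in used here.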

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Improved Performance: FPE algorithms like FF3-1 are designed to be efficient (FF3-1 needs just eight AES block operations per value), ensuring minimal impact on system performance. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower-trust environments: Using a product like ALTR’s FPE, data engineers can use the ciphertext of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

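Padding is a technique used in encryption to ensure that the plaintext data meets the required minimum length for the encryption algorithm. FF3-1, for example, requires radix^n to be at least 1,000,000 for an input of n characters, so a decimal value must be at least six digits long. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE: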

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.

However, padding also brings challenges:

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
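To make the minimum-length requirement and the decryption-side handling concrete, here is a minimal sketch assuming a simple, hypothetical scheme: left-pad short values with a fixed character up to the FF3-1 minimum for the alphabet, and keep the original length alongside the ciphertext so decryption can strip exactly the padding. The names `min_length`, `pad`, `unpad`, and `PAD_CHAR` are illustrative; this is not ALTR's padding scheme, which the text does not specify.

```python
FF3_1_DOMAIN_FLOOR = 1_000_000  # FF3-1 requires radix ** n >= 1,000,000
PAD_CHAR = "0"  # hypothetical pad character; must belong to the alphabet


def min_length(radix: int) -> int:
    """Smallest length n for which radix ** n meets the FF3-1 domain floor."""
    n = 1
    while radix ** n < FF3_1_DOMAIN_FLOOR:
        n += 1
    return n


def pad(value: str, radix: int = 10) -> tuple[str, int]:
    """Left-pad `value` to the minimum length; return it with the original length."""
    return value.rjust(min_length(radix), PAD_CHAR), len(value)


def unpad(padded: str, original_length: int) -> str:
    """Drop exactly the characters that pad() added."""
    return padded[len(padded) - original_length:]


padded, length = pad("123")  # "000123" can now be encrypted with FF3-1
print(unpad(padded, length))
```

Storing the original length is what resolves the ambiguity: "123" and "0123" both pad to "000123", so without the length, decryption cannot tell which value was encrypted. The sketch also shows the format trade-off: a padded three-digit value is encrypted and stored as six characters.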

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

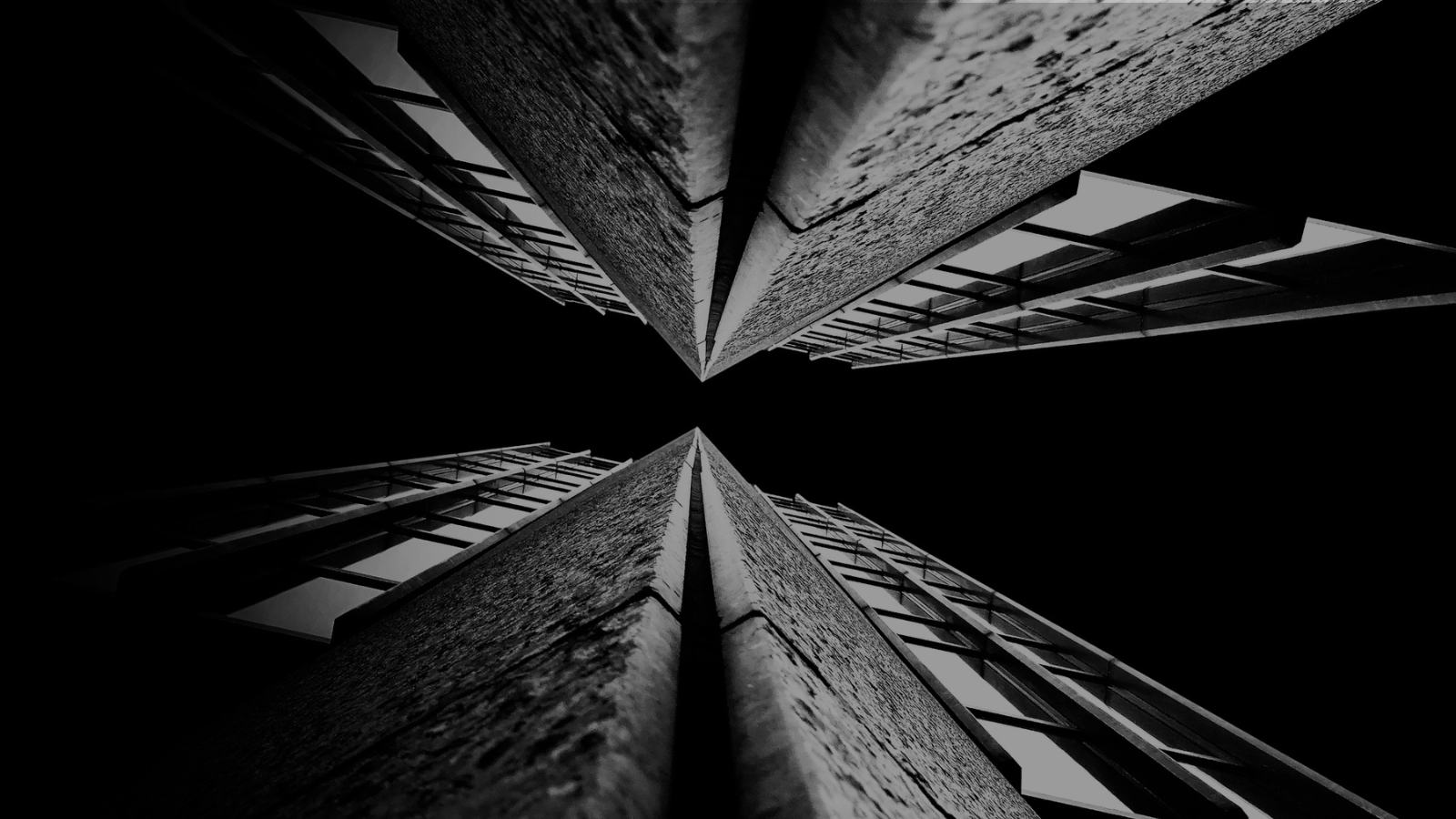

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still nervous wrecks. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI is forcing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when compute and storage are tightly coupled. Sharing data securely with trusted parties outside the walls of the data center is clunky at best and downright dangerous in most cases. And forget running your own GenAI models in your datacenter unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

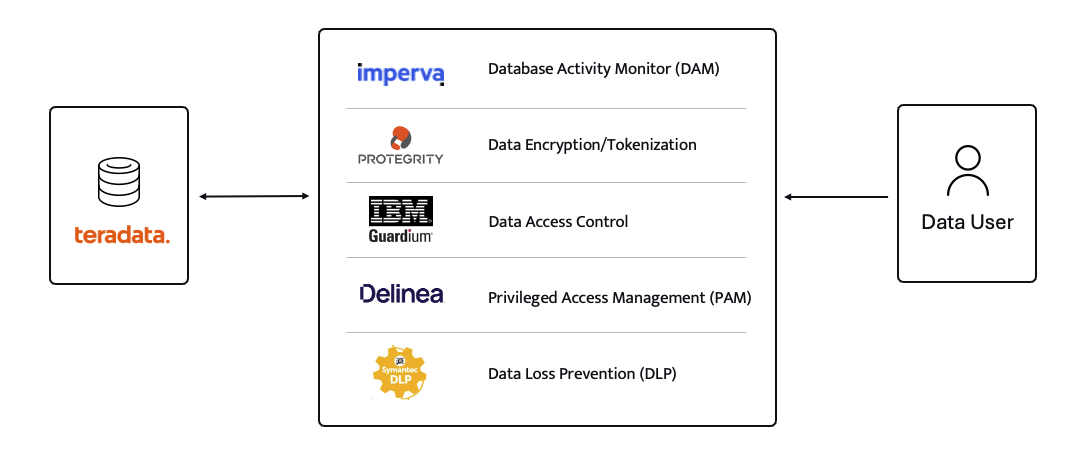

ALTR’s platform has been purpose-built to recreate and enhance, in Snowflake, the protections organizations relied on in Teradata. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR for help with data security on Snowflake. Cool! A member of their Data & Analytics team tried our product, and it was love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

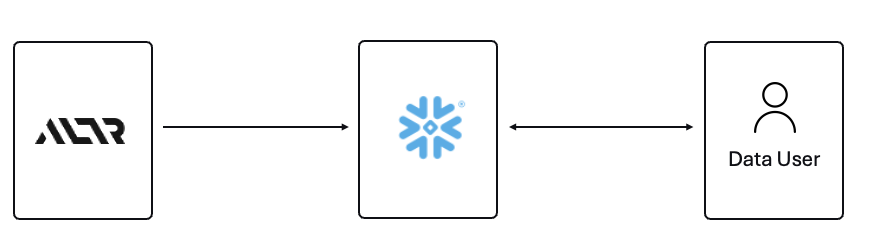

The CISO’s point of view was that ALTR’s security solution could be disabled or removed entirely and would not help in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO: all of those things are possible. Here is what I would have said to the CISO had they given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet powerful and long-reaching implications of its use:

One of the main points I would have liked to make to the CISO is that, as a user of Snowflake, the responsibility to secure the ACCOUNTADMIN role is squarely in their court. By now I’m sure you have all seen the news and responses to the Snowflake compromised accounts that happened earlier this year. Investigations showed that the data theft was caused by customer accounts that had not been properly secured. There have been dozens of articles and recommendations on how to secure your Snowflake accounts, and even a mandate of minimum authentication standards for Snowflake accounts going forward. You can read more here about securing the ACCOUNTADMIN role in Snowflake.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. That is the problem ALTR’s Data Security Platform is built to help you get a handle on: a problem so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly. You must keep up.

2. You must be able to prove to the business that you have a least privileged access mechanism. Data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell-level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges: terabytes of data growing daily, more users with more applications, and trusted third parties who want to collaborate with your data. All of this leads to an unmanageable set of internal processes that slow down the business and introduce risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt he had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team’s use of ALTR?” I don’t know what answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure and I am by no means saying ALTR can protect your Snowflake data 100% by using our platform. Data security is all about reducing risk. Control the things you can, monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015, the migration of corporate data to the cloud has rapidly accelerated. In 2015, an estimated 30% of corporate data was in the cloud; by 2022, that share had doubled to 60% in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data moved to the cloud, new challenges presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. The move brought different cloud-computing cost structures, along with new skill sets and new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to cloud data warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation, and what feels like every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need to catalog, analyze, and use it, with the promise of making our personal lives better, for a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation soon followed, and world governments responded with regulations such as GDPR, CCPA and, most recently, the European Union’s AI Act. The risks and fines have run into the billions. This is a story we already know well. Data Security and Privacy have thus become a critical function, primarily for the obvious use case: compliance and regulation. Yet only 11% of organizations have encrypted more than 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enabled real-time supply chain visibility and dynamic pricing strategies, and let organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real Time Analytics - Because FPE is an encryption method that returns data in its original format, the data keeps its length and structure and remains useful, so more data engineers, scientists, and analysts can work with it without being exposed to sensitive information.

Data Sharing – FPE enables the sharing of sensitive information, both personal and confidential, supporting secure collaboration and innovation alike.

Proactive Data Security – FPE allows for the pseudonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding for ransom a company that takes a proactive approach, using FPE in combination with other Data Security Platform features.

Empowered Data Engineering – With FPE, data engineers can still build, test, and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failure on the encrypted values. Data validations and data quality checks for formats, lengths, and more can be written and tested without exposing sensitive information. Federated queries, joins, and counting or grouping aggregations can run without decryption, because FPE with a fixed key and tweak always maps a given value to the same ciphertext. FPE does not preserve numeric order, however, so range filters and sums over encrypted values will not reflect the original data. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with the proper rights to see the original values.

Cost Management – While FPE does not come close to solving cost management in its entirety, it can definitely contribute. We are seeing demand for FPE as an alternative to replicating data in the cloud to development, test, and production support environments. With data transfer, storage, and compute costs, moving data across regions and environments can be very expensive. With FPE, data can be encrypted and decrypted using compute that costs less than organizations' current antiquated data replication jobs. This makes FPE a viable cost-saving option for producing production-ready data in non-production environments. Look for a future blog on this topic and all the benefits that come with it.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well-documented challenges in the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role- and attribute-based access controls, and data activity monitoring will be needed for a proactive approach to security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations as there have been many high-profile data breaches recently. There are several ways to secure the data including restricting access, masking, encrypting or tokenization. These can pose some challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

As you can see, standard encryption produces a completely different output, which may be incompatible with systems that require or expect a 16-digit numeric format. With FPE, the encrypted data still looks like a 16-digit number. This is extremely useful where data must stay in a specific format for compatibility, compliance, or usability reasons.

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next, the NIST algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then be selectively decrypted using ALTR’s access policies.
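The first step, analyzing a column's format and length, might look something like the sketch below. It is a hypothetical illustration, not ALTR's implementation: the function `infer_format` and the three candidate alphabets are assumptions. It picks the smallest alphabet that covers every character in a sample of values, reports the resulting radix and length range, and flags values shorter than the FF3-1 minimum (radix^n of at least 1,000,000), which would need padding. Separators such as dashes are assumed to have been stripped beforehand.

```python
import string

# Hypothetical candidate alphabets, ordered from smallest to largest radix.
ALPHABETS = [
    ("digits", string.digits),
    ("lowercase alphanumeric", string.digits + string.ascii_lowercase),
    ("alphanumeric", string.digits + string.ascii_letters),
]


def infer_format(values):
    """Choose an alphabet and radix for a column and flag too-short values."""
    if not values:
        raise ValueError("need at least one sample value")
    chars = set("".join(values))
    for name, alphabet in ALPHABETS:
        if chars <= set(alphabet):
            radix = len(alphabet)
            floor = 1
            while radix ** floor < 1_000_000:  # FF3-1 domain minimum
                floor += 1
            lengths = [len(v) for v in values]
            return {
                "alphabet": name,
                "radix": radix,
                "min_len": min(lengths),
                "max_len": max(lengths),
                "needs_padding": [v for v in values if len(v) < floor],
            }
    raise ValueError("column contains characters outside the supported alphabets")


print(infer_format(["4111111111111111", "12345"]))
```

Choosing the smallest covering alphabet matters: encrypting a digits-only column with a 36-character alphabet would put letters in the output and break the very format the encryption is meant to preserve.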

FPE offers several benefits for organizations that deal with structured data:

1. Adds an extra layer of protection: Even if a system or database is breached, the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations, such as PCI-DSS, HIPAA, and GDPR, call for safeguards such as encryption for sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

In my last blog post I discussed the increase in market attention around the category of “Data Governance”. It was fascinating to see the “Forrester Wave™: Data Governance Solutions, Q3 2021” report come out just a few weeks after that. It’s another proof point that the market segment is attracting more attention than ever before, and we’re thrilled to see ALTR partners Collibra and OneTrust get recognized for their outstanding leadership in the space.

But the report is also evidence that we may not all be talking about the same thing when we say, “Data Governance.” Based on the companies included, the report could have easily been called “Data Intelligence Solutions” instead. All of the companies named in the Forrester Wave really focus on knowing about your data: data discovery, data classification and data cataloging, and many actually refer to themselves as “data intelligence” companies. Data intelligence is a critical first step to using data as well as the first step to data governance. It could even be considered the first generation of data governance technology. But think about the word “govern” – it means rule, control, regulate. It’s about taking action. So, just knowing about the data is simply not enough.

The report points this out when it talks about how companies are maturing their privacy, security, and compliance features in response to growing regulations. Companies in the report are taking different approaches to addressing the need for increased security features. One of the ways they’re tackling this is by partnering with companies, like ALTR, who can help them take the next strides into controlling and protecting data.

We could look at this evolution as the next generation of data governance technology, or Data Governance Gen 2. This means moving beyond just knowing about the data into controlling and protecting it. It includes functions like data masking, data consumption controls, and data tokenization. Data masking blocks out key information in sensitive data to ensure that only the people who need to see data can, and only when they should. Data consumption controls limit how much data any individual user can access, based on factors such as time and location, to only the amount needed for their role or a specific task. This ensures that a bad actor with seemingly authorized access can’t bleed you dry of data. And tokenization replaces sensitive data completely with non-valuable placeholder tokens.
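The difference between masking and tokenization can be illustrated with a short sketch. Both pieces below are hypothetical and for illustration only: `mask` hides all but the last four characters, while the `Tokenizer` class swaps each value for a random token and keeps the mapping in an in-memory dictionary standing in for a secured token vault.

```python
import secrets


def mask(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Replace all but the last `visible` characters with mask_char."""
    if len(value) <= visible:
        return mask_char * len(value)  # too short to reveal anything safely
    return mask_char * (len(value) - visible) + value[-visible:]


class Tokenizer:
    """Replace sensitive values with random tokens that carry no information."""

    def __init__(self):
        self._vault = {}  # token -> original value (stand-in for a secure vault)
        self._by_value = {}  # original value -> token, so repeats share one token

    def tokenize(self, value: str) -> str:
        if value not in self._by_value:
            token = secrets.token_hex(8)
            while token in self._vault:  # avoid an (unlikely) collision
                token = secrets.token_hex(8)
            self._vault[token] = value
            self._by_value[value] = token
        return self._by_value[value]

    def detokenize(self, token: str) -> str:
        return self._vault[token]


print(mask("4111111111111111"))
tokens = Tokenizer()
print(tokens.tokenize("123-45-6789"))
```

Unlike FPE, a token has no mathematical relationship to the original value, so recovering the original requires access to the vault rather than to a key.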

All of these policy-based data controls are based on the work done in the data intelligence step – data can’t be governed and protected effectively until you know what data you have and where.

There’s actually a big gap between knowing about your data and taking action to ensure it’s secure. In the past, data might have been de facto protected by safeguards placed on the perimeter by security teams. But since data has moved to the cloud, security teams no longer own or manage the infrastructure where the data resides. Cloud data platforms do. Those platforms employ enterprise-class security features and firewalls that protect against traditional attacks, but they can’t know who should have access to what data. Companies are still responsible for managing user access and controlling and protecting their data.

With the data governance and security teams potentially coming at the issue with different architectural approaches and different end goals in mind, this can leave a gap that no one is minding. That makes having a combined data control and protection solution essential.

Despite the varying definitions and ideas around what data governance is or should be, let’s not lose sight of the goal: keeping sensitive data safe and usable. That’s why global data privacy regulations have been passed. It’s why data governance teams and functions have been created to comply with those regulations. It’s why we created ALTR to help companies combine their data governance and security into one platform that makes it easy to ensure sensitive data is both controlled and protected. In the end, it’s all about safeguarding the data.

Data is one of your company’s most valuable intellectual property assets. Your most sensitive data drives your ability to innovate and create contextually relevant interactions and engagements with your customers, partners, and suppliers. The more you know about them, the more effectively you can serve them and meet their needs. And doing that leads to unprecedented business success.

But we don't believe utilizing this data should force you to live with increased risk. You should be able to allow the appropriate people to have access to relevant data when they need it, without fear of negative repercussions. With ALTR’s industry leading approach to knowing, controlling and protecting your data you can have both: you can leverage data to its full value while reducing risk to near zero.

Many data governance solutions focus just on creating intelligence around data itself by discovering, classifying, and cataloging sensitive data. This provides a necessary foundation but leaves a significant gap in “knowing your data.” How can you really know your data if you don’t know what your data is doing?

ALTR’s unique data consumption intelligence technology delivers rich reporting on data usage that allows you to observe and understand how data is consumed throughout the normal course of business – who’s accessing data, when and how much. You can see how different roles and different users touch different types of data over weeks and months via ALTR’s exclusive heatmap for data consumption intelligence. You can comprehend which individuals need access daily versus monthly. You can also see how automated services such as marketing programs or analytics platforms need specific data at specific times.

This holistic yet detailed visibility enables a comprehensive understanding of how data flows through the enterprise, so you can map out what represents normal. Without this knowledge, how will you know if a request for a large amount of data at an off hour is a threat or just a standard business process? In the absence of knowing normal, everything looks abnormal. We can help you arrive at that understanding of normal quickly, and from this powerful vantage point, you’ll have the capacity to detect and respond to abnormal requests for data in real-time before they can even execute. Having this baseline understanding positions you to start building refined data access controls.
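One simple way to turn "knowing normal" into a check is sketched below. It is a hypothetical, deliberately simple model, not ALTR's detection logic: a role's baseline is the set of hours it has historically queried plus the mean and standard deviation of its row counts, and a request is flagged if it arrives at an unseen hour or is more than three standard deviations above the mean. The function names and the three-sigma cutoff are assumptions.

```python
import statistics


def build_baseline(history):
    """history: list of (hour_of_day, rows_returned) pairs for one role."""
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate spread")
    rows = [r for _, r in history]
    return {
        "hours": {hour for hour, _ in history},
        "mean": statistics.mean(rows),
        "stdev": statistics.stdev(rows),
    }


def is_abnormal(baseline, hour, rows, z_limit=3.0):
    """Flag requests at unseen hours or far above the usual volume."""
    if hour not in baseline["hours"]:
        return True  # this role has never queried at this hour
    spread = baseline["stdev"]
    if spread == 0:
        return rows > baseline["mean"]  # identical history: anything larger stands out
    return (rows - baseline["mean"]) / spread > z_limit


baseline = build_baseline([(9, 1000), (10, 1200), (14, 900), (15, 1100)])
print(is_abnormal(baseline, 3, 1000))  # large-or-not, 3 a.m. is new for this role
```

A real baseline would also account for weekly and monthly cycles, which is exactly the kind of refinement the feedback loop described below is meant to drive.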

The goal of data access controls should be to limit access to data to only legitimate uses, to prevent misuse or misappropriation, without adding unnecessary friction to business processes. The body of knowledge you gather from seeing how data is used normally allows you to create policies that are not arbitrary, but instead custom fit to how your business actually works. When you know what valid usage looks like, you can put consumption policies in place to control data efficiently without constricting the business.

With ALTR’s platform, you can easily, with no code required, create granular policies that automatically block access to sensitive data completely, dynamically mask data, or set consumption thresholds based on risk. Our active controls can not only limit access of data to specific users, but also allow preset actions predicated on those controls such as logging the request, sending an alert, or stopping access entirely.

Then these actions themselves become signals that increase your knowledge of data usage. You can see how often a threshold was exceeded, and you can then adjust and tweak your policies based on what you learn. If, for example, you thought a certain role only needed 10,000 rows once a week, but every 4 weeks it actually needs 50,000 for month-end reporting, you can modify your policy to allow that expected activity. You no longer have to chase an anomaly that really isn’t one, and you remove an impediment for the user. You can also set rate alerts at significant milestones, such as 100, 1,000, 10,000, and 100,000 records, which let you build a distribution curve from the most common access requests to the least, so you can easily home in on requests that are out of the ordinary.
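The threshold-and-milestone idea can be sketched as a small policy object. Everything here is hypothetical (the `ConsumptionPolicy` class, its blocking behavior, and the message strings), assuming a per-role row budget for a single time window: a request that would exceed the budget is blocked, and an alert fires the first time cumulative rows cross 100, 1,000, 10,000, or 100,000.

```python
from dataclasses import dataclass, field

MILESTONES = (100, 1_000, 10_000, 100_000)


@dataclass
class ConsumptionPolicy:
    role: str
    row_limit: int  # rows allowed per window before requests are blocked
    consumed: int = 0
    alerts: list = field(default_factory=list)

    def request(self, rows: int) -> bool:
        """Allow or block a request, recording any alerts it triggers."""
        before, after = self.consumed, self.consumed + rows
        if after > self.row_limit:
            self.alerts.append(f"BLOCKED {self.role}: {after} rows would exceed {self.row_limit}")
            return False  # blocked requests do not count toward consumption
        self.consumed = after
        for milestone in MILESTONES:
            if before < milestone <= after:
                self.alerts.append(f"ALERT {self.role}: crossed {milestone} rows")
        return True

    def reset_window(self) -> None:
        """Start a new window, e.g. at the beginning of each week."""
        self.consumed = 0


policy = ConsumptionPolicy("reporting", row_limit=10_000)
policy.request(8_000)   # allowed; crosses the 100 and 1,000 milestones
policy.request(42_000)  # blocked: month-end volume exceeds the weekly limit
policy.row_limit = 50_000  # adjust after learning the month-end pattern
policy.request(42_000)  # now allowed; crosses 10,000
print(policy.alerts)
```

Keeping every alert, including the blocked request, is what produces the signal history described above: counting alerts per milestone over time gives the distribution of request sizes that makes out-of-the-ordinary requests stand out.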

Over time, these controls also provide a body of knowledge that continues to grow every time an alert is triggered. The insights you gather put you in a better position to protect your data.

At each stage of this system, your understanding of legitimate data usage gets more precise and your controls around data access become more granular. This helps the abnormal requests stand out, shining a light on activities that might indicate a real threat. With ALTR, you can detect and respond to those anomalies in real time, alert your security team to potential threats through your enterprise security SIEM or SOAR, and completely, immediately stop data from being viewed or accessed. And for the most sensitive data, you can preemptively tokenize it to ensure that it’s secure at rest, in use, and in transit.

And again, the signals and alerts you receive when threats arise in this stage help you better understand where dangers exist and optimize your policies around those. This allows you to enable greater freedom of data use where needed but also tighten your data protections where necessary to reduce risk.

By utilizing ALTR’s unified solution for knowing, controlling, and protecting your data wherever it lives, you can build a self-perpetuating system or flywheel that creates a feedback loop, relaying relevant insights so you can continually refine policies and optimize efficacy at each stage. The more you know about how data is being used, the better you can control it. And the better you control data, the better you can protect it across the enterprise.

With ALTR, you can maximize the full value of your data while continually minimizing the risk.

Since ALTR announced the general availability of our direct cloud integration with Snowflake in February 2021, we’ve seen growing momentum from Snowflake customers who want deep insight into data consumption with automated data access controls and patented security solutions that protect against even the most privileged security threats.

We've worked with companies to understand their challenges and help them build a plan to achieve their goals with the Snowflake + ALTR native solution. Here are a few examples of the data governance challenges our customers have faced; hopefully they suggest a way to overcome your own similar obstacles.

We are working with a large financial enterprise that is consolidating data from multiple on-prem and cloud-based software systems (Workday, Salesforce, internal data warehouses) into Snowflake’s Data Cloud. The company felt its data was safer when spread across different systems, because compromising it required access to multiple accounts. Now that it is consolidating data in Snowflake, the risk is elevated: a single compromised credential could open the door to all of its data.

ALTR Solution: In order to maintain their security in the cloud, the company combined ALTR with Snowflake from day one. ALTR provided discovery and classification of sensitive data during merging, delivered access logs to the company’s SIEM for consumption analysis, and implemented governance policy to limit which roles can see data.

Outcomes: With ALTR governance and security included from the outset, the company can be sure that its consolidated data is as protected in the cloud as it was on-prem and that protection can scale with the company’s use of Snowflake to new users and use cases.

This healthcare data aggregator, analyzer, and retailer, which holds sensitive PHI on most Americans, wanted to transition data to Snowflake to make sharing and distribution easier. But before doing that, it needed to translate its high-end on-prem security posture to the cloud to comply with regulatory requirements. This included finding, classifying, and controlling all the company’s sensitive data prior to migration. With a busy DBA team, the company simply had no bandwidth to take that on.

ALTR Solution: ALTR showed the company how quick and easy it can be to understand where the data is and put policy on it, without code. In fact, it could be done in just a couple of half-hour sessions and the solution could be up and running within a week—providing real-time alerts and consolidated access logs to Splunk for analysis.

Outcomes: Discovery and classification reports will support audit and compliance requirements as data moves into Snowflake. The security team can effectively understand consumption of data and place policy over access including masking. Because of the low effort required by the DBA team and the fast implementation, the company gets a rapid time to value.

A $200M+ logistics company worked with ALTR’s partner Aptitive to move data to and process it in Snowflake. However, their highly sensitive financial data required extra security and attention. It had to be added to Snowflake in order to provide a full picture to the business, but the company’s Snowflake DBA wanted to assure company leadership the data would be safe from credentialed access threats, including himself!

ALTR Solution: ALTR provides visibility around data access via tokenization combined with access reporting, real-time messaging alerts, column governance, and thresholds, for an airtight way to store and use sensitive data in Snowflake.

Outcomes: With the ALTR solution, if sensitive data is accessed by anyone other than authorized users, company leadership is notified and can take action against any insider or credentialed access threats. This opens the door to moving more sensitive data into Snowflake, allowing the company to extract insights and value from all its data.

A large data service powered by a Snowflake database required many layers of security including an in-house encryption engine. However, tens of thousands of end users sharing a single Tableau connection prevented any governance or security on individual user access.

ALTR Solution: Integrate ALTR’s data consumption governance with the company’s encryption to control which users can decrypt data. Provide per user visibility and governance through the shared Tableau connection. Integrate access logs into Splunk to provide security teams real-time visibility into access. Implement data consumption thresholds to prevent credential access threats.

Outcomes: The company can safely provide access to data through Tableau without fear of credential access threats. It continues to close security gaps by rolling out at-rest protection to ensure data does not get exposed.

Do any of these situations or roadblocks sound familiar to you? Is your company running into any governance or security challenges like these? We’d love to discuss how ALTR can help you overcome them and continue your journey to data insight on Snowflake.

One of the greatest things about working in product marketing is the ability to study the market you live in and the industry around it, and identify not just the trends affecting us today, but where things are heading.

In ALTR’s case, we straddle the environment between data governance and data security, an area that until recently has had some pretty distinct lines. Over the past year, we’ve seen a shifting of these lines that is giving us some important data about the way organizations are evolving their data management practices, especially around data governance and data security.

Historically, data governance has been mainly about policy: defining what data an organization needs to protect, how it should do it, who should have access to it, and more. Data security on the other hand has been about enforcement: controlling access to data; detecting, responding to and investigating potential threats; and preventing data breaches. The line here is pretty clear, but it gets a bit less clear when you dive into how you go from creating policy to actually enforcing that policy.

To go from policy creation to implementation (and then enforcement), governance, compliance, and even security teams have needed to pass the baton to other departments, oftentimes to data engineers as they are closest to the data and can implement controls. These teams then had to translate policy, apply it, maintain it, prove their controls were working on a regular basis, and revisit this whole process when new data sources were added into the mix.

When you step back to look at it, this process has a lot of gaps. To start off, no one person or team can do the job. Instead, it requires communication around what needs to be done (a problem that could use its own article), handoffs between departments, follow ups to ensure tasks have been completed, and audits after the fact to address the ever-present risks of human error. In larger organizations, you can imagine the sheer amount of time this absorbs.

To top this all off, there’s still the problem of threat detection and prevention. Organizations are trying to solve a seemingly simple problem today: controlling access to sensitive data at scale. However, the risks of granting unimpeded access to data are larger than ever. With new and changing privacy regulations like CCPA, you can now be fined up to thousands of dollars per record in the event of a data breach. In an organization with hundreds of millions of records or more, that number gets career-ending pretty quickly. Going forward, you need to control not just who can access what data. You also need to take context into account for each request, asking questions like “Why do you need it?”, “How much do you need?”, and the operational question of “How can I make this easy?”

The rise of governance platforms like OneTrust, Collibra, BigID, and Alation has made it easier to understand data and create governance policy. Unfortunately, a gap still exists in translating that policy into action.

In our conversations, we see forward-thinking organizations walking the logical path toward simplifying their data governance program by automating away the steps between policy creation and implementation. This would not only make managing their governance program easier, it would save time, money, and effort by removing manual steps and the reliance on multiple departments to implement and maintain policy. Bonus points if you can unify governance and security in a single platform by being able to detect and respond to threats as well.

The good news is that ALTR has built that single platform. ALTR’s tool automatically implements and enforces policy to control access to sensitive data while detecting and responding to potential threats. By integrating ALTR with your organization’s existing governance and security tools, you can automate away the creation/implementation gap.

I think you see where this is going, but how does it impact the relationship between data governance and data security? Well, if governance policies can be automatically applied, including who should have access to data, who owns access control?

Data governance and data security are tightly intertwined. One creates policy, the other enforces that policy, and there’s a gray implementation area in the middle where things have traditionally been blurry—where multiple departments had to work together in a painful, manual process. With automation, we see data governance taking ownership of the implementation role, subsequently moving access control into the governance realm, and helping clear up this complicated process. With this change, the focus of data security can move to actively monitoring for and responding to threats.

With this small shift, a huge opportunity opens up. By automating away the implementation process, everyone wins: data engineers save time, data access becomes simplified, multiple tasks prone to human error are eliminated, audits are easier to perform, and you can just plain move faster.

Automated governance policy. Unified governance and security. Open access policies so data consumers have access to the data they need while you stay confident in its privacy, security, and risk. This is exactly what needs to happen for companies to succeed in the years to come. It’s also exactly what we’re here for.

Interested in how ALTR can help simplify your data governance program through automation? Request a demo here.


Everyone who manages data today is a hero to their organization. They’re on the leading edge of the company: pulling the data streams together, ensuring the quality is high, and enabling the rest of the business to utilize data for insights and value. When we talk about “data-driven” companies, data scientists, database administrators, data analysts, and governance, compliance, and security teams are the drivers.

Because of this, they’re also on the leading edge of ensuring that sensitive and private data is safe and secure from prying eyes, wherever it is.

So, in honor of Data Privacy Week and international Data Privacy Day on Friday, January 28, 2022, we wanted to celebrate what they do. See how they save the day, every day…

Data scientists, data analysts, database administrators – these are your Data Wizards. What they do with data can look like magic to the untrained eye. But even magic isn’t always safe, especially when it comes to moving sensitive data to the cloud. See the trouble they face and how they lift the spell in… “The Data Wizard and the Curse of the Sensitive Data.”

Someone has to make sure the organization is complying with data privacy regulations and only the necessary folks have access to sensitive data. Those doing the work of data governance and compliance are your Data Watchers. As more and more people throughout the company understand the value data can deliver, it gets harder and harder to guard the gate. See how they overcome this challenge in… “The Data Watcher and the Invasion of the Data Snatchers.”

As data makes its way throughout the organization and the IT ecosystem – both in company-owned datacenters and in the public cloud – sensitive data is a rich target for thieves, hackers, and bad actors. Your Data Warriors keep the bandits at bay. And if someone sneaks through, they can rely on ALTR to help. See how they secure sensitive data, no matter where it is, in… “The Data Warrior and the Battle of the Data Road.”

As a society, we’ve been barreling down the path to being completely “data-driven” for the last 10 to 20 years. We’ve produced and collected massive amounts of data and rapidly built up an ecosystem of technological conveniences on that data foundation, without thinking about the new risks that brings. But looming over the horizon are data privacy and security challenges so large that if we don’t solve them, we could actually regress from a technological perspective. All the conveniences we take for granted require that those hoards of data stay safe and secure. If we don’t halt those hazards in their tracks, they’ll only get worse, until the only choice is to lock down data, putting all the progress we’ve made at risk. In other words, we’re way out over our skis right now.

At ALTR, we’re committed to doing whatever it takes to overcome this challenge. Our mission is to solve for the root problems of data privacy and security and give everyone the tools to crush them.

We don’t believe high costs, long implementations, and big resource requirements should stop companies from controlling and protecting their data. And unlike many other companies in the data control and protection space, we’re not a legacy technology built for the datacenter and awkwardly, bulkily, expensively transitioned to the cloud. From our beginning, we saw the potential and power of building on the cloud, and as a cloud-native SaaS provider, we don’t have the overhead expenses others do. This means we can utilize advanced cloud technologies to solve these difficult problems and provide solutions to customers on cloud data platforms easily, quickly and at a very low cost.

And that’s what we’re doing. Today, we’re announcing three new plans that deliver simple, complete control over data. This includes our Free plan—the first and only in the market—which gives companies powerful data control and protection for free, for life, starting on Snowflake. Just like Snowflake is democratizing cloud data access, ALTR is democratizing cloud data governance. We’re freeing data governance so that everyone can control and protect their data.

One of the reasons companies struggle to tackle the data privacy and security challenge is that they leave understanding who is consuming what data and why until later. With other solutions, it’s too expensive or too resource-intensive, so instead of adopting data governance early, as a preventative action, companies wait to understand and manage data usage until it becomes required to comply with regulations, or worse, they have a privacy or security incident.

ALTR’s Free plan clears away those objections, supporting companies’ initial forays into Snowflake by enabling easy yet powerful data control and protection from the start, with no cost and no commitment. Our new data usage analytics capability shows who your top Snowflake users are and gives clear visibility into what and how much data they’re consuming. This allows you to better understand what normal is, create controls based on necessary usage, and quickly identify and investigate anomalies. At a high level, this intelligence will help you assess the value of your Snowflake project, plan your future roadmap, and put you in a better position to solve problems that might arise later.
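As a rough illustration of what “top users” and “how much data they’re consuming” mean in practice, the Python sketch below aggregates a simple access log by user. The log format (a user name plus the rows returned by one query) and the `top_consumers` function are assumptions made for illustration; they are not ALTR's data usage analytics or Snowflake's query history schema.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple

# Hypothetical log entry: (user, rows_returned) for one query.
LogEntry = Tuple[str, int]


def top_consumers(log: Iterable[LogEntry], n: int = 3) -> List[Tuple[str, int, int]]:
    """Return (user, total_rows, query_count) for the n heaviest users by rows."""
    rows = defaultdict(int)
    queries = defaultdict(int)
    for user, returned in log:
        rows[user] += returned
        queries[user] += 1
    # Rank users by total rows consumed, largest first.
    ranked = sorted(rows, key=lambda u: rows[u], reverse=True)
    return [(u, rows[u], queries[u]) for u in ranked[:n]]


if __name__ == "__main__":
    log = [
        ("analyst_a", 1_200),
        ("etl_service", 500_000),
        ("analyst_b", 300),
        ("analyst_a", 4_800),
        ("etl_service", 450_000),
    ]
    for user, total, count in top_consumers(log, n=2):
        print(f"{user}: {total:,} rows across {count} queries")
```

A per-user baseline like this is one simple way to start describing what normal looks like before writing consumption thresholds against it.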

The ALTR Free plan is available here on our website or now as the first complete data control and protection solution via Snowflake Partner Connect.

As you mature in your cloud data platform journey, better understand your use cases, and start thinking about adding sensitive data, you may want to upgrade to ALTR Enterprise for expanded access controls, compliance support, and enterprise integrations. Once you’re more advanced and have migrated all your sensitive data, you may be stuck manually implementing governance features, which can make the cost of ownership and maintenance very high. ALTR Enterprise Plus can help you automate to save time and scale more easily, utilize powerful data security features like tokenization, and get the support of our experienced customer success team.

Data governance tools that are too costly, require too much time and too many resources to implement and maintain are crippling our ability to take on the big data privacy and security problems we know we must in order to continue our pace of technological advancement. Leaving data at risk is just not sustainable for anyone. ALTR's release today is the next step in our journey to solve these problems and deliver formidable solutions that are easy to use and easy to buy – so everyone can control and protect their data, wherever they are on their data journey.

I think it’s safe to say that everyone in the technology industry was shocked by last week’s Amazon Web Services outage. As one of the major backbones of cloud-based Internet services, Amazon’s issues affected everything from Disney+, Netflix, and Roku streaming to services such as Venmo and CashApp to the company’s own delivery drivers. Although this was unprecedented for Amazon, it was definitely a wake-up call to anyone who relies on cloud hosting services. And it underscored the need for true SaaS-based, multi-region “high availability” solutions to support resiliency in modern data architectures.

According to Amazon, the issue originated in its US-East-1 region in Virginia. Of course, Amazon’s services include high availability within its East, West and other global regions, so if there’s an issue in one datacenter or zone within a single region, workloads can be routed to different locations within the same region to ensure uptime. This incident was so shocking because the number of core services affected in a single region increased the blast radius so significantly.

Yet it might not qualify as a true “disaster” as defined in most disaster recovery planning. Those are natural events (hurricanes, tornadoes, tsunamis) or man-made events (accidental/intentional, terrorism, hacking) that cause a long-term disruption to the business. In this case, there was no natural event or obvious intentional sabotage that would clearly impact availability longer term. While companies could have implemented their disaster recovery plans, doing so would have come with costs: potential loss of a limited amount of data, absorption of employee resources to implement the plan, and the time necessary to reverse the changes once the incident had passed, if it wasn’t permanent. There was no way to know how temporary or long-lasting this incident might be, so executing a disaster recovery plan could have placed an additional and unnecessary burden on the business.

That still leaves a gap between normal operations and full-on disaster recovery – a gap that can cause major issues. Many software companies today pride themselves on the “five nines” – 99.999% uptime in a given year. Some even include that SLA in their contracts. Even though this incident lasted less than a day, it was potentially enough to drop affected companies’ uptime for 2021 from five nines to three: 99.9%. In a single incident, they lost two orders of magnitude: five nines allow only about five minutes of downtime a year, while three nines allow nearly nine hours.
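The arithmetic behind “five nines to three” is easy to check. This Python sketch computes the yearly downtime budget for an availability target and the availability left after a single outage. The 7-hour outage length is an assumption chosen for illustration; the text only says the incident lasted less than a day.

```python
import math

HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year such as 2021


def downtime_budget_hours(availability: float) -> float:
    """Maximum yearly downtime, in hours, permitted by an availability target."""
    return (1.0 - availability) * HOURS_PER_YEAR


def availability_after_outage(outage_hours: float) -> float:
    """Yearly availability if a single outage is the only downtime."""
    return 1.0 - outage_hours / HOURS_PER_YEAR


def nines(availability: float) -> int:
    """Count whole 'nines' of availability, e.g. 0.999 -> 3, 0.99999 -> 5."""
    if availability >= 1.0:
        raise ValueError("availability must be below 100%")
    # Rounding before flooring absorbs floating-point error such as 2.9999999999999996.
    return math.floor(round(-math.log10(1.0 - availability), 9))


if __name__ == "__main__":
    print(f"Five nines allow {downtime_budget_hours(0.99999) * 60:.2f} minutes/year")
    print(f"Three nines allow {downtime_budget_hours(0.999):.2f} hours/year")
    outage = 7.0  # hypothetical outage length in hours
    a = availability_after_outage(outage)
    print(f"After a {outage:g}-hour outage: {a:.5%} ({nines(a)} nines)")
```

The ratio of the two budgets, roughly 8.76 hours versus roughly 5.26 minutes, is the two orders of magnitude mentioned above; any single outage between about 53 minutes and 8.76 hours leaves a year at three nines.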

For modern enterprises who rely on data to run their internal operations, uptime and availability are just as important, and in the modern data architecture, the entire system is only as reliable as its weakest link. This is especially critical right now for industries like banking where the movement of money, and in fact the entire system, relies on the flow of data. But this will become increasingly important across all industries as data becomes more and more essential to core business functions.

That means when you’re picking your database, your ETL provider, and your BI tools, availability needs to be a key “non-functional” factor you evaluate as part of your buying process along with any features you need. When it comes to data control and security solutions, ALTR’s availability is unmatched. Because we interact with and follow data from on-prem through the ETL process to the cloud, we’re in the critical path of data, making it crucial that our service keep up with the rest of the data ecosystem. Our answer is multi-region, high availability built into normal operations via a true multi-tenant SaaS solution. Because SaaS allows economies of scale, we don’t have to build and maintain dedicated single-customer infrastructure in multiple geographic regions. We just have to construct ALTR infrastructure in multiple geographies for all our customers to leverage, significantly reducing cost and complexity.

This incident highlighted a weakness in resiliency planning: what happens when something less than a disaster causes a significant disruption to the business? We don’t think the uptime guarantees many have trusted to date are strong enough for today’s business environment, especially for business-critical data infrastructure. The future is high availability based on multi-region redundancy – we expect this to be a broad and consistent theme across industries. And for essential data control and security, that means ALTR.

Cloud computing has disrupted just about every single industry since its inception in the mid-2000s, and financial services are no exception. According to a 2020 Accenture report, banking is just behind industries like ecommerce/retail, high tech electronics, and pharma/life sciences on the cloud adoption maturity curve.

In fact, the average bank has 58 percent of its workloads in the cloud, but the majority run on private rather than public cloud which limits the cost-savings and benefits. The emphasis on private cloud may be due to the unique challenges the banking industry faces, including meeting stringent regulatory requirements on infrastructure they don’t own. And for banks that have made no move to the cloud, investments in legacy systems and the difficulty transitioning to the cloud may be an additional roadblock.

For smaller and midsize banks in the sub-$10B asset class, the decision to move to the cloud can be even trickier. They may feel pressure from their boards to adopt this powerful new technology while also being strongly reminded to do so safely! Regional banks often build their reputations on the trust of local communities, which makes any change that could damage that trust an enormous risk. At the same time, they may be competing with larger players that have adopted the cloud at a rapid pace to provide cutting-edge services to consumers. Capital One became the first major bank to go cloud-only when it closed its own datacenters entirely and moved all operations to AWS public cloud. Accenture found that moving swiftly to the cloud is paying off for banking “cloud leaders”—they’re growing revenue twice as fast as the “laggards”.

So, it’s clear that the move to the cloud is coming for even smaller banks, but where to start? We suggest taking a look at your enterprise data warehouse.

For banks that need to minimize risk, are unsure how to make the move safely and properly, may not have a CISO or even a dedicated security team, and whose IT teams are focused on managing their own iron in their own datacenters, taking the first step to the cloud can seem like a heavy lift. It doesn’t have to be.

You can start small with your enterprise data warehouse. This is often a SQL server in your own datacenter where you collect a daily or weekly data dump from your core systems. It may contain some sensitive data that’s accessed by various groups around the company via business intelligence tools like PowerBI, Qlik or Tableau installed on user desktops. Marketing for example, might run zip code reports on deposits in order to make targeted offers on mortgages or car loans.

The key is that the data is already consolidated and it’s not core software – that reduces both the complexity and the risk. Moving this workload to Snowflake with ALTR data governance and security is fairly straightforward and provides several interesting advantages for small and mid-size financial institutions:

Migrating your enterprise data warehouse workload to Snowflake with ALTR essentially gives you a “secure cloud data warehouse-in-a-box”. You can take on this discrete pilot project with minimal investment of time, resources, cost, and risk, and learn how the cloud works best for your financial institution. This allows you to start operationalizing your process for moving additional workloads to the cloud safely.

In addition to the experience gained, there can be measurable results. Accenture estimates that when financial institutions move data warehousing and reporting workloads to the cloud they could see a 20-60% reduction in costs, increased operational efficiencies, improved real-time data availability, and better data governance and lineage.

Not bad for your first adventure into the cloud.

Everybody is doing it: the cloud data migration. Whether you call your project “data re-platform,” “data modernization,” “cloud data warehouse adoption,” “moving data to the cloud,” or any of the other hot buzz phrases, the idea is the same: move data from multiple on-prem and SaaS-based systems and data storage into a centralized cloud data warehouse where you can use that data to spend less money or make more money. In other words, the goal of consolidating into a cloud data warehouse is, at its core, to save company money on cost of goods sold or grow company revenue streams.

What’s not to love? But one of the tradeoffs is that, as you go through the process, you end up losing the full visibility and control you had over data in your on-prem systems, leading to cloud migration risks and cloud data migration challenges you might not expect.

We see this concern about visibility and control come up over and over, at every stage of the cloud data migration journey – from CIOs, CISOs, and CDOs who are accountable for making sure data stays secure, to the leaders who must address this in the overall project within the given budget, until it finally lands on the Data Engineers and DBAs who must decide how they’ll fix this and pick the actual solution. Here's how to mitigate cloud migration risks...

Putting your cloud data migration project on rails out of the gate means you can move more quickly, more securely. Even if you’re going 100 MPH with data, you can be sure you’re not going to:

How do you do that? No matter if you’re using an ETL or Snowpipe to transfer data, choosing a cloud-hosted BI (Business Intelligence) tool or an on-prem solution to run analytics, doing it all with visibility, control, and protection in place from the very moment you begin will mean that your continuous data path from on-prem to the cloud will be safe, speedy and secure.

And those pesky cloud data migration challenges that could pop up at each stage won’t slow you down or derail the project:

Whether it’s Snowflake, Amazon Redshift, Google, or another cloud data warehouse, you’ll need to answer these to get your cloud data migration project off the ground…

Again, whether you’re looking at Tableau, Qlik, PowerBI or other, similar operational questions will come up…

When moving the actual data from data sources to the new cloud data warehouse, if these questions don’t come up, they should…

Data “brains”, like Collibra, OneTrust, and Alation, have all the information about users, data itself, data classifications and the policies that should be placed on user data access. What they don’t have is a way to operationalize that policy.

If you can be prepared to answer these questions from the start, you can avoid cloud migration risks, overcome cloud data migration challenges, and the good news will travel back up the line very, very quickly to the executives who need to ensure that data visibility, control and protection are covered.

ALTR is here to help you solve the problem at a very low cost, with a low-friction implementation, and a short time to value. You can start with ALTR’s free plan today and upgrade when you have too many users and too much data to govern at scale without an automated data control and policy enforcement solution like ALTR.

Since Salesforce launched at the end of the last century, the cloud application boom has been unstoppable. Along with that has come another boom: cloud-hosted data. The rise of digital transformation, as well as other trends like mobile and IoT, has led to a massive increase in the amount of data created. In fact, 64.2 zettabytes of data were created or replicated in 2020, according to IDC. A zettabyte is 10^21 bytes, so that’s more than 6 × 10^22 bytes of data!

Now, all that data represents a rich resource of knowledge to business – from where consumers visit online to how companies make purchases. And the best way to get value from it is to consolidate the multitude of data points and put machine learning, AI or Big Data tools on top of it to connect the dots. This data analysis can either be done in an on-premises data warehouse or in the cloud. Doing it in the cloud delivers some compelling benefits including virtually unlimited scalability with no costs for infrastructure investment and lower ongoing maintenance. The attractiveness of the cloud data warehouse model is one of the reasons Snowflake debuted with the biggest software IPO ever in 2020.

But consolidating all this data, especially sensitive data, into the cloud creates a serious challenge for Chief Information Security Officers (CISOs): how can they be 100% responsible for data security when they have 0% control over the infrastructure where it’s stored?

CISOs and their security teams had their roles nailed down: secure the datacenters with firewalls, stop employees from clicking on phishing emails or accessing malware-infected websites, and protect the company perimeter from hackers and outside threats. These were tactics meant to deliver specific and important end results: keep the network safe and protect company data. But this is a perimeter defense model, the very approach Forrester Research’s “Zero Trust” framework was created to move beyond, and it does not apply to the “perimeter-less” cloud.

But today, a Chief Marketing Officer (CMO) may look at the rich data streams moving throughout the company, generated by 15 or 20 different applications, with hundreds of data points about customers and prospects, and make the argument that if only that data were combined, it could deliver a minutely detailed composite of individual users and buyers – and marketing could raise revenue by 8%.

The CMO gets the go ahead to move that data to Snowflake, but where does that leave the CISO? Suddenly, the data is in an environment he or she doesn’t control. Increasingly the business project is taking a much higher priority and security is trying to catch up. The CISO is still responsible for securing data that’s been moved outside the nice, cozy, protected perimeter the security team has spent years perfecting. If there’s a data breach, they’re still on the hook, they could still get fired, but how can they stop that if they don’t control the space?

Think of it like a parent who lets their children stay overnight at a friend’s house. The parent is still responsible for the child’s safety, so shouldn’t they ask the friend’s parents some questions? Find out about the culture of the home? Who the parents’ friends are? What kind of rules they impose? The parent doesn’t stop being responsible or stop worrying once their child leaves the home. And they certainly don’t lock their children up at home in order to “keep them safe” – that’s not reasonable.

Some CISOs and Chief Risk Officers try to maintain control by placing stringent rules around how data can be stored and used in cloud data warehouses. I’m aware of one that allows sensitive data to be stored in Snowflake only when it is encrypted or tokenized. To be used or operated on, the data has to be moved into a secure on-prem environment the CISO controls, decrypted or detokenized, used, then re-encrypted or re-tokenized before being transferred back to Snowflake.

It may be secure, but it’s like making your child come home to ask permission before playing a game or having a snack at the sleepover. It’s really clunky and slows things down. Some security execs are jumping through a lot of hoops to overcome this accountability and control mismatch.

Others are simply abdicating control and trusting cloud data warehouse providers. This leaves a hole in security: these providers have taken over responsibility for maintaining the infrastructure, the perimeter, and the physical space, but they’re not taking on responsibility for user identity and access or for the data itself. That still resides with the company, and especially with the CISO. To be clear, Snowflake is very secure, but the more successful it becomes, the bigger a target it is for bad actors, especially nation-states.

This shift to the cloud really requires a shift in the security mindset: from perimeter-centric to data-centric security. It means CISOs and security teams need to stop thinking about hardware, datacenters, and perimeters and start focusing on the end goal: protecting the data itself. They need to embrace data governance and security policies around data. They need to understand who should have access to the data, understand how data is used, and place controls and protections around data access. They should look for a combined data governance and security solution that delivers complete data control and protection.

Because bad actors don’t care who’s responsible: they’re going where the data is and taking advantage of any holes they find. The 2021 Verizon Data Breach Investigations Report (DBIR) showed this clearly: in that report, 73 percent of cybersecurity incidents involved external cloud assets. This is a complete reversal from 2019, when cloud assets were involved in only 27 percent of breaches.

Regulators also don’t care where data is when it comes to responsibility for keeping it safe: that responsibility sits with the company that collects it. Larger companies in heavily regulated industries face large, punitive fines if there’s a data leak, which can lead to severe consequences for the business…and for the CISOs responsible.

If CISOs want to not only catch up to but get ahead of business priorities, bad actors, and regulatory requirements, they need to focus on controlling, protecting and minimizing risk to data—wherever it is.


ALTR’s origin story, like that of a lot of companies, starts with pain. In the early 2010s a group of engineers working at a technology company in the options trading space found themselves contending with problems that had no solutions.

They held data that could very easily and quickly be used for personal financial gain by a thief, and they had no tamperproof records of who was accessing it. They had no way to control that access in real time. And worst of all, they had no feasible way to protect data from those who would log straight into their required co-located servers to steal it – while still keeping the data functional for the business.

These solutions weren’t missing because no one had thought of them. At the time, database access monitoring appliances and encryption devices with sophisticated key management were already available.

The problem was that the world had changed.

Like Flynn, Jeff Bridges’ character in the classic movie TRON, who has his body digitized and finds himself inside a computer, the world of connected computing had started to detach from its physical roots. Virtualization meant that a computing workload might exist on any device, and the onset of cloud computing and the massive proliferation of mobile devices meant that it might not be on a device that was identifiable.

A security model built on a solid physical network topology with endpoints, routers, switches, and servers was melting away, and with it the first generation of security solutions that were built for that universe.

Taking its place was a logical model: identities that could be in a café in Turkey or at home in Austin, or hurtling across the sky at hundreds of miles per hour (as the humble author of this post is, right now), accessing workloads and data that also might be anywhere, inside an “availability zone” instead of on a known server.

This new universe arrived very quickly, bringing new threats with it and opening a crack between itself and the old one that is literally leaking data. That’s because many are trying to fight the new threats with the old tools, looking for the power plug to pull from the wall or the hard drive to wipe when there just isn’t one.

Jeff Bridges didn’t beat the evil Master Control Program by rejecting how his world had suddenly and completely changed. He went with the flow, man, and beat it at its own game.

ALTR’s products are designed for this new world, but this blog isn’t about them. We have a whole website dedicated to that. It’s about exploring the corners of this new universe, highlighting the best ideas from those around the computing and security worlds, and adding our own voice to the conversation. We hope you’ll follow along.

Here in Colorado, it’s just about winter sports season. And that means I’m thinking about making the drive up to Summit County to take advantage of some of the best skiing anywhere. The destination is completely worth it, but the road is not without its potential risks: slippery inclines, dramatic switchbacks, snow drifts, and 18-wheelers barreling through the weather toward the West Coast.

Businesses today face a similar situation: the ability to use data to gather insights across the enterprise is an exhilarating goal, even though getting there can require overcoming some hazards. Many enterprises are moving company information into cloud data platforms like Snowflake in big data analytics projects that take advantage of scalable storage, accessible compute power, and integrations with cloud-based BI tools for a sophisticated view of every part of the business. To get the full picture though, sensitive data must often be included. Whether that’s personal customer data or highly restricted business information, uploading and utilizing that sensitive data creates a risk due to privacy regulations and confidentiality concerns.

But just like the drive up the mountain, there are technologies that can make it easier and safer for companies to make the journey. Here are three examples where ALTR’s technology can help companies reach their big data analytics goals:

The CFO of a logistics company wants to determine the actual costs to serve their customers in order to better align pricing and improve margins. They pull operational data, inventory management, warehousing, and fuel and vehicle maintenance costs into Snowflake. But this doesn't provide a full picture without including the costs of the people doing the work. The last key piece of data is compensation information for each employee involved in delivering the products. However, unlike the other information, this is highly sensitive information about what each individual employee gets paid, along with their banking info. Putting it into Snowflake means that it could be accessible to some employees outside the finance and HR teams, such as the Snowflake admin.

ALTR automates the handling of this data and makes it easy, and because ALTR sits outside Snowflake, we’re able to create a secure mechanism that delivers an alert every time that sensitive data is accessed, by anyone. The logistics company is able to utilize all the required information, even private payroll data, to accomplish their big data analytics goal.

The CMO of a consumer goods company wants to get a holistic view of its customers and their buying behavior, but data is spread across multiple on-premises and cloud-based systems: Salesforce, Marketo, eCommerce sites, backend ERP systems, and customer behavior analytics tools. To tie demographic information about specific buyers to their online activity and buying activity, the data all needs to be in one place with at least one common value, usually a piece of PII (name, email, SSN). With this, marketing teams can look for buying indicators in localized regions: perhaps a mom looked at a specific blog post before purchasing diapers in Austin, TX. Maybe that’s a pattern: several moms looked at that post before buying diapers in Austin. Then marketing can use that insight to promote that blog post to other moms in Austin to drive similar purchases.
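The consolidation step in this example hinges on a shared key. As a rough illustration only, the Python sketch below joins hypothetical extracts from three systems on a normalized email address. The field names, the sample rows, and the `join_on_email` helper are all invented for this example; in Snowflake the same idea would normally be a SQL join rather than application code.

```python
from collections import defaultdict


def normalize_email(email: str) -> str:
    """Trim and lower-case so the same address matches across systems."""
    return email.strip().lower()


def join_on_email(*sources: list[dict]) -> dict[str, dict]:
    """Merge rows from several sources into one profile per normalized email.

    Hypothetical helper: every row is assumed to carry an "email" field.
    Later sources add fields; they overwrite only keys shared with earlier ones.
    """
    profiles: dict[str, dict] = defaultdict(dict)
    for rows in sources:
        for row in rows:
            key = normalize_email(row["email"])
            profiles[key].update({k: v for k, v in row.items() if k != "email"})
    return dict(profiles)


# Hypothetical extracts from a CRM, web analytics, and an order system.
crm = [{"email": "Ana@Example.com", "city": "Austin, TX", "segment": "parent"}]
web = [{"email": "ana@example.com ", "viewed_post": "newborn-checklist"}]
orders = [{"email": "ana@example.com", "last_purchase": "diapers"}]

profiles = join_on_email(crm, web, orders)
print(profiles["ana@example.com"])
```

Note that the email address does double duty here: it is both the PII that needs protecting and the value that makes the join possible, which is why controlling access to it matters so much.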

ALTR allows companies to protect sensitive PII data easily in Snowflake. You can find and classify personal information, see how it’s being used, then set policies to control access and limit consumption in the event of a policy infringement. Marketing teams can safely (and in compliance with privacy regulations) use sensitive data to do multivariate analysis, create an accurate model of customer behavior, and uncover opportunities to grow sales.

As the value of data analytics has grown, the number of people across the business who have or want access has grown in tandem. It’s no longer a handful of data engineers or analysts who can peer deep into every corner of the business, but everyone from marketing to finance to HR to engineering to sales who wants to access operational, sales, marketing, or finance data to make better decisions. A sales manager may want to get a better view into her territory, looking at past sales and annual trends or hot industries to find opportunities for the next quarter. All of the data to drive these insights will have to be consolidated into a single repository like Snowflake for cross-referencing, along with the same data for every other sales territory. To make her territory data available to that sales manager, the data for other territories must be made unavailable to her so that it stays confidential.

ALTR enables companies to easily set access policies based on role for any data deemed sensitive or confidential. That means your database admin can ensure each sales manager – or any other role in the company – only has visibility into the information they need to do their jobs better. The ability to easily control access means data can be made more freely available.
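To make the idea of role-based visibility concrete, here is a minimal Python sketch of a default-deny row filter. The `User` type, the role names, the `POLICIES` table, and the sample rows are hypothetical and are not ALTR’s or Snowflake’s API; in Snowflake itself, a rule like this is typically expressed as a row access policy.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class User:
    name: str
    role: str
    territory: Optional[str] = None  # only meaningful for sales managers


# A policy decides, per user and per row, whether the row is visible.
Policy = Callable[[User, dict], bool]

# Hypothetical policy table keyed by role name.
POLICIES: dict[str, Policy] = {
    "sales_manager": lambda user, row: row["territory"] == user.territory,
    "sales_ops": lambda user, row: True,  # sees every territory
}

SALES_ROWS = [
    {"territory": "West", "quarter": "Q1", "revenue": 120_000},
    {"territory": "East", "quarter": "Q1", "revenue": 95_000},
    {"territory": "West", "quarter": "Q2", "revenue": 130_000},
]


def visible_rows(user: User, rows: list[dict]) -> list[dict]:
    """Return only the rows the user's role allows; unknown roles see nothing."""
    policy = POLICIES.get(user.role)
    if policy is None:
        return []
    return [row for row in rows if policy(user, row)]


west_mgr = User("Dana", "sales_manager", territory="West")
print(visible_rows(west_mgr, SALES_ROWS))
```

Roles without a policy fall through to an empty result, so a missing rule hides data rather than exposing it.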

Think of ALTR as a set of snow tires you put on at the beginning of the season, as you head into sensitive data territory. They’re easy to install and equipped to help you make the journey up the mountain whenever you’re ready to go. And the best part is that once you have them, you can stop thinking about the risks and concentrate on the amazing view.