Format-Preserving Encryption: A Deep Dive into FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges associated with using padding in FPE.

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption mode specified by the National Institute of Standards and Technology (NIST). It is defined in the Revision 1 draft of NIST Special Publication 800-38G, which revised the original FF3 mode, and it is built on a Feistel network, a structure widely used in block cipher design, with AES serving as the round function. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves (for FF3-1, the first ⌈n/2⌉ characters and the remaining ⌊n/2⌋) and processes them through multiple rounds. In each round, a keyed round function is applied to one half, and its output is used to update the other half.

2. Rounds: FF3-1 uses exactly 8 rounds. Each round applies the round function to one half of the data and combines the result with the other half using modular addition (addition modulo radix^m, where m is the length of that half) rather than XOR, so the result stays within the allowed character set. The halves then swap roles, and the process repeats.

3. Key Usage: Unlike many Feistel ciphers, FF3-1 does not derive a separate subkey for each round. Every round invokes AES under the same key (FF3-1 reverses the key’s byte order before use); the rounds are distinguished by the round number and by which half of the tweak is mixed in.

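4. Tweakable Block Cipher: FF3-1 is tweakable: alongside the key, it takes a 56-bit public input called a tweak, which is split into two halves that alternate between rounds. Encrypting the same plaintext under different tweaks yields different ciphertexts, so identical values used in different contexts do not produce identical ciphertexts. FF3-1 shortened FF3’s 64-bit tweak to 56 bits in response to a published attack on FF3.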

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.
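To make items 4 and 5 concrete, here is a minimal Python sketch of two input checks an FF3-1 implementation performs, written from our reading of the NIST SP 800-38G Rev. 1 draft: the length limits that define the format-preserving domain for a given radix, and the split of the 56-bit tweak into the two halves used by alternating rounds. The function names are illustrative and not taken from any particular library.

```python
MIN_DOMAIN_SIZE = 1_000_000  # radix**minlen must be at least this (SP 800-38G Rev. 1 draft)


def ff3_1_length_bounds(radix: int) -> tuple[int, int]:
    """Return (minlen, maxlen) for FF3-1 numeral strings in the given radix."""
    if not 2 <= radix <= 2 ** 16:
        raise ValueError("FF3-1 radix must be between 2 and 65536")
    # minlen: smallest length (at least 2) whose domain radix**minlen reaches one million values
    minlen = 2
    while radix ** minlen < MIN_DOMAIN_SIZE:
        minlen += 1
    # maxlen = 2 * floor(log_radix(2**96)), computed with integers to avoid float error
    k = 0
    while radix ** (k + 1) <= 2 ** 96:
        k += 1
    return minlen, 2 * k


def split_tweak_56(tweak: bytes) -> tuple[bytes, bytes]:
    """Split a 7-byte (56-bit) FF3-1 tweak into the 4-byte halves T_L and T_R."""
    if len(tweak) != 7:
        raise ValueError("FF3-1 tweaks are exactly 56 bits (7 bytes)")
    # T_L = first 28 bits of T, followed by four zero bits
    t_left = bytes([tweak[0], tweak[1], tweak[2], tweak[3] & 0xF0])
    # T_R = last 24 bits of T, then bits 28..31 of T, then four zero bits
    t_right = bytes([tweak[4], tweak[5], tweak[6], (tweak[3] & 0x0F) << 4])
    return t_left, t_right


print(ff3_1_length_bounds(10))  # (6, 56): a 4-digit PIN is too short to encrypt without padding
```

For decimal data, anything shorter than 6 digits falls outside what FF3-1 accepts, which is exactly the gap the padding discussion later in this post addresses.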

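The encryption process itself proceeds as follows:

1. Initialization: The plaintext is divided into two halves, A (the first ⌈n/2⌉ characters) and B (the rest), and the 56-bit tweak is split into a left half T_L and a right half T_R. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.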

2. Round Function: In each round i (counting from 0), the round function takes one half of the data (B), together with T_R in even rounds or T_L in odd rounds XORed with i, packs them into a 16-byte block, and encrypts that block with AES under the key. The AES output, read as a 128-bit integer, is the pseudorandom round value.

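3. Combining Halves: The round value is added to the numeric value of the other half (A) modulo radix^m, where m is the length of A, and the sum is written back as a numeral string of length m. This modular addition, not XOR, keeps every intermediate value within the plaintext’s alphabet. The halves are then swapped, and the process repeats for all 8 rounds.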

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
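The steps above can be sketched in Python. This is a toy, not a conforming FF3-1 implementation: FF3-1 calls AES (with a byte-reversed key) as its round function, and here HMAC-SHA256 truncated to 16 bytes stands in for AES so the example runs with only the standard library. The round structure follows our reading of the SP 800-38G Rev. 1 draft: halves of ⌈n/2⌉ and ⌊n/2⌋ characters, eight rounds, alternating tweak halves XORed with the round number, the spec's digit and byte reversals, and modular addition in place of XOR. Do not use it to protect real data.

```python
import hashlib
import hmac

ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyz"  # this toy supports radix 2..36


def _num(s: str, radix: int) -> int:
    """NUM_radix: read a numeral string, most significant digit first."""
    value = 0
    for ch in s:
        value = value * radix + int(ch, radix)  # raises ValueError for characters outside the radix
    return value


def _str(value: int, radix: int, length: int) -> str:
    """STR^length_radix: write value as exactly `length` digits, most significant first."""
    out = []
    for _ in range(length):
        value, digit = divmod(value, radix)
        out.append(ALPHABET[digit])
    return "".join(reversed(out))


def _round_value(key: bytes, w: bytes, i: int, half: str, radix: int) -> int:
    """Round value y for round i.

    FF3-1 forms P = (W XOR [i]_4) || [NUM_radix(REV(half))]_12 and computes
    y = NUM(REVB(AES_{REVB(K)}(REVB(P)))). STAND-IN: HMAC-SHA256 truncated to
    16 bytes replaces AES so this toy runs on the standard library alone.
    """
    w_xor_i = (int.from_bytes(w, "big") ^ i).to_bytes(4, "big")
    p = w_xor_i + _num(half[::-1], radix).to_bytes(12, "big")
    s = hmac.new(key[::-1], p[::-1], hashlib.sha256).digest()[:16]
    return int.from_bytes(s[::-1], "big")


def toy_encrypt(key: bytes, t_left: bytes, t_right: bytes, x: str, radix: int = 10) -> str:
    u = (len(x) + 1) // 2  # A gets ceil(n/2) characters
    v = len(x) - u         # B gets floor(n/2) characters
    a, b = x[:u], x[u:]
    for i in range(8):
        m, w = (u, t_right) if i % 2 == 0 else (v, t_left)
        y = _round_value(key, w, i, b, radix)
        c = (_num(a[::-1], radix) + y) % radix ** m  # modular addition, not XOR
        a, b = b, _str(c, radix, m)[::-1]
    return a + b


def toy_decrypt(key: bytes, t_left: bytes, t_right: bytes, x: str, radix: int = 10) -> str:
    u = (len(x) + 1) // 2
    v = len(x) - u
    a, b = x[:u], x[u:]
    for i in reversed(range(8)):  # undo the rounds in reverse order
        m, w = (u, t_right) if i % 2 == 0 else (v, t_left)
        y = _round_value(key, w, i, a, radix)
        c = (_num(b[::-1], radix) - y) % radix ** m  # modular subtraction inverts the addition
        a, b = _str(c, radix, m)[::-1], a
    return a + b


key = b"demo key, not secret"
t_left, t_right = bytes.fromhex("abcdef10"), bytes.fromhex("34567820")
ct = toy_encrypt(key, t_left, t_right, "4111111111111111")
print(ct, toy_decrypt(key, t_left, t_right, ct))  # 16 digits, then the original card number
```

Because every round writes back a numeral string of the same length in the same radix, the output is guaranteed to have the plaintext's format, and decryption simply runs the rounds backwards with subtraction.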

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Low Performance Overhead: FPE algorithms like FF3-1 are designed to be efficient; FF3-1 needs only eight AES block operations per value, so the impact on system performance is minimal. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower-trust environments: Using a product like ALTR’s FPE, data engineers can use the ciphertext of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

Padding is a technique used in encryption to ensure that the plaintext data meets the minimum length the encryption algorithm requires. For FF3-1, the domain must contain at least one million possible values, which means at least 6 characters for decimal digits. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE:

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.
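As a minimal sketch of the idea, assuming a hypothetical scheme (not ALTR's) that left-pads short values with a fixed character up to the cipher's minimum length, padding and un-padding look like this. The key detail is that the original length has to be kept alongside the value; without it, "000042" could have come from "42", "042", or "0042".

```python
def pad_to_minlen(value: str, minlen: int, pad_char: str = "0") -> tuple[str, int]:
    """Left-pad a short value up to the cipher's minimum length (hypothetical scheme).

    Returns the padded string together with the original length, which whoever
    decrypts later needs in order to remove the padding again.
    """
    return value.rjust(minlen, pad_char), len(value)


def strip_padding(padded: str, original_len: int) -> str:
    """Undo pad_to_minlen by keeping only the last `original_len` characters."""
    return padded[len(padded) - original_len:]


padded, n = pad_to_minlen("0042", minlen=6)  # a 4-digit PIN with leading zeros
print(padded, n)                             # 000042 4
print(strip_padding(padded, n))              # 0042
```

Note that the ciphertext of a padded value is `minlen` characters long, so for these short values the stored ciphertext is longer than the original, and the original length has to travel with it (for example, as column metadata).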

Padding also introduces challenges:

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
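Challenge 2 is easy to underestimate. If a short value is padded deterministically, the cipher sees a minimum-length input, but the number of distinct inputs that can actually occur does not grow. The sketch below assumes fixed left-padding with "0" (a hypothetical scheme) and counts the padded values for a 3-digit decimal field.

```python
def padded_field_values(original_len: int, minlen: int) -> set[str]:
    """Every value a decimal field of `original_len` digits can take after fixed zero-padding."""
    return {str(n).zfill(original_len).rjust(minlen, "0") for n in range(10 ** original_len)}


minlen = 6                          # decimal minimum length for FF3-1
values = padded_field_values(3, minlen)
print(len(values), 10 ** minlen)    # 1000 1000000
```

The padded field still has only 1,000 possible values, far below the one-million domain size that FF3-1's minimum length is meant to guarantee, so an attacker who collects plaintext-ciphertext pairs needs at most 1,000 of them to build a complete codebook for that field under a given key and tweak.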

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

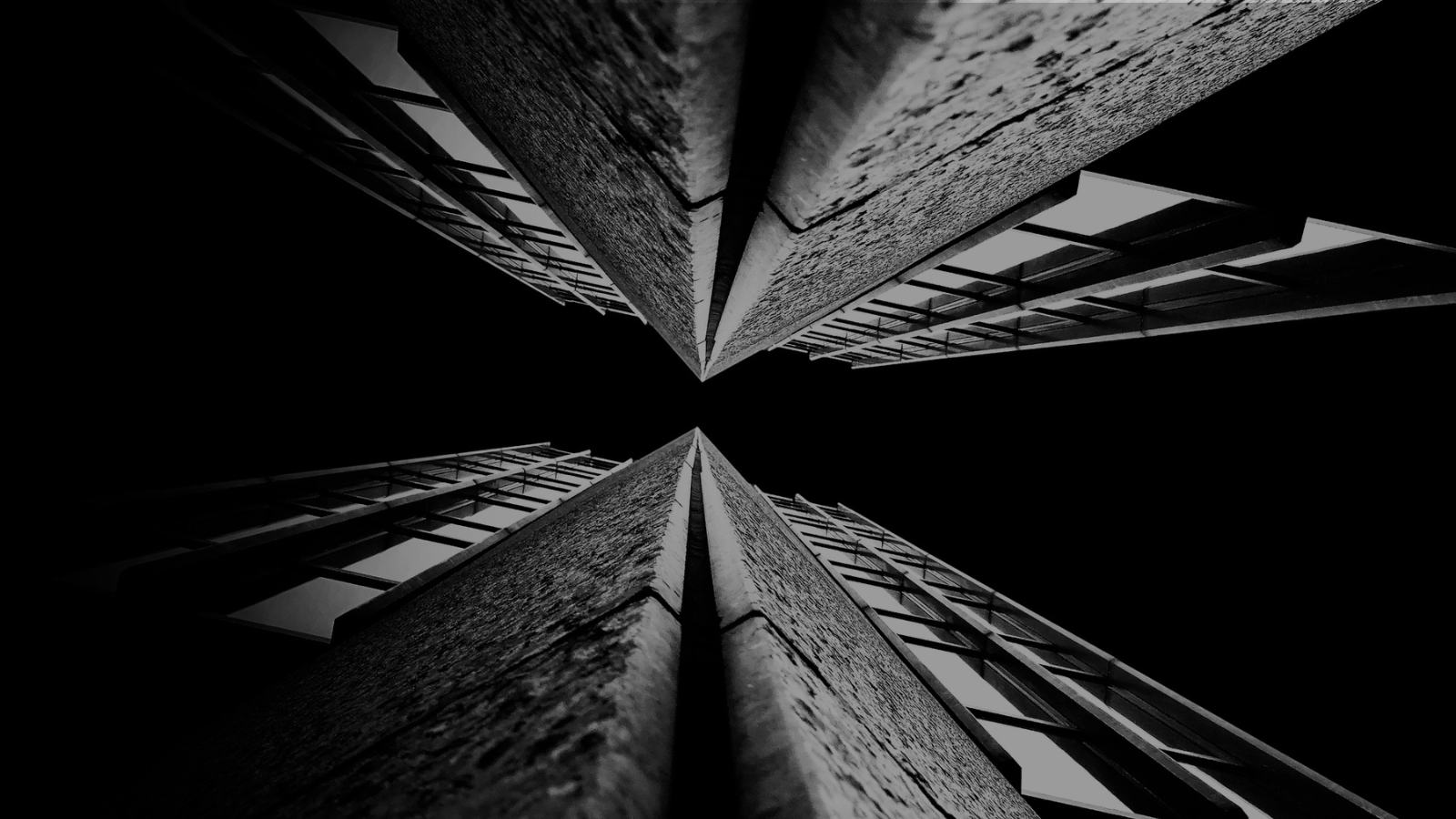

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still a nervous wreck. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI are causing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when the compute and storage are tightly coupled. Sharing data securely with trusted parties outside the walls of the data center is clunky at best and downright dangerous in most cases. And forget running your own GenAI models in your datacenter unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

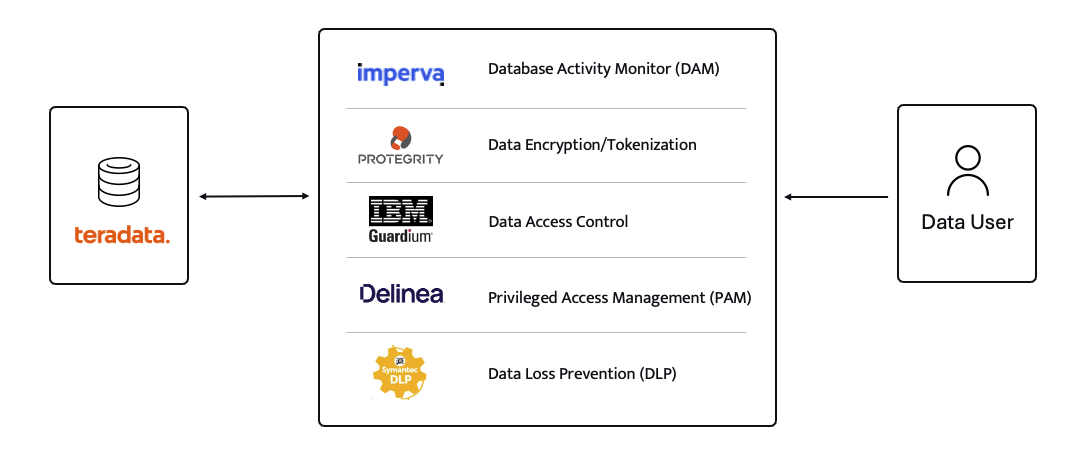

ALTR’s platform has been purpose-built to recreate and enhance the protections required to replace Teradata with Snowflake. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR for help with data security on Snowflake. Cool! It was a member of the Data & Analytics team who had tried our product and found love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

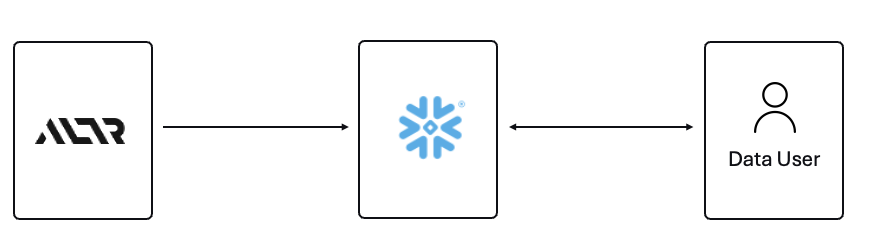

The CISO’s point of view was that ALTR’s security solution could be completely disabled or removed, and would not be helpful in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO: all of those things are possible. Here is what I would have said to the CISO if they had given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet powerful and long-reaching implications of its use:

One of the main points I would have liked to make to the CISO is that, as a Snowflake customer, securing the ACCOUNTADMIN role is squarely their responsibility. By now I’m sure you have all seen the news and responses to the compromised Snowflake accounts earlier this year. It has been shown that accounts left unsecured by Snowflake customers caused the data theft. There have been dozens of articles and recommendations on how to secure your Snowflake accounts, and Snowflake has even mandated minimum authentication standards for accounts going forward. You can read more here about securing the ACCOUNTADMIN role in Snowflake.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. This is the purpose of ALTR’s Data Security Platform, to help you get a handle on part of the problem which is so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly, and you must keep up.

2. You must be able to prove to the business that you have a least privileged access mechanism. Data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell-level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges. Terabytes of data and growing daily, more users with more applications, trusted third parties who want to collaborate with your data. All of this leads to an unmanageable set of internal processes that slow down the business and create risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt he had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team’s use of ALTR?” I don’t know the answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure and I am by no means saying ALTR can protect your Snowflake data 100% by using our platform. Data security is all about reducing risk. Control the things you can, monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015, the migration of corporate data to the cloud has rapidly accelerated. In 2015 an estimated 30% of corporate data was in the cloud; by 2022 that share had doubled to 60%, in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data has moved to the cloud, new challenges have presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. This brought new cloud computing cost structures and new skill sets with new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate with the move to the cloud. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to Cloud Data Warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation and what feels like every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need for it to be cataloged, analyzed, and used, with the promise of making our personal lives better at a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation then began, and world governments responded with regulations such as GDPR, CCPA and, most recently, the European Union’s AI Act. Risks and fines have run into the billions. This is a story we already know well. Thus, Data Security and Privacy have become a critical function, primarily for the obvious use case: compliance and regulation. Yet only 11% of organizations have encrypted over 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enabled real-time supply chain visibility and dynamic pricing strategies, and let organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real-Time Analytics – Because FPE returns data in its original format, the data keeps the same length and structure, so more data engineers, scientists and analysts can work with it without being exposed to sensitive information.

Data Sharing – FPE enables sharing of sensitive personal and confidential information, supporting secure collaboration and innovation alike.

Proactive Data Security – FPE allows for the pseudonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding a company to ransom when it takes a proactive approach, combining FPE with other Data Security Platform features.

Empowered Data Engineering – With FPE, data engineers can still build, test, and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failing on format. Data validations and data quality checks for formats, lengths, and more can be written and tested without exposing sensitive information. Federated, aggregation, and range queries still execute without the need for decryption; note, however, that FPE is not order-preserving, so equality joins and distinct counts give the same answers on ciphertext as on plaintext, while range filters, sorts, and arithmetic aggregates do not reflect the underlying values. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with proper rights to see the original values of data.
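The difference between equality and ordering is easy to check. A minimal sketch, assuming a deterministic FPE with a fixed key and tweak: a toy keyed permutation of 4-digit strings (built by sorting the whole domain by HMAC-SHA256, standing in for FF3-1 and only practical for tiny domains) shows that join matches and distinct counts agree between plaintext and ciphertext, while a range filter does not.

```python
import hashlib
import hmac


def make_toy_fpe(key: bytes, digits: int = 4):
    """Return a deterministic, format-preserving toy cipher for `digits`-long decimal strings.

    Sorting the whole domain by HMAC-SHA256 of each value gives a keyed permutation.
    """
    domain = [str(n).zfill(digits) for n in range(10 ** digits)]
    shuffled = sorted(domain, key=lambda s: hmac.new(key, s.encode(), hashlib.sha256).digest())
    table = dict(zip(domain, shuffled))
    return table.__getitem__


enc = make_toy_fpe(b"demo key")
orders = ["0042", "0042", "1999", "0100", "0042"]  # customer IDs on order rows
customers = ["0042", "0100", "7777"]               # customer IDs in a dimension table

# Equality-based operations give the same answers on ciphertext as on plaintext.
plain_ids = set(customers)
cipher_ids = {enc(c) for c in customers}
plain_matches = sum(o in plain_ids for o in orders)
cipher_matches = sum(enc(o) in cipher_ids for o in orders)
print(plain_matches == cipher_matches)                    # True
print(len(set(orders)) == len({enc(o) for o in orders}))  # True

# Ordering is not preserved, so a range filter on ciphertext selects different rows.
plain_low = [o for o in orders if o < "0500"]
cipher_low = [o for o in orders if enc(o) < enc("0500")]
print(plain_low, cipher_low)
```

This is why equality joins and counts on FPE-protected columns keep working, while range logic needs either decryption or a different technique.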

Cost Management – While FPE does not come close to solving Cost Management in its entirety, it can definitely contribute. We are seeing a need for FPE as an alternative to replicating data in the cloud to development, test, and production support environments. With data transfer, storage, and compute costs, moving data across regions and environments can be really expensive. With FPE, data can be encrypted and decrypted with compute that costs less than organizations' current antiquated data replication jobs. This makes FPE a viable cost-saving option for producing production-like data in non-production environments. Look for a future blog on this topic and all the benefits that come along.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well-documented challenges with the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role- and attribute-based access controls, and data activity monitoring is needed for a proactive approach to security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations, as there have been many high-profile data breaches recently. There are several ways to secure data, including restricting access, masking, encryption, and tokenization. These can pose challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

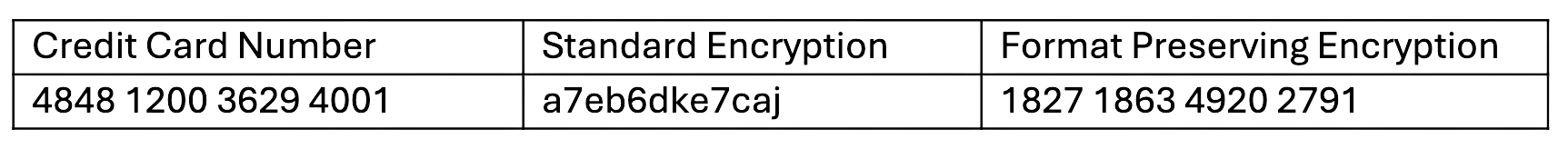

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

As you can see, Standard Encryption produces a completely different output, which may be incompatible with systems that require or expect a 16-digit numerical format. With FPE, the encrypted data still looks like a valid 16-digit number. This is extremely useful where data must stay in a specific format for compatibility, compliance, or usability reasons.
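To see why the format matters, consider the kind of check a downstream system applies to a card-number column. This sketch assumes AES-GCM as the "standard" encryption, with a 12-byte nonce and a 16-byte authentication tag stored alongside the ciphertext and the whole result base64-encoded; random bytes stand in for the real output because only its length and alphabet matter here.

```python
import base64
import os
import re

CARD_FORMAT = re.compile(r"[0-9]{16}")  # the kind of check a downstream system might apply


def looks_like_card(value: str) -> bool:
    return CARD_FORMAT.fullmatch(value) is not None


# Shape of a conventional AES-GCM result for a 16-character card number:
# 12-byte nonce + 16-byte ciphertext + 16-byte authentication tag = 44 bytes.
# Random bytes stand in for real ciphertext; only the length and encoding matter here.
gcm_stored = base64.b64encode(os.urandom(12 + 16 + 16)).decode("ascii")

print(len(gcm_stored), looks_like_card(gcm_stored))  # 60 False
print(looks_like_card("4111111111111111"))           # True: any 16-digit FPE output passes too
```

The 60-character base64 string breaks the 16-digit validation outright, while an FPE ciphertext, being 16 decimal digits by construction, passes it.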

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next the NIST algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then selectively be decrypted using ALTR’s access policies.
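ALTR does not publish how its column analysis works, so the following is only a hypothetical sketch of the idea: pick the smallest alphabet (radix) that covers a sample of column values, record the length range, and flag columns whose shortest values fall below FF3-1's minimum length and would need padding. The alphabet list and the result field names are illustrative assumptions.

```python
import string

# Candidate alphabets, smallest first (an assumption of this sketch).
ALPHABETS = [
    string.digits,                           # radix 10
    string.digits + string.ascii_lowercase,  # radix 36
    string.digits + string.ascii_letters,    # radix 62
]
MIN_DOMAIN_SIZE = 1_000_000                  # FF3-1: radix**minlen >= 1,000,000


def analyze_column(values: list[str]) -> dict:
    """Infer the smallest covering alphabet and the length range of a column sample."""
    if not values:
        raise ValueError("need at least one sample value")
    chars = set("".join(values))
    for alphabet in ALPHABETS:
        if chars <= set(alphabet):
            break
    else:
        raise ValueError("sample contains characters outside the supported alphabets")
    radix = len(alphabet)
    minlen = 2
    while radix ** minlen < MIN_DOMAIN_SIZE:
        minlen += 1
    shortest = min(len(v) for v in values)
    return {
        "radix": radix,
        "min_length": shortest,
        "max_length": max(len(v) for v in values),
        "cipher_minlen": minlen,
        "needs_padding": shortest < minlen,
    }


# radix 10; the shortest value (4 digits) is below minlen 6, so needs_padding is True
print(analyze_column(["1234", "98765", "555000"]))
```

A value such as 555-1234 is rejected by this sketch; a fuller analyzer would encrypt only the digits and keep separators in place.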

FPE offers several benefits for organizations that deal with structured data:

1. Adds an extra layer of protection: Even if a system or database is breached, the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations like PCI-DSS, HIPAA, and GDPR will mandate safeguarding, such as encryption, of sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

Snowflake makes moving your data to the cloud quick and easy. But hosting sensitive data in Snowflake can add a layer of complexity due to governance and security concerns that could slow down or even grind your project to a halt.

Ask yourself these questions to find out if ALTR can help control and secure your sensitive data in Snowflake, moving your data projects along and getting to Snowflake value more quickly:

When adding new data or new databases, it can be difficult to really know what you're bringing in. If you have hundreds or thousands of databases or millions of rows and columns of data, how can you know which have sensitive data? Column names? Not so fast – some databases use indecipherable codes as column names and there’s no guarantee the column name matches the data in the column. Manual review? Sure, if you don’t want to do anything else for the rest of your life. But if you don’t know which data is sensitive or regulated, how can you safeguard it?
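Classifying by content rather than by column name is the usual answer. A minimal sketch, assuming a handful of illustrative detectors (regular expressions plus a Luhn check to cut false positives on card numbers) and an arbitrary 80% match threshold over a sample of values; real classifiers typically use far more detectors.

```python
import re
from typing import Optional


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        digit = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


# Illustrative detectors only (hypothetical labels and patterns).
DETECTORS = {
    "US_SSN": lambda v: re.fullmatch(r"[0-9]{3}-[0-9]{2}-[0-9]{4}", v) is not None,
    "EMAIL": lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}", v) is not None,
    "CARD_NUMBER": lambda v: re.fullmatch(r"[0-9]{13,19}", v) is not None and luhn_valid(v),
}


def classify_column(sample: list[str], threshold: float = 0.8) -> Optional[str]:
    """Return the first label whose detector matches at least `threshold` of the sample."""
    if not sample:
        return None
    for label, detect in DETECTORS.items():
        hits = sum(1 for value in sample if detect(value))
        if hits / len(sample) >= threshold:
            return label
    return None


# The column might be named "c_017"; the values still give it away.
print(classify_column(["4111111111111111", "5500000000000004", "4012888888881881"]))  # CARD_NUMBER
```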

Once you find all that sensitive data (wherever it is), the next step is to get it categorized and tagged so that you can automatically apply the appropriate privacy and access policies. Maybe only HR should have access to employee Social Security numbers, or maybe only marketing actually needs customer date of birth. Are you going to manually go in and grant or deny access to those columns or rows for each user? What a headache. If you’re doing this, keep reading.
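The point of tagging is that a policy is written once per tag rather than once per column and user. A minimal sketch of that idea, using the HR and marketing examples above; the tag names, roles, and full-mask behavior are hypothetical, and in Snowflake itself this would be expressed with tag-based masking policies rather than application code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tag policy: roles allowed to see each tag's values in the clear.
TAG_POLICY = {
    "SSN": {"HR"},
    "DATE_OF_BIRTH": {"MARKETING"},
}


@dataclass
class Column:
    name: str
    tag: Optional[str] = None  # assigned by classification; None means not sensitive


def read_value(column: Column, value: str, role: str) -> str:
    """Return the clear value if the role may see the column's tag, otherwise a mask."""
    if column.tag is None:
        return value
    if role in TAG_POLICY.get(column.tag, set()):  # unknown tags default to masked
        return value
    return "*" * len(value)


ssn = Column("emp_ssn", tag="SSN")
print(read_value(ssn, "123-45-6789", role="HR"))         # 123-45-6789
print(read_value(ssn, "123-45-6789", role="MARKETING"))  # ***********
```

Adding a new SSN column then only requires tagging it; no per-user grants change.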

After you have that data classified and tagged, you want to be able to see who accesses it – when and how much. If you don’t have this in an easy-to-understand dashboard, it makes comprehending normal data usage very difficult and identifying anomalies and outliers just about impossible. Not to mention, complying with audit requests for regulated data access becomes another onerous, time-consuming task.

Developers do love coding, but is writing (and worse, updating!) access controls the best use of your time in Snowflake? What do you do when new data is added? Or new users? What if you want to hand off maintenance to someone on the governance team or a line of business data owner because they set the policy? Do they have to learn to code SQL? Unlikely.

As more and more companies strive to become “data-driven” and more and more people across the company understand the value data analytics can provide, requests for data access can explode. While in the past it may have been one or two requests a week, it can easily grow to hundreds or thousands in the largest companies. Granting data access to new users can quickly become a full time job. Is that what you signed up for?

Snowflake provides powerful native capabilities for masking data, but the catch is that you need to know SQL to use them. This means coders whose time would be better spent on other, more high-value activities spend time writing and rewriting masking policies. Wouldn’t it be nice to have an interface over those native features that you can activate and update in just a few clicks? (*hint* ALTR has this.)

Privileged users and credentialed access present a significant risk to PII, PHI and PCI. Even if you trust the user, credentials can be lost, stolen or hacked. And even trusted employees can become disgruntled or sense an opportunity. It’s better to assume that credentials are always compromised and put a system in place that ensures even the most privileged user can’t do too much damage.

Snowflake and Tableau make a powerful combination for data analytics. Instead of granting users access to data directly in Snowflake, many organizations choose to offer it through a business intelligence tool like Tableau. This means setting up a Tableau user account for each person, but to save time and maintenance, organizations sometimes use a single Tableau service account to access Snowflake. So, from a data governance perspective, without some additional help, admins can’t see who is accessing what data.

When your data governance is part of a compliance program focused on meeting regulatory requirements, it’s not enough to set up the policies. You have to prove that they’re working correctly and no one who shouldn’t have access did access the data. Can you prove this, without spending hours or weeks sifting through data access logs?

Why should you have to pull all this info together manually by scanning through text access logs, or go in and update SQL access policies line by line? These activities are all very critical to governing and securing your data in Snowflake, but they’re really just administrative tasks. Is this why you became a DBA or data engineer? Probably not. What you really want to do is pull in new data streams, offer up new analytics opportunities and find ways to extract more value from your data. Why don’t you do that instead?

If you answered “No” to even one of these questions, ALTR’s Free plan can help you today. Unlike legacy data governance solutions that take 6 months to implement and cost at least 6 figures to start, we built our solution to simplify data control and security for busy data architects, engineers and DBAs so you can focus on getting value from data without disruption. In just 10 minutes you can control 10 columns of sensitive data in Snowflake and get visibility into all your data usage with role-based heat maps and analytics dashboards!

The heightened regulatory environment kicked off with GDPR and CCPA in 2018, the unrelenting PII data breaches, and the acceleration of data centralization across enterprises into the cloud have created enormous new risks for companies. This has skyrocketed the importance of “data governance” and transformed what was once a wonky business process into a hot topic and an even hotter technology category. But some software companies are self-servingly narrowing the definition in a way that leaves customers’ sensitive data exposed.

There are a few different definitions of the concept floating around. The Data Governance Institute defines it as “a system of decision rights and accountabilities for information-related processes, executed according to agreed-upon models which describe who can take what actions with what information, and when, under what circumstances, using what methods.” The Data Management Association (DAMA) International defines it as “planning, oversight, and control over management of data and the use of data and data-related sources.”

Both are pretty broad and very focused on process. Technologies can help at various stages though, and we’re seeing software companies make their claims as “data governance” solutions. But unfortunately, too many are narrowly focused on the initial steps of the data governance process—data discovery and classification—and act as if that’s enough. It’s not even close to being enough.

Data discovery and classification are critical first steps of the data governance process. You must know where sensitive data is and what it is before you can be prepared to govern or secure it. Creating a “card catalog” for data that puts all the metadata about that data at a user’s fingertips, like many solutions do, is extremely useful. But if you’ve ever used a card catalog you know that the card tells you about the book, but it’s not the book itself. For valuable books, you may have to take the card to the librarian to retrieve the book for you. Or if the book is part of a rare books collection, it may even be locked away in a vault. The card catalog itself is a read-only reference that does nothing to make sure the most valuable books are protected.

It doesn’t stop the librarian from accessing sensitive data themselves or using their credentials to get into the locked rare books room. And if the librarian loses their credentials or has them stolen, there’s no way to stop a thief from taking off with irreplaceable texts.

It’s similar with data governance tools. Knowing where the data is and providing information around it are necessary pre-conditions, but they’re not governance.

Software solutions that say they provide “data governance” but don’t go further than data discovery and classification leave their customers asking, “What next?” It’s a little like this video. Shouldn’t a vendor help you with a full solution instead of just identifying the problem? Recently some have taken one next step into access controls and masking, but the way they’ve implemented this may cause additional pain for users. If the solution requires data to be cloned and copied into a proprietary database in order to be protected, it leaves the original data exposed. Or if users have to write SQL code access controls, it puts a burden on DBAs. These are not full solutions.

For complete data governance, policy enforcement needs to be automated, require no code to implement, and focus on the original data (not a copy) to ensure only the people who should have access do. Companies then need visibility into who is consuming the data, when, and how much, in a visualization tool that makes it easy to see both baseline activity and out-of-the-norm spikes. Finally, in order to truly protect data, companies need a solution that takes the next crucial step into real data security that limits the potential damage of credentialed access threats. This means consumption limits and thresholds where abnormal utilization triggers an alert and access can be halted in real-time, and the power to limit data theft by tokenizing the most critical and valuable data.
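A consumption threshold can be sketched as a sliding-window counter per user. This is a minimal illustration under assumed parameters (a row limit per time window, with the user blocked once it is exceeded), not ALTR's implementation; a real system would also raise the alert and enforce the block in the data path.

```python
from collections import defaultdict, deque


class ConsumptionLimiter:
    """Per-user sliding-window row limit; exceeding it blocks the user (toy sketch)."""

    def __init__(self, max_rows: int, window_seconds: float):
        self.max_rows = max_rows
        self.window = window_seconds
        self.events = defaultdict(deque)  # user -> deque of (timestamp, rows)
        self.totals = defaultdict(int)    # user -> rows read inside the current window
        self.blocked = set()              # users whose access has been halted

    def record(self, user: str, rows: int, now: float) -> bool:
        """Register a query returning `rows` rows at time `now`; return False if access is halted."""
        if user in self.blocked:
            return False
        events = self.events[user]
        # Drop events that have slid out of the window.
        while events and events[0][0] <= now - self.window:
            _, old_rows = events.popleft()
            self.totals[user] -= old_rows
        events.append((now, rows))
        self.totals[user] += rows
        if self.totals[user] > self.max_rows:
            self.blocked.add(user)        # a real system would alert and revoke access here
            return False
        return True


limiter = ConsumptionLimiter(max_rows=1_000, window_seconds=60)
print(limiter.record("ana", 400, now=0))   # True
print(limiter.record("ana", 500, now=10))  # True: 900 rows in the window
print(limiter.record("ana", 200, now=20))  # False: 1,100 rows exceeds the limit
print(limiter.record("ana", 1, now=500))   # False: still blocked until someone reviews
```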

All of these steps (data intelligence, discovery, classification, access and consumption control, and tokenization) are necessary for proper data governance. To faithfully live up to the responsibility created by collecting and storing sensitive data, companies need a solution like ALTR’s complete Data Governance to keep sensitive data safe.

In the mad rush to become the next winner of the “data-driven” race, many companies have focused on enabling data access across the business. More and more data are used, viewed and consumed throughout the company by more and more groups and more and more individuals. But sometimes a critical piece of information is missing: who’s consuming what data, where, when, why, and how much. Without this data observability, companies are flying blind.

When you don’t know what “normal” is, everything is “abnormal”. And how can you effectively run a business that way?

Being able to observe data consumption and truly comprehending how data is being utilized brings tremendous value to an enterprise. When you have the full context around how your sensitive PII, PHI and PCI data is consumed by whom, in what roles, at what time of day, on what day of week, in what patterns across time, you can start to understand what “normal” looks like. When you have this level of operational data visibility, you can compare normal to abnormal and begin to drive valuable insights. You can create custom views and reports that show how the data is being consumed for groups across the enterprise. And you can start to make better decisions for your business.
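The following minimal Python sketch makes the idea of "normal" versus "abnormal" more concrete. It treats a user's recent daily consumption as a baseline and flags a day that falls far outside it. The user names, row counts, and three-standard-deviation threshold are all hypothetical. This is a simple statistical illustration and does not describe how ALTR computes its baselines.

```python
from statistics import mean, stdev

# Hypothetical history: rows of sensitive data each user read per day over a week.
history = {
    "analyst_1": [1200, 950, 1100, 1300, 1000, 1150, 1050],
    "dba_1": [0, 0, 5, 0, 0, 2, 0],
}


def baseline(counts):
    """Summarize a user's 'normal' as the mean and sample standard deviation."""
    return mean(counts), stdev(counts)


def is_abnormal(user, todays_count, threshold=3.0):
    """Flag a count more than `threshold` standard deviations away from the user's mean."""
    mu, sigma = baseline(history[user])
    if sigma == 0:
        # A perfectly flat history: any change at all is out of the norm.
        return todays_count != mu
    return abs(todays_count - mu) / sigma > threshold


print(is_abnormal("analyst_1", 1250))   # False: within the usual range
print(is_abnormal("analyst_1", 50000))  # True: a sudden spike
print(is_abnormal("dba_1", 400))        # True: a DBA suddenly reading sensitive rows
```

A fuller version would keep separate baselines by role, time of day, and day of week, because the context described above is what separates a routine spike from a suspicious one.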

For “risk” executives – in other words, people who look at risk to the business at a high level and care about what happens to the brand – you can create visualizations that show how often data that is covered by regulatory or privacy requirements is accessed and by whom. For execs who have to sign privacy and compliance attestations you can tailor visualizations that give them clear information they need in order to feel confident signing their name.

These groups may discover anomalies like DBAs looking at Social Security numbers or developers accessing customer PII. They can then set up more stringent governance policies to correct that access. They can also create purpose-based access controls based on normal usage. For example, they can set controls that allow access within those normal constraints, while any access outside them is flagged and an alert is sent to the security team in real time.

Data teams may want to look at the value the company is getting out of its data consumption. They will want to ensure that people who legitimately need access to data get as much as they legitimately need without any friction. Comprehensive observability into how the company is using data, what roles or lines of business are using what data, and how they’re using it can help data teams optimize data consumption to deliver the most value.

If data sharing and monetization are part of your business, observability over data consumption is key. You can start to see which data and which data sets are valuable to which customer roles, segregated by company size, location, industry and more. For example, one of the companies I’m working with has seen that midsize companies are interested in a specific data set, but enterprise-size companies look at a completely different data set. Knowing this could lead to data product optimizations like carving out the enterprise-class data and potentially charging a higher price.

ALTR provides several features with Snowflake that make data observability easy. You can classify which columns contain sensitive data and focus observation only on those, so you’re not drowning in an unnecessary flood of “abnormal” alerts. You can step back and look at a data usage heatmap that gives you an overview of consumption by individual so you can see normal usage over time and easily spot and dig into any outliers. And our Tableau user governance capability allows you to collect individual consumption information on end users even when they’re using a shared service account.

I believe observability is one of the most valuable things we do: everything else we effect, evoke or instrument is driven by knowing who is consuming what data. It’s a powerful foundation for our complete Data Governance solution and can be a powerful tool to drive insights and action for your company.

SQL: Structured Query Language.

In this blog, we’re going to explain why SQL is so important without getting too technical. It’s the query language for relational databases from Oracle, Microsoft, IBM, Snowflake, and others, which often store and process sensitive information such as personally identifiable information (PII), protected health information (PHI), and payment card (PCI) data.

Let’s use Snowflake (who, coincidentally, has their own version of SQL) for example. They have one of the best examples of a secure data environment: SSO, 2FA, RBAC, secure views, you name it. That makes it difficult to misuse data access... but not impossible. The aforementioned security features are entirely dependent upon and trusting of the identity of the user. If someone can present the correct sequence of bytes over the internet to Snowflake, then they can pretend to be someone else.

In a world where you can hardly trust your food to be delivered with integrity, how can you trust a solution that depends entirely upon the validity of the user? If someone will steal your lunch, then someone with access to your data, which is worth far more than your $3 taco, may well steal it or be targeted by criminals who want it.

Extend the idea of Zero Trust into the SQL layer, of course! For an in-depth look at Zero Trust, you can check out our webinar with Forrester Analyst Heidi Shey (Forrester coined the term Zero Trust, for reference). But for our purposes here, let’s just define it as “never trust, always verify”. In other words, each time someone wants access to data, confirm they should be able to do that. Think of it like an ATM: you walk up and verify your identity in order to withdraw cash. Even after your identity is verified, you can still only take out a certain amount. If you go across town to a different ATM, you’ve got to verify your identity again to request money. And if you have reached your daily withdrawal limit, you are done. It doesn't matter that it is actually you asking for the money; the bank assumes it isn't you.

Verifying a user’s identity means a lot of things other than just 2FA, SSO, and RBAC. If someone’s credentials get stolen, you’d think it’s virtually impossible to know, right? Nope.

Every time a user wants to access data – regardless of their identity, role or title – you should check their previous use of data (per minute, hour, day, week, etc.) and other factors like what device or application they’re coming from. If it doesn’t match up with typical user patterns, then you know there’s a problem.

Regardless of what information the user is trying to request, if the rate of data consumption breaks a limit that the business deems appropriate, then the user (whoever they are) should not get the data. Period. Even if their title is CEO, they should not be able to access all the PII in the table in one query. Why? Because more access = more risk.
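The ATM-style rule above can be expressed as a per-user limit on the number of rows read within a rolling time window. The following minimal Python sketch works under several assumptions. The `ConsumptionLimiter` class and its parameters are hypothetical, and the sketch assumes a query's row count is known before results are returned. It also ignores the device and application signals mentioned earlier. It is not ALTR's risk engine.

```python
import time
from collections import defaultdict, deque


class ConsumptionLimiter:
    """Deny any request that would push a user past `max_rows` within `window_seconds`."""

    def __init__(self, max_rows, window_seconds):
        self.max_rows = max_rows
        self.window = window_seconds
        self.events = defaultdict(deque)  # user -> deque of (timestamp, rows) already granted

    def _rows_in_window(self, user, now):
        events = self.events[user]
        # Drop grants that have aged out of the rolling window.
        while events and now - events[0][0] >= self.window:
            events.popleft()
        return sum(rows for _, rows in events)

    def authorize(self, user, rows_requested, now=None):
        """Return True and record the grant if allowed; otherwise return False."""
        now = time.time() if now is None else now
        if self._rows_in_window(user, now) + rows_requested > self.max_rows:
            # Over the limit: block regardless of who the user claims to be.
            return False
        self.events[user].append((now, rows_requested))
        return True


# A daily limit of 10,000 rows, applied to every user, including the CEO.
limiter = ConsumptionLimiter(max_rows=10_000, window_seconds=86_400)
print(limiter.authorize("ceo", 2_000, now=0))       # True
print(limiter.authorize("ceo", 9_000, now=60))      # False: 11,000 would exceed the limit
print(limiter.authorize("ceo", 8_000, now=120))     # True: exactly 10,000
print(limiter.authorize("ceo", 1, now=180))         # False: the limit is reached
print(limiter.authorize("ceo", 5_000, now=90_000))  # True: earlier grants have expired
```

Denied requests are not recorded, so a blocked query does not count against the user's allowance. A production system would also raise an alert at the point where this sketch simply returns `False`.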

Having the ability to not only know when too much data is being queried but also being able to stop it in real time is game-changing and will effectively subdue the threat of credentialed access breaches.

Snowflake Cloud Data Warehouse has shown the world that separating compute from storage is the best path forward to scale data workloads. They also have an extensive security policy which should make any CISO/CIO comfortable moving data to Snowflake.

So why would Snowflake need Zero Trust at the SQL layer, given the statement above? It comes down to the shared responsibility model that comes with using IaaS, PaaS, or, like Snowflake, SaaS. Under that model, the customer of any SaaS provider still has two very important problems left to solve: identity and data access.


Okta, for example, does a great job of solving for the identity portion of the model. And Snowflake has done everything possible to help with the data consumption side (I would say that using Snowflake is the safest way today to store and access data), but there is still this last remaining “what if” out there: what if someone steals my credentials? Or decides they want to do something malicious? The insider threat to data is very difficult for any organization to handle on their own.

It starts by enhancing your Snowflake account with a solution that can detect and respond to abnormal data consumption in real-time. This will give your organization complete control over each user’s data consumption, regardless of access point (due to cloud integration).

This means that every time an authorized user requests data within Snowflake, they get evaluated and verified by a Zero Trust risk engine (like the ATM example). If abnormal consumption is detected based on the policies of the governing risk engine, then you can cut off access in real-time. The best part is that because it is integrated into Snowflake, users don’t see any changes to their day to day, your Snowflake bill won’t increase, and your security team can finally stop credentialed breaches and SQL injection attacks for good.

The 2023 Verizon Data Breach Investigations Report is out and once again, people are a key security weakness. "74% of all breaches include the human element through Error, Privilege Misuse, Use of stolen credentials or Social Engineering," according to the report.

So, why haven’t we (“we” meaning all of us who care about protecting data) stopped the credentialed access threat? Do we not realize it’s happening (despite Verizon’s annual reminder), do we not see it as a priority, or is there another cause? And, if we’re not going to stop credential theft, how can we make sure data is secure despite the danger?

Usernames and passwords have been used for as long as there have been logins to digital accounts. It’s actually not a bad way to secure access. The problem is mostly people.

Password best practices, such as using long random passwords, a unique password for every site, and a password manager, all require users to make an extra effort. Some of the best passwords are essentially a long, completely random string of characters, which also turns out to be almost impossible for a normal person to remember. Unfortunately, easy-to-remember passwords are also easy to hack. And when people do use a strong password, they often reuse it, diminishing its effectiveness. A Google survey found 65% of people reuse the same password across multiple sites. The same Google survey found that only 24% of people use a password manager.

Almost the opposite is true for clicking on phishing emails. It may not be that people aren’t careful, but that they respond too quickly and do as they’re asked. Phishing emails are now more customized than ever, utilizing info shared on social networks or purporting to be from high-level company execs. When an employee receives an urgent email demanding they log into a seemingly familiar tool, using their company username and password, to carry out a CEO request, too many people just want to do what they need to do to stay in the CEO’s good graces.

Multi-factor authentication (MFA) has its own struggles. At the RSA 2020 security conference, Microsoft said that more than 99.9 percent of Microsoft enterprise accounts invaded by hackers didn’t use MFA, and only 11% of enterprise accounts had MFA enabled. There are several reasons a company might not utilize MFA: it’s not seen as a priority, quality and security vary between solutions, and employees push back. Sixty-three percent of respondents in a security survey said they experience resistance from employees who don’t want to download and use a mobile app for MFA. Fifty-nine percent are implementing 2FA/MFA in stages due to employees’ reluctance to change their behavior.

Knowing that the easiest and best ways to stop credentialed access threats are undermined by people being people, we’re simply better off assuming all credentials are compromised. Stolen credentials are most dangerous when an account that gets through the front door has access to the entire house, including the kitchen sink. Instead of treating the network as having one front door with one lock, we need to require authorization to enter each room of the house. This is actually Forrester’s “Zero Trust” security model: no single login, identity, or device is trusted enough to be given unlimited access.

This is especially important as more data moves outside the traditional corporate security perimeter and into the cloud, where anyone with the right username and password can log in. While cloud vendors do deliver enterprise class security against cyber threats, credentialed access is their biggest weakness. It’s nearly impossible for a SaaS-hosted database to know if an authorized user should really have access or not. Identity access and data management are up to the companies utilizing the cloud platform.

Omer Singer, Head of Cyber Security Strategy at Snowflake, explains why it’s important to take a shared responsibility approach to protecting your data in the cloud.

That means companies need a tool which doesn’t just try (and often fail) to stop threats at the door. You need a cloud-based data access control solution like ALTR that never believes a user is who their credentials say they are. Every time an apparently “authorized” user requests data in the platform, the request is evaluated and verified against the data governance policies in place. If abnormal consumption is detected, then access can be cut off in real-time. Even seemingly authorized users aren’t allowed to take whatever they want.

The U.S. has a long history of solving big problems – we came together during WWII to ramp up wartime production of military supplies and equipment, and more recently, we helped fund the miraculous creation of the COVID-19 vaccine in less than a year. It’s a little baffling that we continue to allow the credentialed access threat to harm our industries and damage data security. It’s a solvable problem that I hope we start taking seriously. And, I hope we see a different threat at the top of next year’s Verizon report.

Pete Martin is an ALTR co-founder and Director of Product Marketing, and James Beecham is a co-founder and ALTR’s Chief Technology Officer. Since establishing ALTR with their other partners, the two have been immersed in the world of data, data governance and data security. After listening to their passionate exchanges about the shifting industry, the exploding ecosystem and where they see it all going, we decided to invite an audience to join their conversations every Friday as part of our new LinkedIn Live program: The Data Planet.

In our premiere episode last Friday, the guys covered topics including:

In future episodes the guys will also host industry partners and leaders as guests. Take a look, and we hope you can join us weekly to discuss the rapidly shifting data landscape. Attend our next episode here.

Since its launch in 2014, Snowflake has been focused on enabling the data cloud, innovating with its speed, approach to cloud scaling, and consumption-based business model. But it has also led the way with its investment in extensibility and support for the programming languages, frameworks, and data science tools and technologies its users prefer. This partnership with complementary technologies helps deliver great benefits to Snowflake customers. As one of those technology partners, we’d like to congratulate Snowflake on the announcement of its new extensibility features: Snowpark developer experience and Java UDFs. These additions will allow developers and data scientists to leverage Snowflake’s powerful platform capabilities and the benefits of Snowflake’s Data Cloud throughout their data science, integration, quality, and BI projects for increased efficiency and insights.

ALTR is also leading the way in taking advantage of Snowflake’s commitment to extensibility by delivering our cloud-based data governance and security solutions through direct integration with Snowflake. ALTR taps into Snowflake’s existing masking policies, user-defined functions (UDFs), and external functions in order to securely protect sensitive data. The powerful and flexible masking policies are connected to columns identified as sensitive. Whenever a masked column is queried, from anywhere inside or outside Snowflake, a UDF within Snowflake is called to act as a bridge to the external function, which communicates with ALTR. ALTR then provides direction for how the data in that column should be governed based on established rules and policies.
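Snowflake external functions exchange JSON batches with a remote service. Each request body has the shape `{"data": [[row_number, arg1, ...], ...]}`, and the response must return one `[row_number, result]` pair for each input row. The Python sketch below shows the kind of per-row decision a remote governance service could make. It assumes the masking policy passes the column value and the caller's role as the two arguments. The `ALLOWED_ROLES` set and the last-four-characters masking rule are hypothetical, and this is not ALTR's actual API.

```python
import json

# Hypothetical policy: only these roles may see the column in clear text.
ALLOWED_ROLES = {"COMPLIANCE_ADMIN"}


def mask(value):
    """Hide all but the last four characters; pass NULLs through unchanged."""
    if value is None:
        return None
    text = str(value)
    return "*" * max(len(text) - 4, 0) + text[-4:]


def handle_batch(body):
    """Answer one Snowflake external function request.

    Snowflake sends {"data": [[row_number, arg1, arg2, ...], ...]} and expects
    {"data": [[row_number, result], ...]} back. Each row here is assumed to carry
    (value, caller_role) as its two arguments.
    """
    results = []
    for row_number, value, role in body["data"]:
        results.append([row_number, value if role in ALLOWED_ROLES else mask(value)])
    return {"data": results}


request = {"data": [[0, "123-45-6789", "ANALYST"], [1, "123-45-6789", "COMPLIANCE_ADMIN"]]}
print(json.dumps(handle_batch(request)))
# {"data": [[0, "*******6789"], [1, "123-45-6789"]]}
```

In practice, a handler like this would run behind the HTTPS proxy endpoint (for example, an API gateway) that the external function is configured to call. Keeping the row numbers intact lets Snowflake match each result to its input row.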

“ALTR is an innovator in using Snowflake’s extensibility features,” said Tarik Dwiek, Head of Technology Alliances at Snowflake. “By utilizing these features, they’re able to deliver powerful data protection and security natively integrated, allowing our customers to get more value from their Snowflake investment.”

ALTR’s integration with these extensibility features allows Snowflake customers to add governance and security more quickly—without any software having to be deployed or code written. They can add sensitive data faster, more securely and deliver more flexible data access because natively-embedded security policies will always be invoked. More data with more flexible access allows data insights to come faster for the business. And this kind of native integration enables the cloud-based, highly scalable Snowflake data platform to interact directly with the cloud-based, highly scalable ALTR data governance and security platform, with no outside components to impede or impair the combined power of the two solutions.

We believe that this is where the cloud data ecosystem is going—native integrations enabled by extensible platform trailblazers like Snowflake. And we’re thrilled to see Snowflake continue leading the way.

As an increasing number of companies join the Snowflake Data Cloud to extract business value and insights from data, the ability to utilize and govern sensitive data on the platform has become a critical priority. Since kicking off our partnership in 2020, launching our cloud-native integration in February 2021, and now as a Snowflake Premier Partner, ALTR has been laser-focused on delivering enterprise-class Snowflake data governance solutions that make governing data as simple and easy as possible for Snowflake customers.

By leveraging Snowflake’s native data governance capabilities, we’re able to deliver unprecedented data usage visibility and analytics, automated policy-based access controls, and the highest levels of data protection, all with no code required to implement, maintain, or manage. Removing the roadblocks to protecting sensitive data means Snowflake users can extract the most value from their data and maximize their investment in the platform.

We’re proud to join the initiative to make Snowflake data governance a top focus and enable powerful new features for our shared customers as a member of the Snowflake Data Governance Accelerated Program.

ALTR is a natural fit for the Snowflake Governance Accelerated Program because we’ve tightly integrated with Snowflake’s native capabilities to enable no-code data governance at scale from day one. As Snowflake continues to make new features and capabilities available, we integrate them into our platform, allowing customers to leverage Snowflake's native capabilities in an easier, more scalable way.

ALTR simplifies how organizations implement and maintain data masking policies to protect private information in Snowflake. With ALTR, you can see the data and roles your policies are applied to and implement new or modify existing masking policies all without writing any SQL code.

ALTR has pre-built support for Snowflake’s upcoming native Classification feature, which classifies data and tags objects directly in Snowflake without customer data leaving the platform. Using ALTR, customers can automate the discovery and classification process without writing any code.

ALTR automatically visualizes data from Snowflake’s Access History in both a heatmap and timeline view. You can use this intelligence to audit and report on data access, confirm your governance policies are being enforced correctly, and identify areas where new policies can be applied.
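The following Python sketch gives a rough illustration of how access events become a heatmap by counting accesses per user and hour of day. The sample rows are hypothetical and only loosely modeled on the `USER_NAME` and `QUERY_START_TIME` columns of Snowflake's `ACCOUNT_USAGE.ACCESS_HISTORY` view. The sketch omits querying the view, and ALTR's own visualization may aggregate differently.

```python
from collections import Counter
from datetime import datetime

# Hypothetical (user, query start time) pairs, loosely shaped like
# USER_NAME and QUERY_START_TIME in SNOWFLAKE.ACCOUNT_USAGE.ACCESS_HISTORY.
rows = [
    ("ANALYST_1", datetime(2024, 3, 4, 9, 15)),
    ("ANALYST_1", datetime(2024, 3, 4, 9, 40)),
    ("ANALYST_1", datetime(2024, 3, 5, 14, 5)),
    ("DBA_1", datetime(2024, 3, 5, 2, 30)),
]


def heatmap(rows):
    """Count accesses per (user, hour of day); missing cells read as 0."""
    return Counter((user, started.hour) for user, started in rows)


def render(grid):
    """Print one line per user with 24 hourly cells."""
    users = sorted({user for user, _ in grid})
    print("user".ljust(10) + "".join(f"{hour:>3}" for hour in range(24)))
    for user in users:
        print(user.ljust(10) + "".join(f"{grid[(user, hour)]:>3}" for hour in range(24)))


render(heatmap(rows))
```

A lone 2 a.m. cell for a DBA is the kind of outlier that a heatmap makes easy to spot and then investigate in a timeline view.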

Through the powerful Snowflake data governance capabilities, Snowflake and ALTR enable organizations to effectively secure, govern, and unlock the full potential of their data. In addition, ALTR’s SaaS platform, no-code technology, and free plan democratize data governance, removing the barriers of long, complex implementations, coding knowledge, and high costs, making it even easier for Snowflake customers to protect their data and get full value from their investment in Snowflake.

Snowflake, the Web's hottest cloud data platform, has enjoyed a rapid rise to popularity because of two major forces in business. The first is the cold reality that businesses of all shapes and sizes must become data-driven or face significant disadvantages in competing with those who are. The second is that, mostly due to the Cloud, users' expectations have changed forever. Technology must be simple: simple to use, simple to buy, simple to grow.

One by one traditional software categories have moved to the Cloud, and this is as true for parts of the cybersecurity software world as any. Still, Data Security stands as one of the last holdouts. For decades it has been dominated by solutions that were difficult and complex to implement, involving infrastructure components that have to be integrated, configured and maintained. They are difficult to administer, requiring complex role/access analysis and maintenance as people and data move constantly in and out of the company. And most of them aren't built to scale massively the way a platform like Snowflake is. This means that when you connect a traditional data security product to Snowflake it is, in the words of a colleague of mine, "like connecting a bicycle to the back of a sports car."

At ALTR we continue to make it our mission to provide the world's most secure cloud platform for data consumption intelligence, governance, and protection in a way that is exceptionally simple. This is why we're so pleased to announce the general availability of our direct cloud integration with Snowflake.

This new integration means you can plug ALTR into your Snowflake instance seamlessly by providing only two pieces of information: the URL for your instance, and credentials that allow ALTR to access it. From there, you simply log into ALTR to instantly gain deep insight into your consumption of data and put in place smart access and consumption controls that protect your business against privacy and security threats, even those from the most privileged users.

Because ALTR is integrated directly into the Snowflake platform, there is no proxy server in between you and your data. You can change anything you do to access data, from using Snowflake itself to using any variety of third party analytics tools or inbound or outbound ETL processes. You can be in any Snowflake deployment, from AWS to Azure to GCP. You can scale massively up, or down. As things change, ALTR will keep pace transparently, continuing to give you observability and control over who is accessing data, and how much of it, in real time.

ALTR + Snowflake. Building out your focus on data, and doing so safely, has never been so simple.

If you'd like to learn more, request a demo or see first-hand how ALTR can work for you by signing up for a free 7-day trial.

Back in September, Lou Senko, Chief Availability Officer at Q2, put out a paper in ABA entitled “Leaping the Innovation Chasm by Securing the Data". In the 12-page article, he outlines how, too often, security and compliance are viewed as opposing forces to innovation and speed. But when done right, more security and resilience actually leads to faster innovation with less friction and less risk. Here are a few highlights from Lou's article as well as an update on how Q2 is continuing to leverage a security-first approach.

Understandably, Lou didn’t want to end up in the headlines as the latest software company to suffer a breach, yet he knew traditional methods of security were no longer working. Company after company keeps bolting on more and more security solutions that take endless resources to manage, hinder performance, and still leave data exposed. Lou decided to take a radical leap. His team at Q2 asked themselves, “What if we just assumed the network was already breached and the bad actors were already inside (a key principle of Zero Trust)? If the data is both the target and the risk point, what if we simply removed it?” That was the “aha!” moment: the best way to keep the data safe is to not have the data at all.

Resolved to remove all of Q2’s sensitive data from their own environment and securely store it in the cloud, his team needed a solution that could isolate and protect all their sensitive data in the cloud while providing intelligence and control over how data is being consumed. They chose ALTR for our proprietary tokenization as a service and for our shared belief that you should treat your sensitive data the way you treat your money. Using ALTR as an ATM for their data, Q2 could:

With ALTR, Lou’s vision came to life. Their data was secure plus they had better visibility and control over data consumption. To learn more about how Q2 uses ALTR to protect their data, read the full case study here.

But that’s not the end of it.

Being as innovation-driven as they are, it’s not surprising that Q2 continues to grow rapidly. Looking to maximize the value of their data, they invested in Snowflake’s Data Cloud, and Lou was once again thinking about security and risk efforts associated with a project like this. Coincidentally, their investment in Snowflake corresponded with the availability of ALTR’s new cloud integration for Snowflake.

To hear the rest of the story and learn how to achieve a security-first approach to re-platforming data in the cloud, sign up for our upcoming webinar with Lou and Snowflake’s Head of Cybersecurity Strategy Omer Singer.

I recently received a gift card to a popular coffee shop – score, right? When I tried to add it to the app to take advantage of the power and convenience of technology, it required my home address! Why would it need to know where I live in order to let me use a gift card I already had? No explanation, but it’s just another example of the kinds of data retailers are gathering. Maybe in the past I would have simply gone along, but like many other consumers, I’m increasingly skeptical of requests for data. This is making it harder for retailers, but also presents an opportunity to build a brand advantage.

Retailers and CPG companies can do amazing things for customers with data. At the Snowflake Retail and CPG Data Analytics Forum, I heard how companies can use hurricane forecasts to predict peanut butter purchases – I'm sure buyers making the trip to the store ahead of the storm appreciate having enough jars for everyone! I also heard how values-driven MOD Pizza used Snowflake, Tableau and a focus on privacy to enable their shift to new order channels, support employees, and deliver data-driven family and bundle offers to customers during the COVID-19 pandemic. Collecting and utilizing data to provide better service to customers can build affinity for the brand and deliver a powerful competitive advantage.

But after the increasing number of consumer data breaches in the headlines in recent years, personal data collection can also raise customer privacy alarms. In an eye-opening 2019 Pew Research Center study, 81% of Americans said that the potential risks they face because of data collection by companies outweigh the benefits. This might be because 72% say they personally benefit very little or not at all from the data companies gather about them. Additionally, 79% of adults are not confident that companies will admit mistakes and take responsibility if they misuse or compromise personal information, and 70% say their personal data is less secure than it was five years ago.

A recent McKinsey survey showed that consumers are more likely to trust companies that only ask for information relevant to the transaction and react quickly to hacks and breaches or actively disclose incidents. Consumers had a higher level of trust for industries with a history of handling sensitive data – financial and healthcare – but lower in other industries including retail.

Some retailers don’t quite realize the risk, or the opportunity. A separate McKinsey survey showed that 64 percent of retail marketing leaders don’t think regulations will limit current practices, and 51 percent said they don’t think consumers will limit access to their data. This has already been disproven with Virginia and Colorado rolling out state-level privacy regulations in 2021 and proposed federal data protection laws bubbling back up. And Apple’s recent deployment of privacy features, including App Tracking Transparency, empowers consumers to control what information apps gather about them on their phones.

It’s clear that retailers can’t continue to gather data at will with no consequences – consumers are awake to the risks now and demanding more. This gives retailers a chance to strengthen the relationship with their customers by meeting and exceeding their expectations around privacy.

If personalization creates a bond with customers, imagine how much more powerful that will be if consumers also trust you.

Luckily, based on the surveys and studies above we have insights into what customers want and how you can deliver that:

McKinsey asks the critical question: “How are you managing your data to derive value-creating analytic insight from personalization without causing value-destroying financial or operational loss due to privacy or security incidents?”

This isn’t just about consumer feelings or preferences – this is about risk to your business. All the value created by utilizing data for personalization can be wiped out in a second with one data incident. Make sure you’re prepared to minimize that risk and actually move your brand forward by building trust with your customers.

One of the most emotional, exciting and often intimidating journeys in one’s life is the process of starting a family. Couples and singles in the process of seeking reproductive assistance place complete trust in fertility organizations to help grow their families. They want to know that their most critical and personal information is safe from the reach of bad actors.

Egg donor and surrogate search service Donor Concierge recently partnered with us to oversee the data security and privacy integration of its FRTYL platform. The FRTYL platform is a state-of-the-art centralized database that brings a growing repository of surrogates and more than 15,000 egg donors together in one place so they can be matched more quickly with intended parents. The company’s patient-first mission is to create a global software platform that transforms how prospective parents use technology to find third-party fertility options by consolidating services such as sperm donation, embryo adoption, surrogacy, cryobanking, implantation, and pharmaceuticals. FRTYL streamlines every step of the donor matching process, including initial application, acceptance, registration and image uploads, all while reducing the administrative burden that comes with making the right match.

FRTYL’S NEED FOR SECURITY

All of the information being stored, sorted and shared between donors, surrogates, recipients and practitioners is extremely sensitive. And today’s HIPAA regulations impose strict guidelines about how this information can be stored. FRTYL knew that it needed cutting-edge technology that would safeguard their users’ privacy. Security solutions that simply stack software on top of an application only introduce more gaps for a breach of data.

Consider that the cost of cyberattacks last year reached more than $1 trillion, with organizations in the U.S. spending more on cybersecurity than on natural disaster recovery. On top of that, individuals with legitimate credentials instigated 58 percent of breaches, which makes insiders the leading suspects. For FRTYL, the solution had to be bulletproof.

TOKENIZING DATA THROUGH THE CLOUD

Enter ALTR. Through our partnership, FRTYL is the first donor matching service to leverage the powerful data security capabilities of blockchain. We’ve enabled FRTYL to safely store and retrieve data, as well as anonymize it so that it can be used as a trusted resource for parents seeking to build their families. This is done by removing sensitive information from the database, then fragmenting it and scattering the pieces across nodes in our cloud-based vault, the ALTRchain. All that remains in the database are nondescript tokens that point back to the first piece of the blockchain; there’s literally nothing left to steal. This secure network of nodes makes the data self-describing, quantum-safe and protected from any threat, including those posed by insiders. Because, unlike traditional tokenization and encryption methods, there is no key or map to be stolen, FRTYL can ensure its customers’ sensitive data is always safe.

This pioneering approach to protecting FRTYL’s data through tokenization is made possible by Amazon Web Services, the world’s most comprehensive and broadly adopted cloud platform. Because we use the AWS cloud to power our technology, we can guarantee our Data Security as a Service (DSaaS) customers that their information will stay private and protected. While the cloud may seem intimidating to some, our approach makes it a safe place to do business: data is fragmented across storage locations and cannot be reassembled by anyone other than the customer, not even those with privileged access to the cloud infrastructure.

We’re helping FRTYL realize its goal of transforming the way prospective parents use technology to find third-party fertility options. Gail Sexton Anderson, the founder of Donor Concierge and one of the fertility industry’s leading innovators and creative thinkers, told us that our work with FRTYL will give couples and singles “repose that their most precious personal information is protected.”

USING THE CLOUD TO MAKE THE CLOUD SAFER

Although the benefits of the cloud are undeniable, many organizations today feel they must give up privacy, control and security in exchange for efficiency and scalability. FRTYL is a great example of how our DSaaS approach means you don’t have to sacrifice a thing.