Format-Preserving Encryption: A Deep Dive into FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges associated with using padding in FPE.

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption method that follows the guidelines established by the National Institute of Standards and Technology (NIST). It is specified in NIST Special Publication 800-38G (FF3-1 was introduced in the draft Revision 1 as a revision of the original FF3) and is built on a Feistel network, a structure widely used in cryptographic designs. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves and processes them through multiple rounds, applying a keyed round function in each round.

2. Rounds: FF3-1 uses 8 rounds, where each round applies a round function to one half of the data and then combines the result with the other half using modular addition. The addition is done in the radix of the data rather than with XOR, so the result stays within the original alphabet. This process is repeated, alternating between the halves.

3. Key Usage: FF3-1 does not derive a separate series of subkeys. Every round calls AES with the same encryption key (the specification uses the key in byte-reversed form), and the rounds differ because the round number and part of the tweak are mixed into the AES input.

4. Tweakable Block Cipher: FF3-1 includes a tweakable block cipher mechanism, where a tweak (an additional input parameter) is used along with the key to add an extra layer of security. This makes it resistant to certain types of cryptographic attacks.

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.

1. Initialization: The plaintext is divided into two halves, and an initial tweak is applied. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.

2. Round Function: In each round, the round function takes one half of the data, the key, half of the tweak, and the round number as inputs. It encodes these into a single block and encrypts that block with AES to produce a pseudorandom output.

3. Combining Halves: The output of the round function is added to the other half using modular arithmetic (modulo the radix raised to the length of that half). The halves are then swapped, and the process repeats for the specified number of rounds.

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
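The steps above can be sketched with a toy Feistel cipher over decimal digits. This is a simplified illustration and not FF3-1 itself: HMAC-SHA256 stands in for the AES-based round function, the tweak is mixed in whole instead of being split in two, and the function names (`encrypt`, `decrypt`, `_round_value`) are assumptions made for this example. It should not be used to protect real data.

```python
import hashlib
import hmac

ROUNDS = 8  # same round count as FF3-1; everything else here is simplified


def _round_value(key: bytes, tweak: bytes, round_no: int, half: str) -> int:
    """Toy round function: HMAC-SHA256 stands in for the AES call in FF3-1."""
    msg = tweak + bytes([round_no]) + half.encode("ascii")
    return int.from_bytes(hmac.new(key, msg, hashlib.sha256).digest(), "big")


def _check(digits: str) -> None:
    if not (digits.isascii() and digits.isdigit() and len(digits) >= 2):
        raise ValueError("expected a string of at least two decimal digits")


def encrypt(key: bytes, tweak: bytes, digits: str) -> str:
    _check(digits)
    u = (len(digits) + 1) // 2
    a, b = digits[:u], digits[u:]          # split into two halves
    for i in range(ROUNDS):
        m = len(a)
        # Modular addition keeps the result an m-digit decimal number.
        c = (int(a) + _round_value(key, tweak, i, b)) % 10**m
        a, b = b, str(c).zfill(m)          # swap halves for the next round
    return a + b                           # recombine; same length as the input


def decrypt(key: bytes, tweak: bytes, digits: str) -> str:
    _check(digits)
    u = (len(digits) + 1) // 2
    a, b = digits[:u], digits[u:]
    for i in reversed(range(ROUNDS)):      # undo the rounds in reverse order
        m = len(b)
        c = (int(b) - _round_value(key, tweak, i, a)) % 10**m
        a, b = str(c).zfill(m), a
    return a + b
```

Calling `encrypt(key, tweak, "4111111111111111")` returns another 16-digit string, and `decrypt` with the same key and tweak returns the original value. Changing the tweak changes the ciphertext even when the key and plaintext stay the same.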

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Improved Performance: FPE algorithms like FF3-1 are designed to be efficient, ensuring minimal impact on system performance. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower trust environments: Using a product like ALTR’s FPE, data engineers can use the cipher-text of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

Padding is a technique used in encryption to ensure that the plaintext data meets the required minimum length for the encryption algorithm. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE:

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
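To make the minimum-length requirement concrete: the NIST specification requires the input domain to hold at least one million values (radix raised to the minimum length must be at least 1,000,000), which works out to six characters for decimal digits. The sketch below computes that minimum and shows one possible padding approach. The `pad` and `unpad` helpers and the choice to track the original length separately are assumptions for illustration, not a description of ALTR's implementation.

```python
def min_length(radix: int, domain_floor: int = 1_000_000) -> int:
    """Smallest length n (at least 2) such that radix**n >= domain_floor."""
    n, size = 1, radix
    while size < domain_floor:
        n += 1
        size *= radix
    return max(2, n)


def pad(value: str, radix: int = 10, pad_char: str = "0") -> tuple[str, int]:
    """Right-pad value to the minimum length; also return the original length.

    Hypothetical scheme: the original length must be kept somewhere, because
    "1234" and "123400" would otherwise pad to the same string.
    """
    return value.ljust(min_length(radix), pad_char), len(value)


def unpad(padded: str, original_len: int) -> str:
    """Strip the padding after decryption using the stored original length."""
    return padded[:original_len]


print(min_length(10))   # 6
print(pad("1234"))      # ('123400', 4)
```

The example also shows where the challenges come from: the padded (and therefore encrypted) value is longer than the original, and decryption only recovers the right value if the original length is tracked correctly.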

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

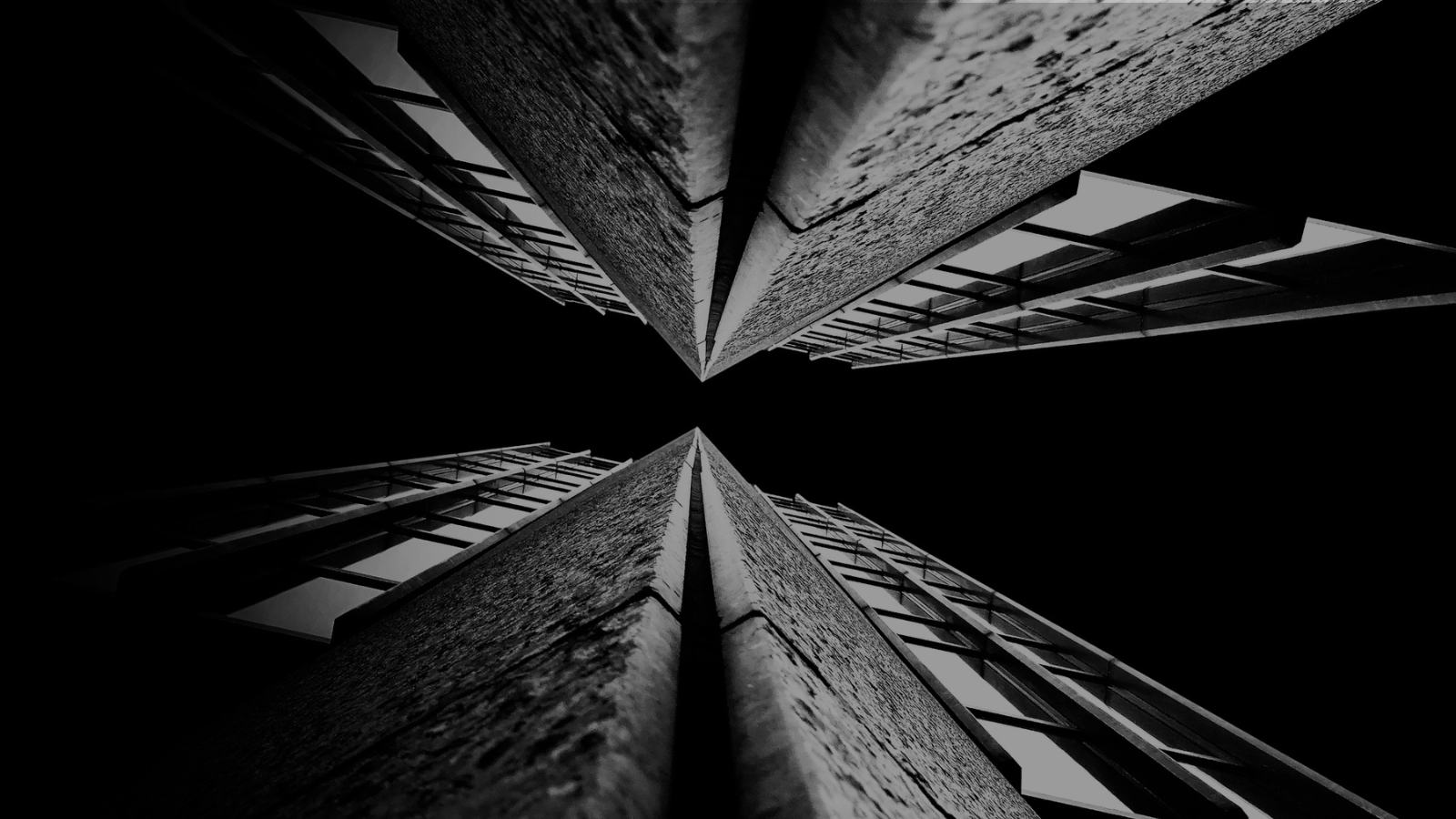

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still nervous wrecks. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI are causing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when the compute and storage are tightly coupled. Sharing data with trusted parties outside the walls of the data center securely is clunky at best, downright dangerous in most cases. And forget running your own GenAI models in your datacenter unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

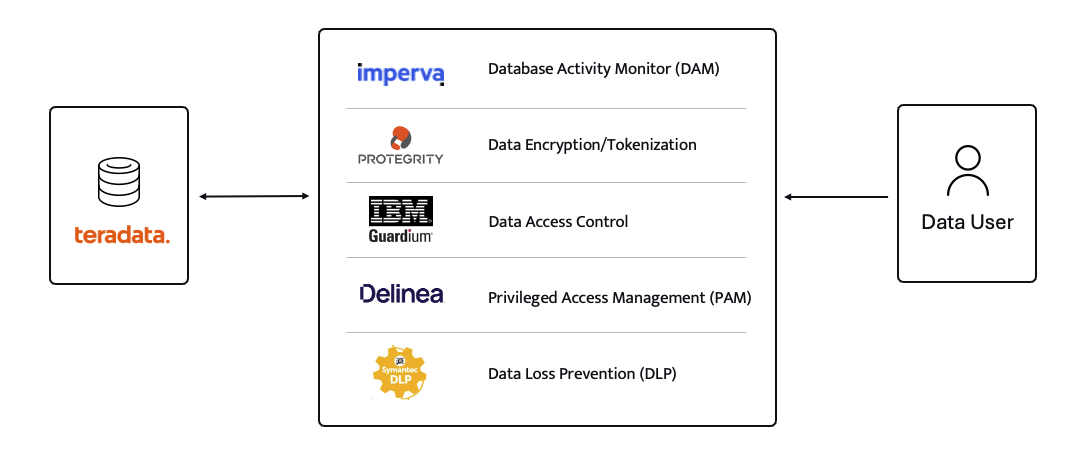

ALTR’s platform has been purpose-built to recreate and enhance the protections you relied on with Teradata once your data is in Snowflake. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR to help them with data security on Snowflake. Cool! A member of the Data & Analytics team tried our product, and it was love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

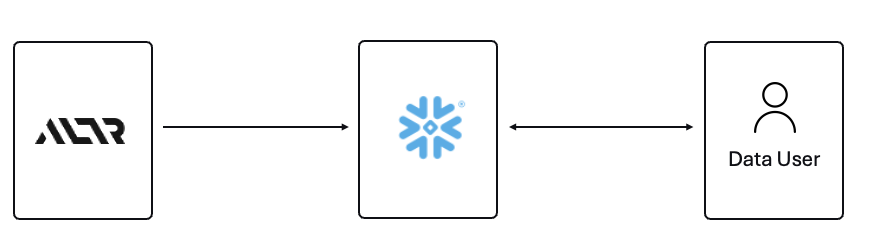

The CISO’s point of view was that ALTR’s security solution could be completely disabled, removed, and would not be helpful in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO, all of those things are possible. Here is what I wanted to say to the CISO if they had given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet powerful and long-reaching implications of its use:

One of the main points I would have liked to make to the CISO is that as a user of Snowflake, their responsibility to secure that ACCOUNTADMIN role is squarely in their court. By now I’m sure you have all seen the news and responses to the Snowflake compromised accounts that happened earlier this year. It is proven that unsecured accounts by Snowflake customers caused the data theft. There have been dozens of articles and recommendations on how to secure your accounts with Snowflake and even a mandate of minimum authentication standards going forward for Snowflake accounts. You can read more information here, around securing the ACCOUNTADMIN role in Snowflake.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. This is the purpose of ALTR’s Data Security Platform, to help you get a handle on part of the problem which is so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly. You must keep up.

2. You must be able to prove to the business that you have a least-privilege access mechanism. Data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges: terabytes of data growing daily, more users with more applications, and trusted third parties who want to collaborate with your data. All of this leads to an unmanageable set of internal processes that slow down the business and introduce risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt he had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team’s use of ALTR?” I don’t know the answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure and I am by no means saying ALTR can protect your Snowflake data 100% by using our platform. Data security is all about reducing risk. Control the things you can, monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015 the migration of corporate data to the cloud has rapidly accelerated. In 2015, an estimated 30% of corporate data was in the cloud; by 2022 that share had doubled to 60%, in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data has moved to the cloud, new challenges have presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. This brought on different cloud computing-related cost structures and new skillsets with new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate with the move to the cloud. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to Cloud Data Warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation and what feels like every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need for it to be cataloged, analyzed, and used with the promise of making our personal lives better for a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation would then begin, and world governments responded with regulations such as GDPR, CCPA and now most recently the European Union’s AI Act. The risk and fines have been in the billions. This is a story we already know well. Thus, Data Security and Privacy have become a critical function primarily for the obvious use case, compliance, and regulation. Yet only 11% of organizations have encrypted over 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enables real-time supply chain visibility and dynamic changes to pricing strategies, and lets organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real Time Analytics - Because FPE is an encryption method that returns data in the original format, the data keeps its length and structure and remains useful, so more data engineers, scientists and analysts can work with it without being exposed to sensitive information.

Data Sharing – FPE enables sharing of sensitive information, both personal and confidential, supporting secure collaboration and innovation alike.

Proactive Data Security – FPE allows for the anonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding to ransom a company that takes a more proactive approach, using FPE and other Data Security Platform features in combination.

Empowered Data Engineering – with FPE, data engineers can still build, test and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failure. Data validations and data quality checks for formats, lengths and more can be written and tested without exposing sensitive information. Federated and aggregation queries that join or group on encrypted values can still run without decryption, since the same input, key and tweak always produce the same ciphertext. Range queries will also execute, but FPE does not preserve ordering, so their results are only meaningful on decrypted values. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with proper rights to see the original values of data.

Cost Management – While FPE does not come close to solving Cost Management in its entirety, it can definitely contribute. We are seeing a need for FPE as an option instead of replicating data in the cloud to development, test, and production support environments. With data transfer, storage and compute costs, moving data across regions and environments can be really expensive. With FPE, data can be encrypted and decrypted with compute that is less expensive than organizations' current antiquated data replication jobs. This makes FPE a viable cost-savings option for producing production-ready data in non-production environments. Look for a future blog on this topic and all the benefits that come along.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well documented challenges in the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role and attribute-based access controls and data activity monitoring will be needed to have a proactive approach towards security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for Data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations as there have been many high-profile data breaches recently. There are several ways to secure the data including restricting access, masking, encrypting or tokenization. These can pose some challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

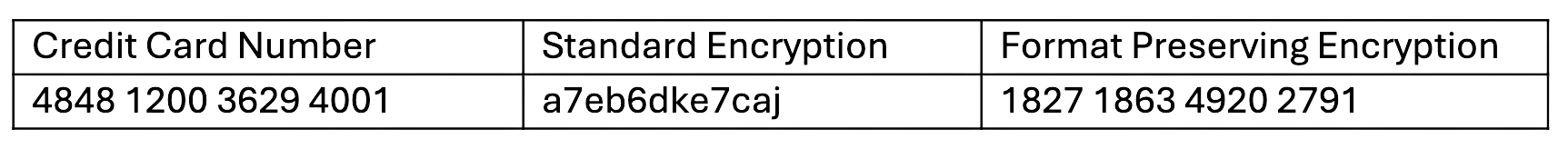

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

As you can see with a Standard Encryption type the result is a completely different output. This may result in it being incompatible with systems which require or expect a 16-digit numerical format. Using FPE the encrypted data still looks like a valid 16-digit number. This is extremely useful for where data must stay in a specific format for compatibility, compliance, or usability reasons.
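The difference in output format can be illustrated with a short sketch. Both transforms below are toy stand-ins built from HMAC-SHA256, chosen only so the example runs with the Python standard library: `conventional_style` mimics the shape of conventional ciphertext (arbitrary bytes, base64-encoded), and `digit_preserving` is a simple keyed per-digit shift. Neither is AES or FF3-1, neither is secure, and all names here are assumptions for illustration.

```python
import base64
import hashlib
import hmac
import re

CARD_FORMAT = re.compile(r"\d{16}")  # what a downstream system might expect


def keystream(key: bytes, label: bytes, n: int) -> bytes:
    """Derive n pseudorandom bytes from the key (toy construction)."""
    out, counter = b"", 0
    while len(out) < n:
        block = label + counter.to_bytes(4, "big")
        out += hmac.new(key, block, hashlib.sha256).digest()
        counter += 1
    return out[:n]


def conventional_style(key: bytes, card: str) -> str:
    """Stand-in for conventional encryption: raw bytes, base64-encoded."""
    ks = keystream(key, b"std", len(card))
    raw = bytes(p ^ k for p, k in zip(card.encode("ascii"), ks))
    return base64.b64encode(raw).decode("ascii")


def digit_preserving(key: bytes, card: str) -> str:
    """Toy format-preserving transform: shift each digit modulo 10."""
    ks = keystream(key, b"fpe", len(card))
    return "".join(str((int(d) + k) % 10) for d, k in zip(card, ks))


key = b"demo-key"
card = "4111111111111111"
for name, value in [("conventional", conventional_style(key, card)),
                    ("format-preserving", digit_preserving(key, card))]:
    ok = CARD_FORMAT.fullmatch(value) is not None
    print(f"{name}: {value} passes 16-digit check: {ok}")
```

The base64 output is 24 characters long and fails the 16-digit check, while the digit-preserving output passes it, which is the compatibility property the comparison above describes.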

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next the NIST algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then selectively be decrypted using ALTR’s access policies.
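The first step described above, analyzing a column to determine its format and length, might look something like the following sketch. This is a hypothetical illustration and not ALTR's implementation: the `infer_format` function and the `ALPHABETS` table are assumptions, and a real system would likely handle many more character sets and embedded separators.

```python
import string

# Candidate alphabets, ordered from smallest to largest (illustrative only).
ALPHABETS = {
    "numeric": string.digits,
    "lowercase": string.ascii_lowercase,
    "alphanumeric": string.digits + string.ascii_letters,
}


def infer_format(values: list[str]) -> tuple[str, int, int, int]:
    """Return (alphabet name, radix, min length, max length) for a column sample."""
    if not values:
        raise ValueError("cannot infer a format from an empty column")
    seen = set("".join(values))
    for name, alphabet in ALPHABETS.items():
        if seen <= set(alphabet):  # every character fits this alphabet
            lengths = [len(v) for v in values]
            return name, len(alphabet), min(lengths), max(lengths)
    raise ValueError("no known alphabet covers every character in the column")


print(infer_format(["5551234567", "5559876543"]))  # ('numeric', 10, 10, 10)
print(infer_format(["ab12", "Zx9"]))               # ('alphanumeric', 62, 3, 4)
```

The radix and length range found this way are the inputs an FPE algorithm needs in order to produce ciphertext over the same alphabet and of the same length as the original values.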

FPE offers several benefits for organizations that deal with structured data:

1. Adds extra layer of protection: Even if a system or database is breached the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations like PCI-DSS, HIPAA, and GDPR will mandate safeguarding, such as encryption, of sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

The data deluge is absolute. Organizations are swimming in an ever-growing sea of information, struggling to keep their heads above water. With its rigid processes and bureaucratic burdens, traditional data governance often feels like a leaky life raft – inadequate for navigating the dynamic currents of the modern data landscape.

Enter agile data governance, the data governance equivalent of a high-performance catamaran, swift and adaptable, ready to tackle any challenge the data ocean throws its way.

Traditional data governance often operates siloed, with lengthy planning cycles and a one-size-fits-all approach. Agile data governance throws this rigidity overboard. It's a modern, flexible methodology that views data governance as a collaborative, iterative process.

Here's the critical distinction: While traditional data governance focuses on control, agile data governance emphasizes empowerment. It fosters a data-savvy workforce, breaks down silos, and prioritizes continuous improvement to ensure data governance practices remain relevant and impactful.

Gone are the days of data governance operating in isolation. Agile fosters a spirit of teamwork, breaking down silos and bringing together data owners, analysts, business users, and IT professionals. Everyone plays a role in shaping data governance practices, ensuring they are relevant and meet real-world needs.

Forget lengthy upfront planning that quickly becomes outdated in the face of evolving data needs. Agile embraces a "test and learn" mentality, favoring iterative cycles. Processes are continuously refined based on ongoing feedback, data insights, and changing business priorities.

The data landscape is a living, breathing entity, constantly shifting and evolving. Agile data governance recognizes this reality. It's designed to bend and adapt, adjusting sails (figuratively) to navigate new regulations, integrate novel data sources, or align with evolving business strategies.

Agile data governance is not about control; it's about empowerment. It fosters a data-savvy workforce by prioritizing training programs that equip employees across the organization with the skills to understand, use, and govern data responsibly. Business users become active participants, not passive consumers, of data insights.

Agile data governance thrives on a culture of constant improvement. Regular assessments evaluate the effectiveness of data governance practices, identifying areas for refinement and ensuring that the program remains relevant and impactful.

Repetitive, mundane tasks are automated wherever possible. This frees up valuable human resources for higher-value activities like data quality analysis, user training, and strategic planning. Data classification, access control management, and dynamic data masking are prime candidates for automation.

Agile thrives on data-driven decision-making. Metrics and measurement are woven into the fabric of the program. Key performance indicators (KPIs) track the effectiveness of data governance initiatives, providing valuable insights to guide continuous improvement efforts. These metrics can encompass data quality measures, access control compliance rates, user satisfaction levels with data discoverability, and the impact of data insights on business outcomes.

The data landscape in 2024 is a rapidly evolving ecosystem. Here's why agile data governance is no longer optional but a strategic imperative:

Regulatory environments are becoming more dynamic than ever. Agile data governance allows organizations to adapt their practices swiftly to ensure continuous compliance with evolving regulations like data privacy laws (GDPR, CCPA) and industry-specific regulations.

Artificial intelligence (AI) is transforming decision-making across industries. Agile data governance ensures high-quality data feeds reliable AI models. The focus on clear data lineage and ownership within agile data governance aligns perfectly with the growing need for explainable AI.

Agile data governance empowers business users to access, understand, and utilize data for informed decision-making. This fosters a data-driven culture where valuable insights are readily available to those who need them most, driving innovation and improving business outcomes.

The iterative approach and automation focus of agile data governance streamline processes and free up valuable resources for higher-value activities. This allows organizations to navigate the complexities of the data landscape with efficiency.

Are your current data governance practices keeping pace with the ever-changing data landscape? Here are ten questions to assess your organization's agility:

1. Do different departments (IT, business users, data owners) collaborate to define and implement data governance practices?

2. Can your data governance processes adapt to accommodate new data sources, changing regulations, and evolving business needs?

3. Are business users encouraged to access and utilize data for decision-making?

4. Do you regularly evaluate the effectiveness of your data governance program and make adjustments as needed?

5. Are repetitive tasks like data lineage tracking and access control automated?

6. Do you track key metrics to measure the success of your data governance program?

7. Do you utilize an iterative approach with short planning, implementation, and improvement cycles?

8. Does your organization prioritize training programs to equip employees with data analysis and interpretation skills?

9. Are data governance policies and procedures clear, concise, and accessible to all relevant stakeholders?

10. Do business users feel confident finding and understanding the data they need to make informed decisions?

By honestly answering these questions, you can gain valuable insights into the agility of your data governance program. If your answers reveal a rigid, one-size-fits-all approach, it might be time to embrace the transformative power of agile data governance.

Agile data governance is not just a trendy buzzword; it's a critical approach for organizations in 2024 and beyond. By embracing its principles and building a flexible framework, organizations can transform their data from a burden into a powerful asset, propelling them toward a successful data-driven future.

Our customers are confused. Given the state of the world, it’s safe to say everyone is a little confused now. The confusion we’re concerned with today is about the markets ALTR plays in and how the analysts of the world – particularly Gartner – are breaking those down and making recommendations. What we’ll aim to do here is analyze the analysis. We’ll lay out the questions customers are asking about the markets and solutions for Data Security Posture Management (DSPM) and Data Security Platform (DSP), see what Gartner is saying about those today, offer some reasons why we think they are right, and finally show why the confusion is real.

Maybe that seems like a contradictory stance to take, but let’s not forget what F. Scott Fitzgerald told us: “The test of a first-rate intelligence is the ability to hold two opposing ideas in mind at the same time and still retain the ability to function.” By the end of this post, it should be clear that Gartner and others have only correctly identified a confusing time in data governance and security; they have not made things any more confusing.

Let’s start out where customers have told us they get confused. We’ll go right to the source and quote from Gartner’s own public statements on DSPM and DSP. First, let’s look at how they define Data Security Posture Management:

Data security posture management (DSPM) provides visibility as to where sensitive data is, who has access to that data, how it has been used, and what the security posture of the data stored, or application is.

We could pick that apart right away, but instead let’s immediately compare it with their definition of a Data Security Platform:

Data security platforms (DSPs) combine data discovery, policy definition and policy enforcement across data silos. Policy enforcement capabilities include format-preserving encryption, tokenization and dynamic data masking.

At first glance, these seem incredibly similar – and they are. However, there are important differences in the definitions’ text, in their implied targets, and in the implications of these factors. The easiest place to see a distinction is in the second part of the DSP definition: “policy definition and policy enforcement." The Data Security Platform does not only look at the “Posture” of that system. It is going to deliver a security solution for the data systems where it’s applied.

When talking to customers about this, they will often point out two details. First, they will say that if the DSP can’t at least discover the current policy of the data systems, then giving you ways to manage the protection isn’t much good. The subtlety here is that controlling the data policy implies that the solution would discover the current policy in order to control it going forward. (While it’s possible that some solution may give you policy control without policy discovery, ALTR gives you all those capabilities, so we don't have to worry about that.) The second thing they point out is that many of the vendors who are in the DSPM category also supply “policy definition and policy enforcement” in some way. That brings us to discussing the targets of these systems.

Something you will note as a common thread for the DSPM systems is how incredibly broad their support is for target platforms. They tend to support everything from on-prem storage systems all the way through cloud platforms doing AI and analytics like Snowflake. The trick they use to do this is that they are not concerned with the actual enforcement at that broad range, and that’s appropriate. Many of the systems they target, especially those on-prem, will have complicated systems that do policy definition and enforcement. Whether that’s something like Active Directory for unstructured data stored on disk or major platforms like SAP’s built-in security management capabilities, they are not looking for outside systems to get involved. However, the value of seeing the permissions and access people use at that broad scope can be very important. Seeing the posture of these systems is the point of the DSPM.

Of course, a subset of the systems will allow the DSPM to make effective changes easily, without requiring it to get too deep. If it’s about a simple API call or changing a single group membership, then the DSPM can likely do it. However, in systems with especially complex policies, those simple, single API calls give way to the “policy definition and policy enforcement” in the Data Security Platform definition. The DSP will get deep within the systems it targets. Often, part of the core value of a DSP is that it will simplify what are extremely complicated policy engines and give ways to plug these policy definition steps into the larger scope of systems building or the SDLC. That focus and depth on the actual controls in targeted systems is the main difference between DSPM and DSP. The Data Security Platform narrows the scope, but it deepens the capabilities to control policies and to deliver security and governance results.

The other important aspect of the distinction between these solutions is the Data Security Platform capabilities for Data Protection. That’s the “format-preserving encryption, tokenization and dynamic data masking” part of the DSP definition. Many data systems will have built-in solutions for data masking. Almost none will have built-in tokenization or format-preserving encryption (FPE). If these capabilities are crucial to delivering the data products and solutions an organization needs, then DSP is where they will look for solutions. This not only impacts data use in production settings, but often is associated with development and testing use cases where use of sensitive information is forbidden but use of realistic data is required.

Let’s recognize the elephant in the analysis: DSPM and DSP are going to have overlap. If you’ve been around long enough or have read deeply enough, that should be as shocking as the fact that (if you’re in an English-speaking part of the world) the name of this day ends in “y.” Could the DSP forgo all the core capabilities of DSPM and just deliver the deeper policy and data protection features? If the DSP vendors could be sure that every customer will have DSPM to integrate with, sure. That isn’t always the case. Even if it were, there’s no guarantee that the politics and process at an organization would allow such integration, even where it is technically possible. Could DSPM simply expand to cover all the depth of DSP including the Data Protection features? The crucial word in there is “simply.” If it were simple they would have done it already.

It’s certain that you will see the market consolidate over time, with players merging, expanding, and being bought to make suites. Right now, organizations have real-world challenges, and they need solutions despite the overlaps. So DSPM and DSP will stay independent until market forces make it necessary for them to change.

The overlaps, the similar goals, and the limits of language in describing Data Security Posture Management and Data Security Platforms are the source of the confusion. Hopefully, it’s now clear that DSP is the deeper solution that gives you everything you need to solve problems all the way down to Data Protection. DSPM will continue to add more platforms to grow horizontally. DSP will continue to dive deeply into the platforms they support today and cautiously add new platforms as the market needs them. If you started this a little mad at the Gartners of the world, maybe you now see how they are right to give you two different markets with so much in common. Like with many things in life, if you are confused, it only means you are sane and paying attention. You keep paying attention, and we’ll keep helping you stay sane.

Data privacy laws are not just a legal hurdle – they're the key to building trust with your customers and avoiding a PR nightmare. The US, however, doesn't have one single, unified rulebook. It's more like a labyrinth – complex and ever-changing.

Don't worry; we've got your back. This guide will be your compass, helping you navigate the key federal regulations and state-level laws that are critical for compliance in 2024.

Data breaches are costly and damaging. But even worse is losing the trust of your customers. Strong data privacy practices demonstrate your commitment to safeguarding their information, a surefire way to build loyalty in a world where privacy concerns are at an all-time high.

Think of it this way: complying with data privacy laws isn't just about checking boxes. It's about putting your customers first and building a solid foundation for your business in the digital age.

The US regulatory landscape is an intricate web of federal statutes and state-specific legislation. Here's a breakdown of some of the key players:

These federal laws set the baseline for data privacy across the country.

Privacy Act of 1974 restricts how federal agencies can collect, use, and disclose personal information. It grants individuals the right to access and amend their records held by federal agencies.

Health Insurance Portability and Accountability Act (HIPAA) (1996) sets national standards for protecting individuals' medical records and other health information. It applies to healthcare providers, health plans, and healthcare clearinghouses.

Gramm-Leach-Bliley Act (GLBA) (1999): Also known as the Financial Services Modernization Act, GLBA safeguards the privacy of your financial information. Financial institutions must disclose their information-sharing practices and implement safeguards for sensitive data.

Children's Online Privacy Protection Act (COPPA) (2000) protects the privacy of children under 13 by regulating the online collection of personal information from them. Websites and online services must obtain verifiable parental consent before collecting, using, or disclosing personal information from a child under 13.

Driver's Privacy Protection Act (DPPA) (1994) restricts the disclosure and use of personal information obtained from state motor vehicle records. It limits the use of this information for specific purposes, such as law enforcement activities or vehicle safety recalls.

Video Privacy Protection Act (VPPA) (1988) prohibits the disclosure of individuals' video rental or sale records without their consent. This law aims to safeguard people's viewing habits and protect their privacy.

The Cable Communications Policy Act of 1984 includes provisions for protecting cable television subscribers' privacy. It restricts the disclosure of personally identifiable information without authorization.

Fair Credit Reporting Act (FCRA) (1970) regulates consumer credit information collection, dissemination, and use. It ensures fairness, accuracy, and privacy in credit reporting by giving consumers the right to access and dispute their credit reports.

Telephone Consumer Protection Act (TCPA) (1991) combats unwanted calls by imposing restrictions on unsolicited telemarketing calls, automated dialing systems, and text messages sent to mobile phones without consent.

Controlling the Assault of Non-Solicited Pornography and Marketing Act of 2003 (CAN-SPAM Act) establishes rules for commercial email, requiring senders to provide opt-out mechanisms and identify their messages as advertisements.

Family Educational Rights and Privacy Act (FERPA) (1974) protects the privacy of students' educational records. It grants students and their parents the right to inspect and amend these records while restricting their disclosure without consent.

Many states are taking matters into their own hands with comprehensive data privacy laws. California, Virginia, and Colorado are leading the charge, with more states following suit. These laws often grant consumers rights to access, delete, and opt out of the sale of their personal information. Here are some of the critical state laws to consider:

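California Consumer Privacy Act (CCPA) (2018) was a landmark piece of legislation establishing a new baseline for consumer data privacy rights in the US. It grants California residents the right to know what personal information a business collects about them, to have that information deleted, and to opt out of its sale.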

Colorado Privacy Act (2021): Similar to the CCPA, it provides consumers with rights to manage their data and imposes obligations on businesses for data protection.

Connecticut Personal Data Privacy and Online Monitoring Act (2023) specifies consumer rights regarding personal data, online monitoring, and data privacy.

Delaware Personal Data Privacy Act (2023) outlines consumer rights and requirements for personal data protection.

Florida Digital Bill of Rights (2023) focuses on entities generating significant revenue from online advertising, outlining consumer privacy rights.

Indiana Consumer Data Protection Act (2023) details consumer rights and requirements for data protection.

Iowa Consumer Data Protection Act (2023) describes consumer rights and requirements for data protection.

Montana Consumer Data Privacy Act (2023) applies to entities conducting business in Montana, outlining consumer data protection requirements.

New Hampshire Privacy Act (2024): This act applies to entities conducting business in New Hampshire, outlining consumer data protection requirements.

New Jersey Data Protection Act (2024): This act applies to entities conducting business in New Jersey, outlining consumer data protection requirements.

Oregon Consumer Privacy Act (2023): This act details consumer rights and rules for data protection.

Tennessee Information Protection Act (2023) governs data protection and breach reporting.

Texas Data Privacy and Security Act (2023) describes consumer rights and data protection requirements for businesses.

Utah Consumer Privacy Act (2023) provides consumer rights and emphasizes data protection assessments and security measures.

Virginia Consumer Data Protection Act (2021) grants consumers rights to access, correct, delete, and opt out of their data processing.

Data doesn't respect borders. The EU's General Data Protection Regulation (GDPR) is a robust international regulation that applies to any organization handling the data of EU residents. Understanding the GDPR's requirements for consent, data security, and data subject rights is essential for businesses operating globally.

Conquering the data privacy maze requires vigilance and a proactive approach. Here are some critical steps:

Identify which federal and state laws apply to your business and understand their specific requirements. Conduct a comprehensive data inventory to understand what personal information you collect, store, and use.

Develop clear and concise data privacy policies that outline your data collection practices and how you safeguard information. Make these policies readily available to your customers.

Give your customers control over their data by providing mechanisms to access, delete, and opt out of data sharing. Be upfront about how you use their data and respect their choices.

Implement robust security measures to protect customer data from unauthorized access, disclosure, or destruction.

The data privacy landscape is constantly evolving. Regularly review and update your policies and procedures to comply with emerging regulations. Appoint a team within your organization to stay abreast of these changes.

The data privacy landscape is complex and constantly evolving, but it doesn't have to be overwhelming. By understanding the key regulations, taking a proactive approach, and building a culture of compliance, you can emerge as a stronger, more trusted organization. In today's data-driven world, prioritizing data privacy isn't just good practice – it's essential for building lasting customer relationships and achieving long-term success.

Data has undeniably become the new gold in the swiftly evolving digital transformation landscape. Organizations across the globe are mining this precious resource, aiming to extract actionable insights that can drive innovation, enhance customer experiences, and sharpen competitive edges. However, the journey to unlock the true value of data is fraught with challenges, often likened to navigating a complex labyrinth where every turn could lead to new discoveries or unforeseen obstacles. This journey necessitates a robust data infrastructure, a skilled ensemble of data engineers, analysts, and scientists, and a meticulous data consumption management process. Yet, as data operations teams forge ahead, making strides in harnessing the power of data, they frequently encounter a paradoxical scenario: the more progress they make, the more the demand for data escalates, leading to a cycle of growth pains and inefficiencies.

One of the most significant bottlenecks in this cycle is the considerable amount of time and resources devoted to data governance tasks. Traditionally, responsibility for data control and protection has been shouldered by data engineers, data architects, and Database Administrators (DBAs). On the surface, this seems logical – these individuals move data from one repository to another and possess the necessary expertise in SQL coding, a skill most tools require to grant and restrict access. But is this alignment of responsibilities the most efficient use of their time and talents?

The answer, increasingly, is no.

While data engineers, DBAs and data architects are undoubtedly skilled, their actual value lies in their ability to design complex data pipelines, craft intricate algorithms, and build sophisticated data models. Relegating them to mundane data governance tasks underutilizes their potential and diverts their focus from activities that could yield far greater strategic value.

Imagine the scenario: A data scientist, brimming with the potential to unlock groundbreaking customer insights through advanced machine learning techniques, finds themselves bogged down in the mire of access control requests, data masking procedures, and security audit downloads.

This misallocation of expertise significantly hinders the ability of data teams to extract the true potential from the organization's data reserves.

Enter the paradigm shift: data governance automation. This transformative approach empowers organizations to delegate the routine tasks of data governance and security to dedicated teams equipped with no-code control and protection solutions.

Solutions like ALTR offer a platform that empowers data teams to quickly and easily check off complex data governance tasks, including:

Leverage automated, tag-based, column and row access controls on PII/PHI/PCI data.

Protect sensitive data with column-based and row-based access policies and dynamic data masking and scale policy creation with attribute-based and tag-based access control.

Maintain a comprehensive data access and usage patterns record, facilitating security audits and regulatory compliance.

Receive real-time data activity monitoring, policy anomalies, and alerts and notifications.
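To make the idea of tag-based column controls with dynamic masking a little more concrete, here is a minimal Python sketch. It is not ALTR's implementation or API. The column names, tags, roles, and the `apply_policy` helper are all hypothetical, invented only to show how a tag on a column can drive what a given role sees at query time.

```python
# Hypothetical column tags, roles, and policy table, invented for illustration.
COLUMN_TAGS = {
    "email": {"PII"},
    "diagnosis": {"PHI"},
    "card_number": {"PCI"},
    "order_total": set(),  # untagged columns are not restricted
}

# For each tag, the roles allowed to see the clear value (an assumption).
TAG_POLICY = {
    "PII": {"support", "compliance"},
    "PHI": {"compliance"},
    "PCI": {"payments"},
}


def mask(value: str) -> str:
    """Hide all but the last four characters of a value."""
    return "*" * max(len(value) - 4, 0) + value[-4:]


def apply_policy(row: dict, role: str) -> dict:
    """Return a copy of the row with columns masked according to their tags."""
    result = {}
    for column, value in row.items():
        tags = COLUMN_TAGS.get(column, set())
        # A role sees the clear value only if every tag on the column allows it.
        allowed = all(role in TAG_POLICY[tag] for tag in tags)
        result[column] = value if allowed else mask(str(value))
    return result


row = {
    "email": "ana@example.com",
    "diagnosis": "asthma",
    "card_number": "4111111111111111",
    "order_total": "42.50",
}
print(apply_policy(row, "support"))
print(apply_policy(row, "payments"))
```

The point of the design is that the policy is attached to the tag rather than to each column, so tagging a new column as PII is enough to bring it under the existing rule.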

With more time at their disposal, data teams can delve deeper into data, employing advanced analytics techniques and AI models to uncover previously elusive insights. This could lead to breakthrough innovations, more personalized customer experiences, and data-driven decision-making across the organization.

Data teams can spearhead initiatives to democratize data access, enabling a broader base of users to engage with data directly. Organizations can cultivate a data-driven culture where insights fuel every department's decision-making processes by implementing intuitive, self-service analytics platforms and conducting data literacy workshops.

Attention can be turned towards optimizing the data infrastructure for scalability, performance, and cost-efficiency. This includes adopting cloud-native services, containerization, and serverless architectures that can dynamically scale to meet the fluctuating demands of data workloads.

With the foundational tasks of data governance automated, data teams can focus on developing new data products and services. This could range from predictive analytics tools for internal use to data-driven applications that enhance customer engagement or open new revenue streams.

Finally, data teams could invest time in building collaborative ecosystems and forging partnerships with other organizations, academia, and open-source communities. These ecosystems can foster innovation, accelerate the adoption of best practices, and enhance the organization's capabilities through shared knowledge and resources.

Automating data governance tasks presents a golden opportunity for data teams to realign their focus toward activities that maximize the strategic value of data. By embracing this shift, organizations can alleviate the growing pains associated with data management and pave the way for a future where data becomes the linchpin of innovation, growth, and competitive advantage. The question then is not whether data teams should adopt data governance automation but how quickly they can do so to unlock their full potential.

Let's face it: your current data security strategy is probably as outdated as a dial-up modem. You're still relying on clunky, manual processes, struggling to keep pace with ever-evolving regulations, and dreading the thought of a potential data breach. It's time to ditch the Stone Age tools and step into the ALTR era.

ALTR isn't just another data security platform; it's a game-changer. It's the Excalibur you've been searching for, ready to slay the dragons of data security challenges and protect your kingdom (read: organization) from the ever-present threats.

Data classification is where the battle lines are drawn in data security. Yet, many organizations are stuck with rudimentary checkbox approaches that barely scrape the surface of what's needed. ALTR challenges this status quo by offering an intelligent, dynamic data classification system that doesn't just identify sensitive data but understands it. With ALTR, you're not just tagging data; you're gaining deep insights into its nature, usage, and risk profile. This isn't just classification; it's a strategic reconnaissance of your data landscape, enabling precise, informed decisions about access and security policies.

In data protection, static defenses are as outdated as castle moats. ALTR brings the agility and adaptability of dynamic data masking to the forefront. Imagine your sensitive data cloaked in real-time, visible only to those with the right 'magical' keys. This isn't just about hiding data; it's about creating a flexible, responsive shield that adjusts to context, user, and data sensitivity, ensuring that your data remains protected in storage and in use.

With ALTR, database activity monitoring evolves from a passive logbook to an active, all-seeing eye that watches over your data landscape. This feature isn't just about tracking access; it's about understanding behavior, detecting anomalies, and preempting threats before they manifest. ALTR doesn't just alert you to breaches; it helps prevent them by offering insights into data access patterns, ensuring that any deviation from the norm is detected and dealt with in real-time.

In a world where data breaches are a matter of when, not if, ALTR's tokenization vault offers the ultimate sleight of hand—making your sensitive data vanish, replaced by indecipherable tokens. This is more than encryption; it's a transformation that renders data useless to thieves, all while maintaining its utility for your business processes. With ALTR, tokenization isn't just a security measure; it's a strategic move that protects your data without compromising performance or functionality.

ALTR's Format Preserving Encryption (FPE) challenges the traditional trade-offs between data usability and security. With FPE, your data remains operational, retaining its original form and function, yet securely encrypted to ward off prying eyes. This feature is a game-changer, ensuring that your data can continue fueling business processes and insights while securely locked away from unauthorized access.

Data access governance with ALTR is not about looking back at what went wrong; it's about looking ahead and preventing breaches before they happen. This is governance with teeth, offering not just oversight but foresight, enabling you to anticipate risks, enforce policies proactively, and ensure that every access to sensitive data is justified, monitored, and compliant with the highest security standards.

It's time to shed the cumbersome, outdated tools and strategies holding your data governance efforts back. The era of treating data security and compliance as burdensome chores is over. With ALTR, you're not just upgrading your technology stack; you're revolutionizing your entire approach to data governance. This isn't just a step forward; it's a leap into a new realm of possibilities where data security becomes your strength, not your headache.

Your data is the prize in the digital battlefield, and ALTR is your ultimate defense mechanism. By embracing ALTR, you're not just mitigating the risk of data breaches; you're rendering your data fortress impregnable. With dynamic data masking, tokenization, and format-preserving encryption, sensitive information becomes a moving target, elusive and indecipherable to unauthorized entities. This is data security reimagined, where your defenses evolve in real-time, staying several steps ahead of potential threats.

The labyrinth of data protection regulations can be daunting, with every misstep risking heavy penalties and reputational damage. ALTR transforms this maze into a clear path, simplifying compliance with its intelligent data governance framework. Whether GDPR, HIPAA, CCPA, or any other regulatory acronym, ALTR equips you to meet and exceed these standards with minimal effort. Say goodbye to the endless compliance checklists and welcome a solution that embeds regulatory adherence into the very fabric of your data governance strategy.

In the past, enhancing data security often meant compromising efficiency, but ALTR changes the game. By automating data classification, access governance, and policy enforcement, ALTR frees your teams from the quagmire of manual processes. This means less time spent on routine data governance tasks and more time available for strategic initiatives that drive business growth. Operational efficiency isn't just about doing things faster; it's about doing them smarter, and that's precisely what ALTR enables.

Knowledge is power, especially when managing and protecting your data. ALTR doesn't just secure your data; it shines a light on it, offering unprecedented insights into how, when, and by whom your data is accessed. These insights aren't just numbers and graphs; they're actionable intelligence that can inform your data governance policies, identify potential security risks, and uncover opportunities to optimize data usage. With ALTR, data insights become a strategic asset, driving informed decision-making across the organization.

Stop struggling with the relics of the past. It's time to embrace the future of data governance with ALTR, where data security, compliance, efficiency, and insights converge to propel your organization into a new era of digital excellence.

In an era where digital footprints are more significant than ever, the question isn't whether you should revisit your data security policy but how urgently you need to do so. With escalating cyber threats, evolving compliance landscapes, and sophisticated hacking techniques, the sanctity of data security has never been more precarious. As we navigate this digital dilemma, it's imperative to ask: Is your data security policy robust enough to withstand the challenges of today's cyber ecosystem?

Recent years have witnessed an unprecedented spike in cyberattacks, targeting not just large corporations but small businesses and individuals alike. From ransomware attacks that lock out users from their own data to phishing scams that trick individuals into handing over sensitive information, the arsenal of cybercriminals is both vast and evolving. The question remains: Is your current data security policy equipped to fend off these modern-day digital marauders?

As if the threat landscape wasn't daunting enough, businesses today also grapple with a labyrinth of regulatory requirements. GDPR, CCPA, and HIPAA, the alphabet soup of data protection laws, are confusing and comprehensive. Each of these regulations mandates stringent data protection measures, and non-compliance can result in hefty fines and irreparable damage to reputation. It's crucial for your data security policy to not only protect against cyber threats but also ensure compliance with these ever-changing legal frameworks.

Perhaps the most unpredictable aspect of data security is the human element. Studies suggest that many data breaches result from human error or insider threats. Whether it's a well-meaning employee clicking on a malicious link or a disgruntled worker leaking sensitive information, the human factor can often be the weakest link in your data security chain. A robust data security policy must address this variability, incorporating comprehensive training programs and strict access controls to mitigate the risk of human-induced breaches.

The rapid advancement of technology brings with it new challenges in data security. The rise of IoT devices, the proliferation of cloud computing, and the advent of AI and machine learning have opened new frontiers for cybercriminals to exploit. Each of these technologies, while transformative, also introduces new vulnerabilities. Data security policies must evolve in tandem with these technological advancements, ensuring they address the unique challenges posed by each new wave of innovation.

So, what does a robust data security policy look like today? Here are the key elements:

Clearly defines the reasons behind the policy, such as protecting sensitive information, ensuring privacy, and complying with legal and regulatory requirements.

Outlines the extent of the policy's applicability, specifying which data, systems, personnel, and departments are covered. It should clarify whether the policy applies to all data types or only specific classifications and whether it includes both digital and physical data formats.

Establishes categories for data based on its sensitivity and the level of protection it requires. Common classifications include Public, Internal Use Only, Confidential, and Highly Confidential.

Specifies handling requirements for each classification level, including storage, transmission, and sharing protocols. This ensures that more sensitive data receives higher levels of protection.
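As a rough illustration of how classification levels and their handling requirements can be written down in a checkable form, here is a small Python sketch. The four levels come from the text above. The specific handling rules and the `violations` helper are assumptions made up for this example, and a real policy would define its own.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Classification levels named in the text, ordered by sensitivity."""
    PUBLIC = 0
    INTERNAL_USE_ONLY = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


# Hypothetical handling rules per level (assumed values, not a standard).
HANDLING = {
    Classification.PUBLIC: {
        "encrypt_at_rest": False, "encrypt_in_transit": False, "external_sharing": True},
    Classification.INTERNAL_USE_ONLY: {
        "encrypt_at_rest": False, "encrypt_in_transit": True, "external_sharing": False},
    Classification.CONFIDENTIAL: {
        "encrypt_at_rest": True, "encrypt_in_transit": True, "external_sharing": False},
    Classification.HIGHLY_CONFIDENTIAL: {
        "encrypt_at_rest": True, "encrypt_in_transit": True, "external_sharing": False},
}


def violations(level: Classification, *, encrypted_at_rest: bool,
               encrypted_in_transit: bool, shared_externally: bool) -> list[str]:
    """List the handling rules that a data set at this level currently breaks."""
    rules = HANDLING[level]
    problems = []
    if rules["encrypt_at_rest"] and not encrypted_at_rest:
        problems.append("must be encrypted at rest")
    if rules["encrypt_in_transit"] and not encrypted_in_transit:
        problems.append("must be encrypted in transit")
    if shared_externally and not rules["external_sharing"]:
        problems.append("must not be shared externally")
    return problems


print(violations(Classification.CONFIDENTIAL, encrypted_at_rest=False,
                 encrypted_in_transit=True, shared_externally=True))
```

Keeping the rules in one table makes the "more sensitive data receives higher levels of protection" principle easy to review at a glance.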

Identifies individuals or departments responsible for different types of data, outlining their responsibilities regarding data accuracy, access control, and compliance with the security policy.

Defines the role of the security team or Chief Information Security Officer (CISO) in overseeing and enforcing the data security policy.

Clarifies the responsibilities of general users, including adherence to security practices, reporting suspected breaches, and understanding the implications of policy violations.

Details the mechanisms for granting, reviewing, and revoking access to data, ensuring that individuals have access only to the data necessary for their role.

Outlines the authentication protocols required to access different types of data, including multi-factor authentication, passwords, and biometric verification.

Specifies when and how data should be encrypted, particularly for sensitive information in transit and at rest.

Addresses the protection of physical assets, including servers, data centers, and paper records, outlining measures like access control systems and surveillance.

Covers security measures for user devices that access the organization's network, including antivirus software, firewalls, and secure configurations.

Defines how long different types of data should be retained based on legal, regulatory, and business requirements.

Details methods for securely disposing of data that is no longer needed, ensuring that it cannot be recovered or reconstructed.

Provides a clear, step-by-step guide for responding to data security incidents, including identification, containment, eradication, recovery, and post-incident analysis.

Outlines the procedure for reporting security incidents, including who should be notified and in what timeframe.

Mandates ongoing security awareness training for all employees, tailored to their specific roles and the data they handle.

Includes initiatives to keep data security top of mind for employees, such as regular updates, posters, and security tips.

Establishes a regular schedule for reviewing and updating the data security policy to ensure it remains relevant amid changing threats, technologies, and business practices.

Describes the process for proposing, reviewing, and implementing amendments to the policy, ensuring that changes are documented and communicated to all relevant parties.

Identifies relevant legal and regulatory requirements that the policy helps to address, such as GDPR, HIPAA, or PCI DSS.

Outlines the legal implications of policy violations for the organization and individual employees, including potential penalties and disciplinary actions.

In light of the evolving threat landscape and the complex regulatory environment, revisiting your data security policy is not just advisable; it's imperative. The cost of complacency can be catastrophic, ranging from financial losses to a tarnished reputation and legal repercussions. The time to act is now. By fortifying your defenses, staying abreast of regulatory changes, and fostering a culture of security, you can safeguard your organization against the multifaceted threats of the digital age. Remember, in data security, vigilance is not just a virtue; it's a necessity.

Protecting sensitive data is paramount in today's digital landscape. But choosing the right armor for the job can be confusing. Two major contenders dominate the data governance and data security ring: Format-Preserving Encryption (FPE) and Tokenization. While both seek to safeguard information, their mechanisms and target scenarios differ significantly.

Format-preserving encryption is a cryptographic technique that secures sensitive data while preserving its original structure and layout. FPE achieves this by transforming plaintext data into ciphertext within the same format, ensuring compatibility with existing data structures and applications. Unlike traditional encryption methods, which often produce ciphertext of different lengths and formats, FPE generates ciphertext that mirrors the length and character set of the original plaintext.
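To show what "same length, same character set" means in practice, here is a toy Python sketch of a format-preserving cipher over digit strings. This is deliberately not FF3-1 and not secure: it is a simplified Feistel construction that uses HMAC-SHA256 as its round function and has no tweak. The round count and the function names `encrypt_digits` and `decrypt_digits` are assumptions made for illustration only, so do not use it to protect real data.

```python
import hashlib
import hmac

ROUNDS = 8  # illustrative choice for this toy cipher


def _round_value(key: bytes, round_no: int, half: str, modulus: int) -> int:
    """Pseudo-random integer derived from the key, round number, and one half."""
    msg = f"{round_no}:{half}".encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def _check(text: str) -> None:
    if not (text.isascii() and text.isdigit()) or len(text) < 2:
        raise ValueError("input must be a string of at least two ASCII digits")


def encrypt_digits(key: bytes, plaintext: str) -> str:
    """Encrypt a digit string into a digit string of the same length."""
    _check(plaintext)
    split = len(plaintext) // 2
    left, right = plaintext[:split], plaintext[split:]
    for i in range(ROUNDS):
        width = len(left)
        modulus = 10 ** width
        # Combine the left half with a keyed function of the right half,
        # using addition modulo 10**width so the result stays all digits.
        mixed = (int(left) + _round_value(key, i, right, modulus)) % modulus
        left, right = right, str(mixed).zfill(width)
    return left + right


def decrypt_digits(key: bytes, ciphertext: str) -> str:
    """Invert encrypt_digits by running the rounds backwards."""
    _check(ciphertext)
    split = len(ciphertext) // 2
    left, right = ciphertext[:split], ciphertext[split:]
    for i in reversed(range(ROUNDS)):
        width = len(right)
        modulus = 10 ** width
        previous = (int(right) - _round_value(key, i, left, modulus)) % modulus
        left, right = str(previous).zfill(width), left
    return left + right


key = b"demo-key-not-for-production"
card = "4111111111111111"
encrypted = encrypt_digits(key, card)
print(encrypted, len(encrypted) == len(card))
print(decrypt_digits(key, encrypted) == card)
```

Because the ciphertext is still a 16-digit string, it fits into the same database column and passes the same length and character checks as the original value, which is the property the paragraph above describes.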

FPE allows companies to encrypt sensitive data while preserving the format required by existing systems, applications, or databases. This means they can integrate encryption without needing to extensively modify their data structures or application logic, minimizing disruption and avoiding potential errors or system failures arising from significant changes to established data formats or application workflows.

In some cases, the functionality of applications or systems may rely on specific data formats. FPE allows companies to encrypt data while preserving this functionality, ensuring that encrypted data can still be used effectively by applications and processes.

FPE algorithms are designed to be efficient and fast, allowing for encryption and decryption operations to be performed with minimal impact on system performance. This is particularly important for applications and systems where performance is critical.

When migrating data between different systems or platforms, maintaining the original data format can be essential to ensure compatibility and functionality. FPE allows companies to encrypt data during migration while preserving its format, simplifying the migration process.

Tokenization is a data protection technique that replaces sensitive information with randomly generated tokens. Unlike format-preserving encryption, which uses algorithms to transform data into ciphertext, tokenization does not derive the token mathematically from the data. Instead, it generates a unique token for each piece of sensitive information and stores the original value in a secure database or token vault (read more about ALTR's PCI-compliant vaulted tokenization offering). The original data is then replaced with the corresponding token, removing any direct association between the sensitive information and its tokenized form.

Tokenization helps improve security by replacing sensitive data such as credit card numbers, bank account details, or personal identification information with tokens. Since tokens have no intrinsic value and are meaningless outside the system they're used in, malicious actors cannot exploit them even if intercepted.

Scalability is a crucial strength of tokenization systems, stemming from their straightforward mapping of original data to tokens. This simplicity enables easy management and facilitates seamless scalability, empowering companies to manage substantial transaction volumes and data loads without compromising security or performance, all while minimizing overhead. This scalability is especially vital in sectors with high transaction rates, like finance and e-commerce, where robust and efficient data handling is paramount.

Tokenization can facilitate interoperability between different systems and platforms by providing a standardized method for representing and exchanging sensitive data without compromising security.

Tokenization systems often offer straightforward integration with existing IT infrastructure and applications. Many tokenization solutions provide APIs or libraries, allowing developers to incorporate tokenization into their systems easily. This ease of integration can simplify adoption and reduce development time drastically.

Consider a financial institution that needs to securely store and process credit card numbers for various internal systems and applications. Instead of encrypting the credit card numbers, which could potentially disrupt downstream processes that rely on the original format, the company opts for tokenization.

Here's how it could work: When a credit card number is created or updated, the unique and identifiable numbers are replaced with randomly generated tokens. These tokens are then used to reference the original sensitive information, securely stored in a separate database or system with strict access controls.

When authorized personnel need to access or use the original credit card numbers for legitimate purposes, they can present the tokens to the token store and retrieve the corresponding sensitive values. Because the tokens can keep the length and format of a card number, the company maintains compatibility with existing systems and processes that rely on that format, such as payment processing or customer account management.

By implementing tokenization in this scenario, the organization can streamline access to data while ensuring that sensitive information remains protected.
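The flow in this scenario can be sketched with a toy in-memory vault. This is an illustration under stated assumptions, not ALTR's product or API: the class name `ToyTokenVault`, the `caller_is_authorized` flag, and the choice to make tokens random digit strings of the same length as the input are all hypothetical. A real vault would be a hardened, access-controlled service with persistent storage.

```python
import secrets


class ToyTokenVault:
    """In-memory stand-in for a token vault (illustration only)."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return a random digit token with the same length as the value."""
        if value in self._value_to_token:
            # Reuse the existing token so one value always maps to one token.
            return self._value_to_token[value]
        while True:
            token = "".join(secrets.choice("0123456789") for _ in value)
            # Retry on the rare collision with an existing token or the value itself.
            if token not in self._token_to_value and token != value:
                break
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, caller_is_authorized: bool) -> str:
        """Look up the original value; the flag stands in for real access control."""
        if not caller_is_authorized:
            raise PermissionError("caller may not detokenize")
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = ToyTokenVault()
    token = vault.tokenize("4111111111111111")
    print(token)  # random 16-digit string, unrelated to the card number
    print(vault.detokenize(token, caller_is_authorized=True))
```

The token is drawn from a random source rather than computed from the card number, so nothing about the original value can be recovered from the token alone; recovery requires a lookup in the vault.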

One scenario where a company might choose format-preserving encryption (FPE) over tokenization is in the context of protecting sensitive data while preserving its format and structure for specific business processes.

Imagine a healthcare organization that needs to securely store and share patient records containing personally identifiable information, such as names, addresses, and medical histories. Instead of tokenizing the entire document, which could slow down access and processing times, the organization decides to encrypt only the specific fields within the documents that contain sensitive information.

Here's how it could work: When a patient record is entered into the system, FPE is applied to encrypt sensitive fields, such as patient name, address, and medical record number, while preserving their original format. The encrypted data maintains the same structure, length, and validation rules as the original fields.

When authorized personnel need to access the patient records for legitimate purposes, they can decrypt them using the appropriate encryption keys. This allows for efficient retrieval and processing of data without compromising security.

By using FPE in this scenario, the company can ensure that sensitive data remains protected while maintaining the integrity and usability of the data within its business operations. This approach balances security and functionality, allowing the company to meet data protection requirements without sacrificing operational efficiency or compatibility with existing systems.

Format-Preserving Encryption (FPE) and Tokenization offer practical strategies for securing sensitive data. By understanding each technique's unique advantages and considerations, organizations can make informed decisions to safeguard their data, protect against potential threats, and foster trust with customers and stakeholders.

In the ever-evolving landscape of data security, the debate between Vault and Vaultless tokenization has gained prominence. Both methods aim to protect sensitive information, but they take distinct approaches, each with different sets of advantages and limitations. In this blog, we will dive into the core differences that organizations consider when choosing an approach and how ALTR makes it easier to leverage the enhanced security of Vault Tokenization while still allowing for the scalability you'd typically find with Vaultless Tokenization. This decision ultimately comes down to performance, scalability, security, compliance, and total cost of ownership.

Tokenization (both Vaulted and Vaultless), at its core, is the process of replacing sensitive data with unique identifiers or tokens. This ensures that even if a token is intercepted, it holds no intrinsic value to the interceptor without the corresponding mapping or key, which is stored in a secure vault or system.

Vaulted (or “Vault”) tokenization relies on a centralized repository, known as a vault, to store the original data. The tokenization process involves generating a unique token for each piece of sensitive information, while securely storing the actual data in the vault. Access to the vault is tightly controlled, ensuring only authorized entities can retrieve or decrypt the original data. For maximum security, the token should have no mathematical relationship to the underlying data, which prevents the brute-force algorithmic attacks that can be possible when relying purely on encryption. Securing data in a vault helps reduce the surface area of systems that need to remain in regulatory compliance (e.g., SOC 2, PCI DSS, HIPAA) by ensuring the sensitive data located in the source system is fully replaced with non-sensitive values, so that the source system itself no longer stores the data those controls are meant to protect.

The primary technical differentiator between Vaulted and Vaultless Tokenization is the centralization of data storage in a secure vault. Centralized storage strengthens security and simplifies management and control, but may raise concerns around scalability and performance.

Vaulted tokenization shines in scenarios where centralized control and compliance are paramount. Industries with stringent regulatory requirements often find comfort in the centralized security model of vaulted tokenization.

Vaultless tokenization, on the other hand, distributes the responsibility of tokenization across various endpoints or systems, all within the core source data repository. In this approach, the generation and management of tokens occurs locally, eliminating the need for a centralized vault to store the original data. Each endpoint independently tokenizes and detokenizes data without relying on a central authority. While Vaultless Tokenization is technically secure, it tokenizes and detokenizes data from within the same source system. In addition, the approach is less standardized across the industry, which can make it harder to satisfy compliance requirements around observability and to prove that data stored locally is sufficiently protected.

The decentralized nature of Vaultless tokenization enhances fault tolerance and reduces the risk of a single point of failure from a compromised vault. However, it introduces the challenge of ensuring consistent tokenization across distributed systems and guaranteeing data security and regulatory compliance.

While each approach has its merits, the ideal data security solution lies in striking a balance that combines the security of Vaulted Tokenization with the performance and scalability of Vaultless Tokenization. A hybrid model aims to leverage the strengths of both methods, offering robust protection without sacrificing efficiency or performance, and without departing from industry norms or compliance requirements.

ALTR’s Vault tokenization solution is a REST API based approach for interacting with our highly secure and performant Vault. As a pure SaaS offering, utilizing ALTR’s tokenization tool requires zero physical installation, and enables users to begin tokenizing or detokenizing their data in minutes. ALTR’s solution leverages the auto-scaling nature of the cloud, enabling on-demand performance that can immediately scale up or down based on usage.

ALTR’s Vaulted Tokenization enhances the security and performance of sensitive data by being a SaaS-delivered tool and having an advanced relationship with Amazon Web Services. Because ALTR builds its vault to scale on cloud resources, many of the traditional constraints of Vaulted Tokenization have been removed. ALTR can perform millions of tokenization and detokenization operations per minute on a per-client basis without the need for a Vaultless-style local implementation.

In conclusion, the differences between Vaulted and Vaultless Tokenization underscore the importance of a nuanced approach to data security. The evolving landscape calls for solutions that marry the robust protection of a vault with the agility and scalability of a cloud-native SaaS model. ALTR’s Vault tokenization solution does this by combining cloud-native scalability and ease of setup and maintenance with a tightly controlled, compliance-optimized vault (PCI DSS Level 1 and SOC 2 Type 2 certifications). Striking this balance ensures that organizations can navigate the complexities of modern data handling, safeguarding sensitive information without compromising performance or scalability.

In today's digital age, data is the lifeblood of businesses and organizations. Safeguarding its integrity and ensuring it stays in the right hands is paramount. The responsibility for this critical task falls squarely on the shoulders of effective data access control systems, which govern who can access, modify, or delete sensitive information. However, like any security system, access controls can weaken over time, leaving your data exposed and vulnerable. So, how can you spot the warning signs of a deteriorating data access control process? In this blog, we'll uncover the telltale indicators that your data access control is on shaky ground.

It's undeniable that a data breach or leak is the most glaring and alarming indicator of your data access control's downfall. When unauthorized parties manage to infiltrate your sensitive information, it's akin to waving a red flag and shouting, "Wake up!" It is an unmistakable sign of serious vulnerabilities within your access control systems. These breaches bring dire consequences, including reputational damage, hefty fines, and the substantial erosion of customer trust. With the global average cost of a data breach at a staggering USD 4.45 million, it's most certainly something you want to avoid.

Do you find yourself with pockets of data hidden in different departments or applications, making it inaccessible to those who genuinely need it? This phenomenon creates data silos that obstruct collaboration and efficiency. Moreover, it complicates access control management, as each data silo may function under its own potentially inconsistent set of rules and protocols.

Does anyone within your organization "own" data, ensuring its proper use and security? Vague ownership fosters a culture where everyone feels entitled to access, making it difficult to track user activity, identify responsible parties in case of misuse, and enforce access control policies.

If access permissions are manually granted and updated, it's a clear sign that your access control system is outdated. Manual processes are time-consuming, error-prone, and hardly scalable. They create bottlenecks that delay legitimate users' access while increasing the risk of inadvertently granting unauthorized access. It's high time to transition to automated access control solutions to keep pace with the evolving demands of data security.
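One common way to move away from hand-maintained grants is to express the rules as data and derive every access decision from them. The sketch below is a hypothetical illustration of that idea, not a description of any particular product: the role names, data tags, `POLICY` table, and `is_allowed` helper are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical policy table: (role, action) -> data tags that role may act on.
POLICY: dict[tuple[str, str], set[str]] = {
    ("analyst", "read"): {"public", "internal"},
    ("billing", "read"): {"public", "internal", "pci"},
    ("billing", "update"): {"pci"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def is_allowed(user: User, action: str, data_tag: str) -> bool:
    """Allow the action if any of the user's roles permits it for this data tag."""
    return any(
        data_tag in POLICY.get((role, action), set())
        for role in user.roles
    )


if __name__ == "__main__":
    alice = User("alice", frozenset({"analyst"}))
    bob = User("bob", frozenset({"billing"}))
    print(is_allowed(alice, "read", "pci"))  # False: analysts have no PCI access
    print(is_allowed(bob, "read", "pci"))    # True: billing may read PCI data
```

With this shape, onboarding a user means assigning a role rather than editing individual permissions, and changing a rule in one place updates every decision that depends on it.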