Format-Preserving Encryption: A Deep Dive into the FF3-1 Encryption Algorithm

In the ever-evolving landscape of data security, protecting sensitive information while maintaining its usability is crucial. ALTR’s Format Preserving Encryption (FPE) is an industry-disrupting solution designed to address this need. FPE ensures that encrypted data retains the same format as the original plaintext, which is vital for maintaining compatibility with existing systems and applications. This post explores ALTR's FPE, the technical details of the FF3-1 encryption algorithm, and the benefits and challenges associated with using padding in FPE.

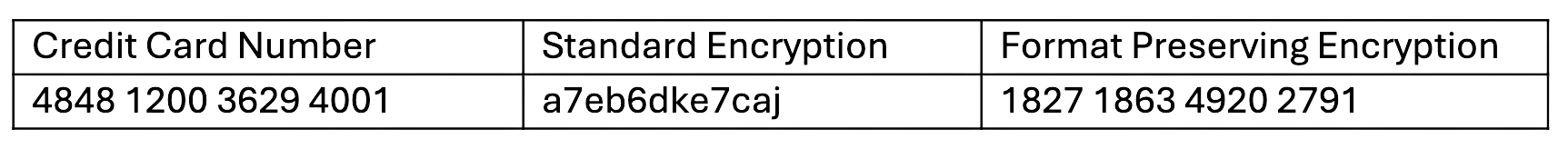

Format Preserving Encryption is a cryptographic technique that encrypts data while preserving its original format. This means that if the plaintext data is a 16-digit credit card number, the ciphertext will also be a 16-digit number. This property is essential for systems where data format consistency is critical, such as databases, legacy applications, and regulatory compliance scenarios.

The FF3-1 encryption algorithm is a format-preserving encryption method specified by the National Institute of Standards and Technology (NIST). It is defined in the draft Revision 1 of NIST Special Publication 800-38G, where it replaces the earlier FF3 mode, and it is built on a Feistel network, a structure widely used in cryptographic applications. Here’s a technical breakdown of how FF3-1 works:

1. Feistel Network: FF3-1 is based on a Feistel network, a symmetric structure used in many block cipher designs. A Feistel network divides the plaintext into two halves and processes them through multiple rounds, each of which applies a keyed round function to one half and uses the result to update the other.

2. Rounds: FF3-1 uses 8 rounds. Each round applies the round function to one half of the data and combines the result with the other half using modular addition (modulo the radix raised to the length of that half) rather than XOR, which is what keeps every intermediate value inside the original alphabet. This process is repeated, alternating between the halves.

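3. Key Scheduling: Unlike many Feistel ciphers, FF3-1 does not derive a separate subkey for each Feistel round. Every round calls AES under the same key (FF3-1 reverses the byte order of the key before use), and the rounds are distinguished by mixing the round number and part of the tweak into the AES input. The only key expansion is the one AES performs internally.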

4. Tweakable Block Cipher: FF3-1 is a tweakable cipher: a tweak (an additional input parameter, 56 bits long in FF3-1, that does not need to be secret) is used along with the key. The same plaintext encrypted under the same key but different tweaks produces different ciphertexts, which makes the scheme more resistant to certain types of cryptographic attacks.

5. Format Preservation: The algorithm ensures that the ciphertext retains the same format as the plaintext. For example, if the input is a numeric string like a phone number, the output will also be a numeric string of the same length, also appearing like a phone number.

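The encryption process itself proceeds in four steps:

1. Initialization: The plaintext is divided into two halves, and the tweak is split into a left part and a right part that are used in alternating rounds. The tweak is often derived from additional data, such as the position of the data within a larger dataset, to ensure uniqueness.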

2. Round Function: In each round, the round function builds a 128-bit block from one part of the tweak (XORed with the round number) and the numeric value of one half of the data, then encrypts that block with AES under the key. The AES output is interpreted as an integer, giving a pseudorandom value.

3. Combining Halves: The output of the round function is added to the numeric value of the other half, modulo the radix raised to that half's length. The halves are then swapped, and the process repeats for the specified number of rounds.

4. Finalization: After the final round, the halves are recombined to form the final ciphertext, which maintains the same format as the original plaintext.
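The steps above can be sketched in a few lines of Python. This is a toy illustration of the Feistel structure only, not FF3-1 itself: it handles decimal digits only, substitutes HMAC-SHA256 from the standard library for the AES-based round function, and does not follow the NIST byte layout, so it must not be used to protect real data. The names `feistel_digits` and `_round_value` are made up for this example.

```python
import hashlib
import hmac

ROUNDS = 8  # same round count as FF3-1; must stay even for this sketch
DIGITS = "0123456789"


def _round_value(key: bytes, tweak: bytes, round_no: int, half: str, modulus: int) -> int:
    """Pseudorandom integer derived from key, tweak, round number and one half.

    Assumption: HMAC-SHA256 stands in for the AES call used by FF3-1.
    """
    message = tweak + bytes([round_no]) + half.encode("ascii")
    digest = hmac.new(key, message, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def feistel_digits(key: bytes, tweak: bytes, digits: str, decrypt: bool = False) -> str:
    """Encrypt or decrypt a decimal string, keeping its length and alphabet."""
    if len(digits) < 2 or any(ch not in DIGITS for ch in digits):
        raise ValueError("expected a string of at least two decimal digits")
    u = (len(digits) + 1) // 2  # length of the left half
    v = len(digits) - u         # length of the right half
    a, b = digits[:u], digits[u:]
    if not decrypt:
        for i in range(ROUNDS):
            m = u if i % 2 == 0 else v
            y = _round_value(key, tweak, i, b, 10 ** m)
            c = (int(a) + y) % 10 ** m   # modular addition, not XOR
            a, b = b, str(c).zfill(m)    # swap the halves
    else:
        for i in reversed(range(ROUNDS)):
            m = u if i % 2 == 0 else v
            y = _round_value(key, tweak, i, a, 10 ** m)
            c = (int(b) - y) % 10 ** m   # undo the modular addition
            a, b = str(c).zfill(m), a    # undo the swap
    return a + b


ciphertext = feistel_digits(b"demo-key", b"row-42", "4111111111111111")
recovered = feistel_digits(b"demo-key", b"row-42", ciphertext, decrypt=True)
```

Because each round only adds a value modulo a power of ten, every intermediate result stays a digit string of the right length, and decryption simply runs the rounds backwards with subtraction.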

Implementing FPE provides numerous benefits to organizations:

1. Compatibility with Existing Systems: Since FPE maintains the original data format, it can be integrated into existing systems without requiring significant changes. This reduces the risk of errors and system disruptions.

2. Improved Performance: FPE algorithms like FF3-1 are designed to be efficient, ensuring minimal impact on system performance. This is crucial for applications where speed and responsiveness are critical.

3. Simplified Data Migration: FPE allows for the secure migration of data between systems while preserving its format, simplifying the process and ensuring compatibility and functionality.

4. Enhanced Data Security: By encrypting sensitive data, FPE protects it from unauthorized access, reducing the risk of data breaches and ensuring compliance with data protection regulations.

5. Creation of production-like data for lower-trust environments: Using a product like ALTR’s FPE, data engineers can use the ciphertext of production data to create useful mock datasets for consumption by developers in lower-trust development and test environments.

Padding is a technique used in encryption to ensure that the plaintext data meets the required minimum length for the encryption algorithm. While padding is beneficial in maintaining data structure, it presents both advantages and challenges in the context of FPE:

Advantages of padding:

1. Consistency in Data Length: Padding ensures that the data conforms to the required minimum length, which is necessary for the encryption algorithm to function correctly.

2. Preservation of Data Format: Padding helps maintain the original data format, which is crucial for systems that rely on specific data structures.

3. Enhanced Security: By adding extra data, padding can make it more difficult for attackers to infer information about the original data from the ciphertext.

Challenges of padding:

1. Increased Complexity: The use of padding adds complexity to the encryption and decryption processes, which can increase the risk of implementation errors.

2. Potential Information Leakage: If not implemented correctly, padding schemes can potentially leak information about the original data, compromising security.

3. Handling of Padding in Decryption: Ensuring that the padding is correctly handled during decryption is crucial to avoid errors and data corruption.
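As a rough illustration of why padding is needed and why it complicates decryption, the sketch below computes a minimum input length from the alphabet size and pads short values up to it. It assumes the domain-size floor from the FF3-1 draft as we understand it (the radix raised to the input length must be at least 1,000,000). The helper names, the choice of pad character, and the idea of tracking the pad count separately are illustrative assumptions, not a description of how ALTR pads data.

```python
MIN_DOMAIN_SIZE = 1_000_000  # assumption: radix ** length must reach this floor


def min_length(radix: int) -> int:
    """Smallest length n such that radix ** n >= MIN_DOMAIN_SIZE."""
    n = 1
    while radix ** n < MIN_DOMAIN_SIZE:
        n += 1
    return n


def pad(value: str, radix: int, pad_char: str) -> tuple:
    """Right-pad value to the minimum length; return (padded text, pad count)."""
    needed = max(0, min_length(radix) - len(value))
    return value + pad_char * needed, needed


def unpad(padded: str, pad_count: int) -> str:
    """Strip pad_count trailing characters that were added by pad()."""
    return padded[: len(padded) - pad_count] if pad_count else padded


padded, count = pad("1234", radix=10, pad_char="0")
original = unpad(padded, count)
```

Note that the pad count has to be stored or derived somewhere outside the ciphertext, and that the encrypted value is now longer than the original input. Both are examples of the extra complexity described above.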

ALTR's Format Preserving Encryption, powered by the technically robust FF3-1 algorithm and married with legendary ALTR policy, offers a comprehensive solution for encrypting sensitive data while maintaining its usability and format. This approach ensures compatibility with existing systems, enhances data security, and supports regulatory compliance. However, the use of padding in FPE, while beneficial in preserving data structure, introduces additional complexity and potential security challenges that must be carefully managed. By leveraging ALTR’s FPE, organizations can effectively protect their sensitive data without sacrificing functionality or performance.

For more information about ALTR’s Format Preserving Encryption and other data security solutions, visit the ALTR documentation.

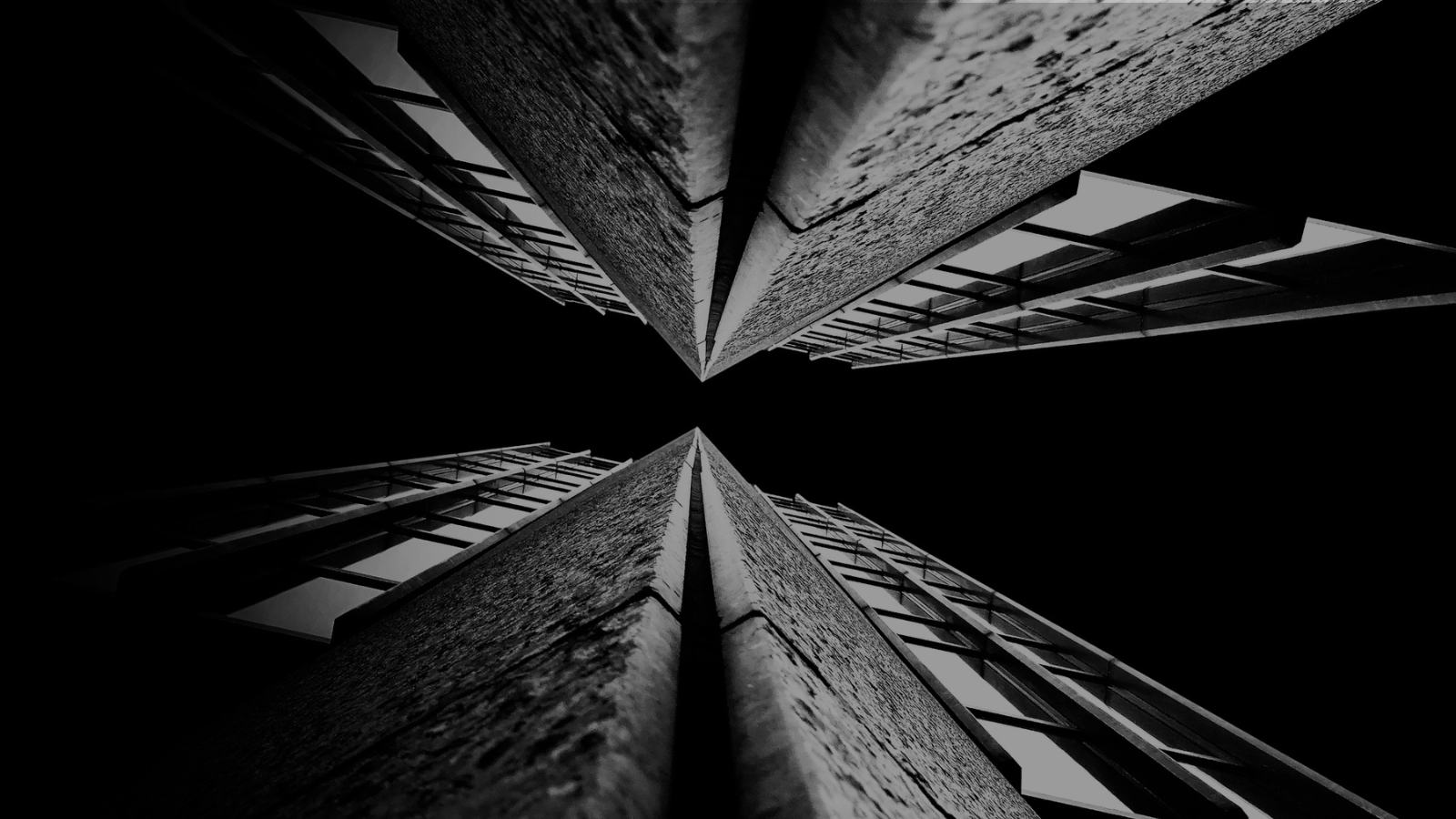

For years (even decades) sensitive information has lived in transactional and analytical databases in the data center. Firewalls, VPNs, Database Activity Monitors, Encryption solutions, Access Control solutions, Privileged Access Management and Data Loss Prevention tools were all purchased and assembled to sit in front of, and around, the databases housing this sensitive information.

Even with all of the above solutions in place, CISOs and security teams were still nervous wrecks. The goal of delivering data to the business was met, but that does not mean the teams were happy with their solutions. But we got by.

The advent of Big Data and now Generative AI is causing businesses to come to terms with the limitations of these on-prem analytical data stores. It’s hard to scale these systems when the compute and storage are tightly coupled. Sharing data securely with trusted parties outside the walls of the data center is clunky at best, downright dangerous in most cases. And forget running your own GenAI models in your data center unless you can outbid Larry, Sam, Satya, and Elon at the Nvidia store. These limits have brought on the era of cloud data platforms. These cloud platforms address the business needs and operational challenges, but they also present whole new security and compliance challenges.

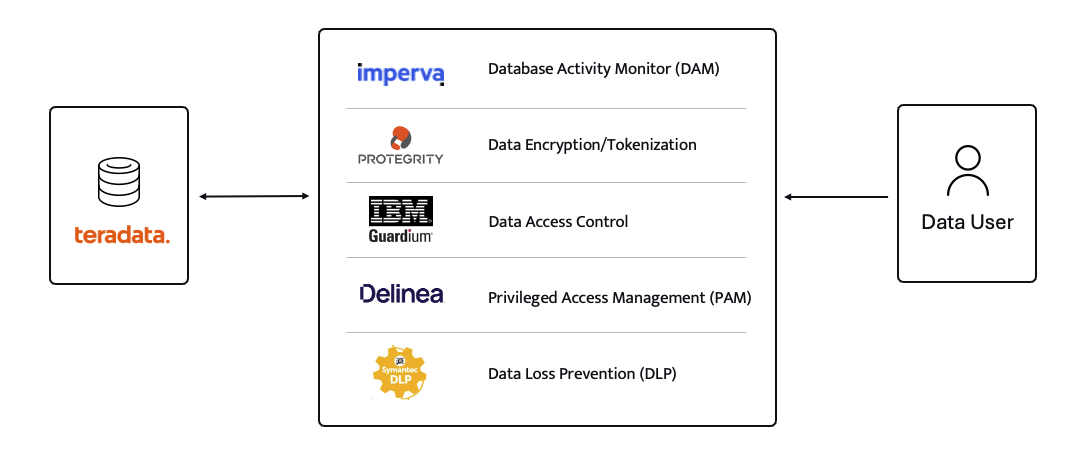

ALTR’s platform has been purpose-built to recreate and enhance these protections for organizations moving from Teradata to Snowflake. Our cutting-edge SaaS architecture is revolutionizing data migrations from Teradata to Snowflake, making it seamless for organizations of all sizes, across industries, to unlock the full potential of their data.

What spurred this blog is that a company reached out to ALTR to help them with data security on Snowflake. Cool! A member of the Data & Analytics team tried our product, and it was love at first sight. The features were exactly what was needed to control access to sensitive data. Our Format-Preserving Encryption sets the standard for securing data at rest, offering unmatched protection with pricing that's accessible for businesses of any size. Win-win, which is the way it should be.

Our team collaborated closely with this person on use cases, identifying time and cost savings, and mapping out a plan to prove the solution’s value to their organization. Typically, we engage with the CISO at this stage, and those conversations are highly successful. However, this was not the case this time. The CISO did not want to meet with our team and practically stalled our progress.

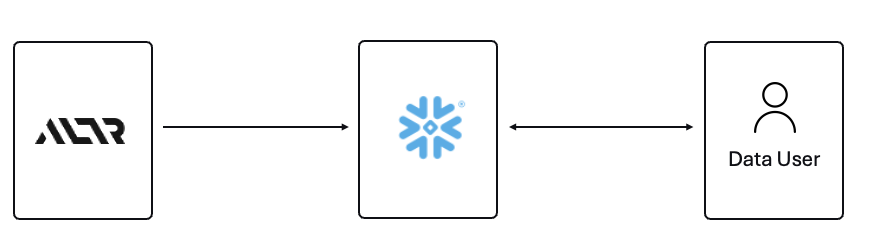

The CISO’s point of view was that ALTR’s security solution could be completely disabled or removed, and would not be helpful in the case of a compromised ACCOUNTADMIN account in Snowflake. I agree with the CISO: all of those things are possible. Here is what I wanted to say to the CISO if they had given me the chance to meet with them!

The ACCOUNTADMIN role has a very simple definition, yet powerful and long-reaching implications of its use:

One of the main points I would have liked to make to the CISO is that, as a user of Snowflake, the responsibility to secure that ACCOUNTADMIN role is squarely in their court. By now I’m sure you have all seen the news and responses to the Snowflake compromised accounts that happened earlier this year. It has been shown that accounts left unsecured by Snowflake customers led to the data theft. There have been dozens of articles and recommendations on how to secure your accounts with Snowflake, and even a mandate of minimum authentication standards going forward for Snowflake accounts. You can read more about securing the ACCOUNTADMIN role in Snowflake here.

I felt the CISO was missing the point of the ALTR solution, and I wanted the chance to explain my perspective.

ALTR is not meant to secure the ACCOUNTADMIN account in Snowflake. That’s not where the real risk lies when using Snowflake (and yes, I know—“tell that to Ticketmaster.” Well, I did. Check out my write-up on how ALTR could have mitigated or even reduced the data theft, even with compromised accounts). The risk to data in Snowflake comes from all the OTHER accounts that are created and given access to data.

The ACCOUNTADMIN role is limited to one or two people in an organization. These are trusted folks who are smart and don’t want to get in trouble (99% of the time). On the other hand, you will have potentially thousands of non-ACCOUNTADMIN users accessing data, sharing data, screensharing dashboards, re-using passwords, etc. This is the purpose of ALTR’s Data Security Platform, to help you get a handle on part of the problem which is so large it can cause companies to abandon the benefits of Snowflake entirely.

There are three major issues outside of the ACCOUNTADMIN role that companies have to address when using Snowflake:

1. You must understand where your sensitive data is inside of Snowflake. Data changes rapidly. You must keep up.

2. You must be able to prove to the business that you have a least-privilege access mechanism. Data is accessed only when there is a valid business purpose.

3. You must be able to protect data at rest and in motion within Snowflake. This means cell-level encryption using a BYOK approach, near-real-time data activity monitoring, and data theft prevention in the form of DLP.

The three issues mentioned above are incredibly difficult for 95% of businesses to solve, largely due to the sheer scale and complexity of these challenges: terabytes of data that grow daily, more users with more applications, and trusted third parties who want to collaborate with your data. All of this leads to an unmanageable set of internal processes that slow down the business and introduce risk.

ALTR’s easy-to-use solution allows Virgin Pulse Data, Reporting, and Analytics teams to automatically apply data masking to thousands of tagged columns across multiple Snowflake databases. We’re able to store PII/PHI data securely and privately with a complete audit trail. Our internal users gain insight from this masked data and change lives for good.

- Andrew Bartley, Director of Data Governance

I believed the CISO at this company was either too focused on the ACCOUNTADMIN problem to understand their other risks, or felt he had control over the other non-admin accounts. In either case I would have liked to learn more!

There was a reason someone from the Data & Analytics team sought out a product like ALTR. Data teams are afraid of screwing up. People are scared to store and use sensitive data in Snowflake. That is what ALTR solves for, not the task of ACCOUNTADMIN security. I wanted to be able to walk the CISO through the risks and how others have solved for them using ALTR.

The tools that Snowflake provides to secure and lock down the ACCOUNTADMIN role are robust and simple to use. Ensure network policies are in place. Ensure MFA is enabled. Ensure you have logging of ACCOUNTADMIN activity to watch all access.

I wish I could have been in the conversation with the CISO to ask a simple question: “If I show you how to control the ACCOUNTADMIN role on your own, would that change your tone on your team's use of ALTR?” I don’t know the answer they would have given, but I know the answer most CISOs would give.

Nothing will ever be 100% secure and I am by no means saying ALTR can protect your Snowflake data 100% by using our platform. Data security is all about reducing risk. Control the things you can, monitor closely and respond to the things you cannot control. That is what ALTR provides day in and day out to our customers. You can control your ACCOUNTADMIN on your own. Let us control and monitor the things you cannot do on your own.

Since 2015 the migration of corporate data to the cloud has rapidly accelerated. At that time, an estimated 30% of corporate data was in the cloud; by 2022 that share had doubled to 60%, in a mere seven years. Here we are in 2024, and this trend has not slowed down.

Over time, as more and more data has moved to the cloud, new challenges have presented themselves to organizations: new vendor onboarding, spend analysis, and new units of measure for billing. This brought on different cloud compute-related cost structures and new skillsets with new job titles. Vendor lock-in, skill gaps, performance and latency, and data governance all became more intricate with the move to the cloud. Both operational and transactional data were in scope to reap the benefits promised by cloud computing: organizational cost savings, data analytics and, of course, AI.

The most critical of these new challenges revolve around Data Security and Privacy. The migration of on-premises data workloads to Cloud Data Warehouses included sensitive, confidential, and personal information. Corporations like Microsoft, Google, Meta, Apple, and Amazon were capturing every movement, purchase, keystroke, conversation and what feels like every thought we ever had. These same cloud service providers made it easier for their enterprise customers to do the same. Along came Big Data and the need for it to be cataloged, analyzed, and used with the promise of making our personal lives better for a cost. The world's population readily sacrificed privacy for convenience.

The moral and ethical conversation would then begin, and world governments responded with regulations such as GDPR, CCPA and now most recently the European Union’s AI Act. The risk and fines have been in the billions. This is a story we already know well. Thus, Data Security and Privacy have become a critical function primarily for the obvious use case, compliance, and regulation. Yet only 11% of organizations have encrypted over 80% of their sensitive data.

With new challenges also came new capabilities and business opportunities. Real-time analytics across distributed data sources (IoT, social media, transactional systems) enabled real-time supply chain visibility and dynamic changes to pricing strategies, and let organizations launch products to market faster than ever. On-premises applications could not handle the volume of data that exists in today’s economy.

Data sharing between partners and customers became a strategic capability. Without having to copy or move data, organizations were enabled to build data monetization strategies leading to new business models. Now building and training Machine Learning models on demand is faster and easier than ever before.

To reap the benefits of the new data world, while remaining compliant, effective organizations have been prioritizing Data Security as a business enabler. Format Preserving Encryption (FPE) has become an accepted encryption option to enforce security and privacy policies. It is increasingly popular as it can address many of the challenges of the cloud while enabling new business capabilities. Let’s look at a few examples now:

Real Time Analytics - Because FPE is an encryption method that returns data in the original format, the data keeps the same length and structure and so remains useful. More data engineers, scientists and analysts can work with the data without being exposed to sensitive information.

Data Sharing – FPE enables sharing of sensitive information, both personal and confidential, supporting secure information exchange, collaboration, and innovation alike.

Proactive Data Security – FPE allows for the anonymization of sensitive information, proactively protecting against data breaches and bad actors. Good luck holding to ransom a company that takes a more proactive approach using FPE and other Data Security Platform features in combination.

Empowered Data Engineering – With FPE, data engineers can still build, test and deploy data transformations, because user-defined functions and logic in stored procedures or compiled code will run without failure. Data validations and data quality checks for formats, lengths and more can be written and tested without exposing sensitive information. Federated, aggregation and range queries will still execute without decryption, although FPE does not preserve ordering, so results that depend on the order of the original values still require decrypted data. Dynamic ABAC and RBAC controls can be combined to decrypt at runtime for users with proper rights to see the original values of data.

Cost Management – While FPE does not come close to solving Cost Management in its entirety, it can definitely contribute. We are seeing a need for FPE as an option instead of replicating data in the cloud to development, test, and production support environments. With data transfer, storage and compute costs, moving data across regions and environments can be really expensive. With FPE, data can be encrypted and decrypted with compute that is less expensive than organizations' current antiquated data replication jobs. This makes FPE a viable cost-savings option for producing production-ready data in non-production environments. Look for a future blog on this topic and all the benefits that come along.

FPE is not a silver bullet for protecting sensitive information or enabling these business use cases. There are well-documented challenges in the FF1 and FF3-1 algorithms (another blog on that to come). A blend of features including data discovery, dynamic data masking, tokenization, role- and attribute-based access controls and data activity monitoring will be needed to have a proactive approach towards security within your modern data stack. This is why Gartner considers a Data Security Platform, like ALTR, to be one of the most advanced and proactive solutions for Data security leaders in your industry.

Securing sensitive information is now more critical than ever for all types of organizations, as there have been many high-profile data breaches recently. There are several ways to secure the data, including restricting access, masking, encryption and tokenization. These can pose some challenges when using the data downstream. This is where Format Preserving Encryption (FPE) helps.

This blog will cover what Format Preserving Encryption is, how it works and where it is useful.

Whereas traditional encryption methods generate ciphertext that doesn't look like the original data, Format Preserving Encryption (FPE) encrypts data whilst maintaining the original data format. Changing the format can be an issue for systems or humans that expect data in a specific format. Let's look at an example of encrypting a 16-digit credit card number:

As you can see, with a standard encryption type the result is a completely different output. This may make it incompatible with systems which require or expect a 16-digit numerical format. Using FPE, the encrypted data still looks like a valid 16-digit number. This is extremely useful where data must stay in a specific format for compatibility, compliance, or usability reasons.
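To make the comparison concrete, here is a small Python sketch of the kind of format check a downstream system might apply to a card-number column. Neither output below is real ciphertext: `conventional_output` is a fixed 16-byte value shown as Base64 to stand in for the output of a conventional cipher, and `fpe_style_output` is a made-up digit string standing in for an FPE result. The card number is a commonly used test value.

```python
import base64
import re

CARD_FORMAT = re.compile(r"\d{16}")


def fits_card_format(value: str) -> bool:
    """True if the value is exactly 16 decimal digits."""
    return CARD_FORMAT.fullmatch(value) is not None


plaintext = "4111111111111111"

# Stand-in for a conventional cipher: arbitrary bytes, usually stored as Base64 or hex.
conventional_output = base64.b64encode(bytes(range(16))).decode("ascii")

# Stand-in for an FPE result: same length, same alphabet (made-up value).
fpe_style_output = "8301746529104387"

checks = {
    "plaintext": fits_card_format(plaintext),
    "conventional": fits_card_format(conventional_output),
    "fpe_style": fits_card_format(fpe_style_output),
}
```

Only the conventional-style value fails the check, which is the incompatibility described above.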

Format Preserving Encryption in ALTR works by first analyzing the column to understand the input format and length. Next the NIST algorithm is applied to encrypt the data with the given key and tweak. ALTR applies regular key rotation to maximize security. We also support customers bringing their own keys (BYOK). Data can then selectively be decrypted using ALTR’s access policies.
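The first step, understanding a column's format and length, could look something like the sketch below. This is purely an illustration of the idea and not ALTR's implementation: the function name `profile_column`, the three candidate alphabets, and the returned fields are all assumptions made for this example.

```python
import string

# Candidate alphabets, tried from most to least restrictive (illustrative choice).
ALPHABETS = [
    ("digits", string.digits),
    ("lowercase", string.ascii_lowercase),
    ("alphanumeric", string.digits + string.ascii_letters),
]


def profile_column(values):
    """Return the smallest candidate alphabet and the length range seen in a column."""
    if not values:
        raise ValueError("cannot profile an empty column")
    seen = set("".join(values))
    lengths = [len(v) for v in values]
    for name, alphabet in ALPHABETS:
        if seen <= set(alphabet):
            return {
                "alphabet": name,
                "radix": len(alphabet),
                "min_length": min(lengths),
                "max_length": max(lengths),
            }
    raise ValueError("values contain characters outside the supported alphabets")


card_profile = profile_column(["4111111111111111", "5500005555555559"])
```

The radix and length range found this way are the inputs a format-preserving cipher needs in order to produce output in the same alphabet and of the same length.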

FPE offers several benefits for organizations that deal with structured data:

1. Adds an extra layer of protection: Even if a system or database is breached, the encryption makes sensitive data harder to access.

2. Original Data Format Maintained: FPE preserves the original data structure. This is critical when the data format cannot be changed due to system limitations or compliance regulations.

3. Improves Usability: Encrypted data in an expected format is easier to use, display and transform.

4. Simplifies Compliance: Many regulations like PCI-DSS, HIPAA, and GDPR mandate safeguards, such as encryption, for sensitive data. FPE allows you to apply encryption without disrupting data flows or reporting, all while still meeting regulatory requirements.

FPE is widely adopted in industries that regularly handle sensitive data. Here are a few common use cases:

ALTR offers various masking, tokenization and encryption options to keep all your Snowflake data secure. Our customers are seeing the benefit of Format Preserving Encryption to enhance their data protection efforts while maintaining operational efficiency and compliance. For more information, schedule a product tour or visit the Snowflake Marketplace.

As part of our Expert Panel Series on LinkedIn, we asked experts in the modern data ecosystem what they think is one bad habit data governance teams and data security teams should break? Here’s what we heard…

Believing there is a wall between the two teams. More and more governance and security are becoming the Business Prevention Teams(trademark) because they refuse or cannot work together. The winners going forward will have these two teams working hand-in-hand with data pipeline engineers to place active security and meaningful meta data collection to use directly in the pipeline. This means classify data as soon as you pull it from source, have automated rules to encrypt or tokenize based on classification, leverage tags and metadata to land data in the cloud data warehouse with all the necessary information to plug into the RBAC model etc. The Spiderman finger pointing memes have to end internally...

I think security and governance have to be engrained in data teams from day 1 as non-negotiable. Otherwise, there will always be a back and forth argument over priorities.

Organizing efforts in large groups - especially with governance councils and people/process improvement. Start these things with a small group of people, make everyone accountable for something important, and expand it over time. There's nothing more ineffective in a steering committee than one that's so big that nobody is accountable for making the changes needed to push the organization forward.

Stop taking an ivory tower approach. Similar to enterprise architecture, these practice areas affect a firm's DNA and ability to change. These things should be treated as a team sport. I really appreciate what Fred Bliss shared - there should be a small core team serving as the central pole for the big tent of data governance and security. Starting with everything and everyone is a recipe for ineffectiveness or outright failure, but the intersections and dependencies with other enterprise-shaping areas must be covered to mitigate silos and finger-pointing, to James Beecham's point.

Perhaps, stop treating data as a commodity and start treating it as a service.

Instead of starting with “no,” data governance teams should work from an enablement perspective – taking time to understand the data use and put the proper safeguards in place for governance, security, and access.

Manage the rule… not the exceptions.

Be on the lookout for the next installment of our Expert Panel Series on LinkedIn this month!

The ALTR Team is just a few days out from taking off to Las Vegas for Snowflake Summit 2023. Members of our team – from Product Designers, to Engineers, to Business Development Execs – are anticipating an exciting event ahead. In preparation for Summit 2023, we asked a few members of our team what they are most looking forward to.

I am looking forward to seeing our whole “data” ecosystem around Snowflake in one place at Snowflake Summit. It will be a great opportunity to connect with individuals from other companies in the ecosystem. At the same time, I look forward to meeting Snowflake customers and prospects to discover their needs.

- Ami Ikanovic, Application Engineer

As a software engineer dealing a lot with our Snowflake integrations, I'm looking forward to several of the Snowflake Summit speaking sessions that feature Snowflake engineers and team members. Specifically, the ones around SnowSQL improvements over the past year, what's new in data governance and privacy, open-source drivers and the Snowflake API ecosystem.

- Ryan DeBerardino, Senior Software Engineer

I am eagerly anticipating attending this year's Snowflake Summit for several reasons. Firstly, it offers a fantastic opportunity for collaboration with my colleagues, enabling us to exchange ideas and insights that will enhance our work. Secondly, I am excited about the prospect of meeting and engaging with peer executives from Alation, Analytics8, Collibra, Matillion, Passerelle, and Snowflake as their expertise and experiences can provide valuable perspectives for our own business strategies. Additionally, I am eager to learn about the latest technologies showcased at the Summit, as incorporating these advancements into our partner strategy will undoubtedly accelerate our growth and success.

- Stephen Campbell, VP Partnerships and Alliances

I am most excited to see what new features and offerings each of the different providers have produced in the last year. We work in such a dynamic and fast-moving industry, and conferences like Snowflake Summit are an amazing, concentrated way to showcase the speed of innovation.

- Ted Sandler, Technical Project Manager

We’re anticipating Snowflake Summit 2023 to be a huge success. With the waves that ALTR has made in the data ecosystem this year, I’m excited to get to connect with our customers and partners in the greater data community. I get to share a speaking session with Laura Malins, VP of Product at Matillion on Thursday, June 29 at 11am about the convergence of Analytics, Governance and Security in West Theatre B. I am looking forward to collaborating with Laura in this session and connecting with attendees throughout the event.

- James Beecham, Founder, CEO

I’m looking forward to seeing, in person, the countless Snowflake customers ALTR works with day in and day out. Having the opportunity for face-to-face discussions about new challenges they are tackling and how ALTR may be able to help is what makes Snowflake Summit the best event of the year for us.

- Paul Franz, VP Business Development

I couldn’t be more excited about ALTR’s 2nd year attending Snowflake Summit and our 1st year sponsoring after-hours events. We’ll be going from dawn till dusk on Tuesday June 27 with our sponsorship of Passerelle’s Data Oasis and the big Matillion Fiesta in the Clouds. It’s such a great opportunity to meet up with some of our favorite partners and customers as we dance the night away. I hope we see you there!

- Kim Cook, VP Marketing

With data governance being such a hurdle for many companies, there are many speaking sessions covering this topic at Snowflake Summit. These governance sessions themselves, the questions asked in them, and the follow-up conversations that we participate in are the most interesting. We have great current and future customers that help drive what we build, but listening to a broad range of voices and seeing what everyone else is doing is what makes me very excited for Summit 2023.

- Kevin Rose, Director of Engineering

This will be my first time attending Snowflake Summit and I’m so excited to meet and mingle with folks in person! As a product designer, it’ll be so valuable to speak with some of our users firsthand, get feedback on how ALTR’s platform has made data governance more accessible, and catch up on all the exciting developments in the Snowflake community.

- Geneva Boyett, Product Designer

We’re looking forward to all that Snowflake Summit 2023 will teach us, and we hope to connect with you there. Below are all of the places you’ll be able to find us in Vegas next week! See you soon!

Booth #2242

James Beecham + Laura Malins Speaking Session: June 29 @ 11am – West Theatre B

Data Oasis with Passerelle: June 27 @ 6pm – The Loft NV above Cabo Wabo

Fiesta in the Clouds with Matillion: June 27 @ 8pm – Chayo Mexican Kitchen and Tequila Bar

ALTR is constantly looking to solve problems for our customers. A recent challenge some of our customers were running into is governing and securing a Snowflake data share. ALTR’s creative solution enables this through governing views on Snowflake data shares. Here’s how:

In Snowflake, companies may have a primary production database or even a general data source that multiple groups need. This data often sits in a Snowflake account that may be accessible to only a handful of people or none at all. Snowflake admins can “share” read-only portions of that database to different groups on different Snowflake accounts internally across the company. This enables data consumers to utilize the data without risking changes or corruption to the source data or inconsistencies across the data in different accounts. If Snowflake admins are leveraging the native Snowflake governance features to apply governing policies such as data masking or column-level access controls with SQL on the primary database, those controls will be maintained in the shared database.

However, admins offering a Snowflake data share run into the same issues every Snowflake customer faces: managing those SQL policies manually at scale. It’s time-consuming, error-prone and risky. Generally, companies going down the primary/shared database path are large enough to have hundreds of databases and thousands of users. ALTR is the best solution to implement, maintain and enforce these policies at scale in Snowflake. But because these are “shares” (i.e. read-only), Snowflake does not allow data-level protections to be applied. So, we wanted to provide our customers with the ability to extend ALTR’s enforcement across Snowflake data shares in different accounts.

Let’s start with: what is a “view” in Snowflake? You can think of it as a faux table that is defined by a query against other tables. In other words, it’s a query saved, named, and displayed as a table. For example, you could write “Select * from customer data where Country Code = US” and run that each time you need to pull a list of customers from the US. Or you could create and save a view in Snowflake based on that query and access it each time you need the same info. Creating a view is especially useful for complex queries pulling various fields from multiple databases. It allows you to just run a query against the saved view rather than writing out all the queries that go into the master query. Finally, it has the advantage of limiting the data available to table-viewers. Some companies even standardize on only offering data via views.
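To make the idea of a saved query concrete, here is a minimal sketch. It uses Python's built-in SQLite module as a stand-in for Snowflake (an assumption made only so the example runs anywhere), and the table, column, and view names are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for a Snowflake database (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer_data (name TEXT, email TEXT, country_code TEXT)")
conn.executemany(
    "INSERT INTO customer_data VALUES (?, ?, ?)",
    [
        ("Ana", "ana@example.com", "US"),
        ("Ben", "ben@example.com", "CA"),
        ("Cy", "cy@example.com", "US"),
    ],
)

# Save the query once as a named view instead of retyping it each time.
conn.execute(
    "CREATE VIEW us_customers AS "
    "SELECT name, email FROM customer_data WHERE country_code = 'US'"
)


def us_customer_names(connection):
    """Query the saved view like a table; the filter lives in the view definition."""
    rows = connection.execute("SELECT name FROM us_customers ORDER BY name").fetchall()
    return [row[0] for row in rows]


print(us_customer_names(conn))
```

Because the view selects only `name` and `email`, anyone reading it never sees the `country_code` column, which illustrates how a view limits the data exposed to its readers.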

What this means is that Snowflake admins or even the data-consuming or data-owning line of business users (depending on your company’s approach to data management) can create a second database that can be modified in their own account and create Snowflake views on that database that reference the unmodifiable data share. The view enables limits on what data is pulled by the saved query, but ALTR can then also apply governance and data security policy on those views so that only approved data is accessible in specific formats according to the existing rules. You can set up tag-based policies, column-based access controls, dynamic data masking, and daily rate limits or thresholds as well as de-tokenize data. In other words, by governing the view, you can govern Snowflake data shares the way you would the primary database.
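As a rough illustration of governing what a view returns, the sketch below applies per-role masking rules to a row. It is not ALTR's implementation; the role names, column names, and masking rules are all hypothetical, and unknown roles are fully masked by default.

```python
def clear(value):
    """Return the value unchanged (role is approved for clear access)."""
    return value


def show_last_four(value):
    """Mask everything except the last four characters."""
    return "*" * (len(value) - 4) + value[-4:]


def full_mask(value):
    """Replace every character with an asterisk."""
    return "*" * len(value)


# Hypothetical policy: for each sensitive column, the rule each role receives.
COLUMN_POLICY = {
    "ssn": {"COMPLIANCE": clear, "SUPPORT": show_last_four},
    "email": {"COMPLIANCE": clear, "MARKETING": clear},
}


def govern_row(role, row):
    """Apply the policy to one row returned by the view."""
    governed = {}
    for column, value in row.items():
        if column not in COLUMN_POLICY:
            governed[column] = value  # column is not classified as sensitive
        else:
            # Roles without an explicit rule get the fully masked value.
            rule = COLUMN_POLICY[column].get(role, full_mask)
            governed[column] = rule(value)
    return governed


row = {"name": "Ana", "ssn": "123-45-6789", "email": "ana@example.com"}
print(govern_row("SUPPORT", row))
print(govern_row("COMPLIANCE", row))
```

Defaulting to the full mask means a newly created role sees nothing sensitive until someone deliberately grants it a rule.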

Common Snowflake data share use cases include a data source that many groups across the company need to access with different permissions: employee database, sales records, customer PII. Or sometimes, companies have a development database, a testing database, and a production database in separate accounts where the data needs to be up-to-date and consistent across them but modifiable only in one. This also makes it possible for companies that have standardized on views for all data delivery to govern those views across their Snowflake usage.

With ALTR, Snowflake admins or various account owners can leverage sensitive data shares in Snowflake and secure them from their single ALTR account. They can set up row- and column-level controls and then apply the applicable policies to the appropriate views to ensure end data share users only see the data they should, automatically.

Just like every other industry today, credit unions are working to figure out how they can best utilize member data to optimize their experiences. But credit unions do face some unique challenges around privacy regulations, member service expectations and an often traditional hardware and software data infrastructure. We interviewed Adam Roderick, CEO @ Datateer, to learn how credit unions can make data more useful and valuable to their organizations.

Visibility and speed.

Credit unions have many different types of products and stakeholders. This necessarily means they have many processes in place, with applications and databases to support them. Each of these systems contains a partial view of the organization. A siloed slice of the whole, a glimpse.

But none of them provides a complete picture, an ability to answer questions using data from multiple sources.

Efforts like Member 360 bring data together from multiple places into a single, centralized location. This creates visibility across the entire organization! Questions that previously could not be answered, or took days (or weeks!), can now be answered real-time, on demand.

It is a magic moment when something like that comes to life. When you can see trends and compare metrics. When you can explore and get a clear picture of the credit union’s members and operations.

So much of the sluggishness in any organization is due to lack of information, or slow information. And most of that is because data is scattered across so many places.

Focus and traction.

Regarding focus, the biggest challenges have nothing to do with technology or data. There is a tendency to want to boil the ocean–to make a large, encompassing effort. The organization buys into the big vision, and then tries to execute a huge, complicated project. You can imagine the results. I don’t think this is unique to credit unions.

On the other hand, the sheer size of potential impact and number of potential applications of data analytics can create paralysis.

The need to focus is critical to getting early wins, building momentum, and growing in maturity and capability. Think big, but take small steps, learn, and iterate.

At Datateer, we follow a framework we call Simpler Analytics. A core tenet is to treat data sources, metrics, and data products as assets–trackable things with lifecycles and measurements of their own. And it ensures each data asset is aligned with a particular audience and purpose it is intended to serve.

Regarding traction, the challenge is how to get moving and stay organized. With so many moving parts, any data architecture is at risk of becoming bloated, cumbersome, and difficult to maintain.

How many potential questions could data answer in a single credit union? How many KPIs and metrics might be part of a mature system? How many reports, dashboards, embedded analytics, or other data products?

At Datateer, we address this complexity in two ways.

To get going, we treat each new effort with a crawl-walk-run approach. Anyone surveying the modern data marketplace will quickly become overwhelmed with all the tools. But the basic modern data stack is proven and not complicated. I described an approach for this, including product recommendations, in a recent article.

As things mature, the number of reports, metrics, etc. can get out of control. This can happen relatively early. We rely again on the data asset inventory I mentioned earlier. This inventory keeps things manageable.

It can get overwhelming as reports and dashboards proliferate, more tooling gets implemented, more processes and procedures are put in place. All of these artifacts and procedures can reference the data asset inventory to stay aligned with what matters and provide a point of reference.

Security and governance boil down to ensuring data is available to only the right people, for the right reasons, at the right time.

The biggest challenge here is the balance between privacy and making good use of data. While governance and policy are essential, they slow things down. It’s necessary to balance compliance and risk mitigation against moving forward and making an impact.

Good security practices can only go so far. Up to 88% of data breaches are caused by human error. With the personal and financial data credit unions must protect, a culture and training around data protection are critical.

A solid data governance plan provides a backstop against human error.

Your credit union will have unique requirements, but not at the foundational level. Embrace the basic modern data stack and get moving. Don’t get hung up on defining everything up front.

Stakeholders no longer have the patience to wait months for a big project to show results. And, you don’t have to go it alone. Datateer pioneered the concept of managed analytics and fractional analytics teams. Our model allows companies to get going quickly and confidently, creating business value immediately.

Embrace the cloud. It’s where all the product and tool innovation will continue to happen. Many people get hung up on two risks: potential cost overruns and security concerns.

What I see for mid-sized credit unions is actually the opposite.

I conceptualize modern data architecture as a set of components. Each has a responsibility and a set of tools and processes.

You may come across the phrase “modern data stack” which treats these components as layers that stack or build on each other.

Here is how I define the core components:

I hope that the credit union cloud adoption trend will continue. The potential benefits are huge for speed and visibility into members and product performance.

Credit unions that focus on Member 360 efforts will have such an advantage over those that don’t invest there. Member 360 is just a buzzword, but it encompasses efforts to truly understand each member by bringing together data from multiple areas of the organization.

Credit unions that do invest here will try to balance the insights data analytics can provide with the personalized service and relationships they already have with members.

Data replication will become easier. Some large vendors are already sharing data into your warehouse automatically–no replication needed.

Last, we will see generative AI become a natural language interface on top of data, allowing easier and faster access to information. This will be exciting because it opens up data to more people. But it will only be useful to credit unions who have a solid data foundation already in place.

--

Adam Roderick is CEO of Datateer, where he helps companies make their data useful and valuable. He is the creator of the Simpler Analytics framework and the founder of the Open Metrics Project. Adam lives in Colorado with his wife and five amazing daughters, raising them to live life to the fullest in one of the most beautiful places on earth.

Protecting sensitive data is becoming a critical aspect of any organization’s data processes. Sensitive information, such as financial data, personal information, and confidential business information, must be kept secure to prevent unauthorized access, theft or misuse.

Of course, by implementing robust security measures and technologies, such as data loss prevention tools, network protection, and strong access controls, companies can significantly reduce the risk of a breach and protect sensitive data.

Tokenization can come on top of ‘traditional’ security measures to protect sensitive data, by physically replacing the original data at the database level using a unique identifier or token. This token can be used to revert the process to see the original data on the fly.

Sounds like masking data? Yes and no… With masking, the stored data remains in the clear and is only hidden when displayed, whereas tokenization physically alters the underlying data… So, it goes one step further than simply masking data.

Detokenization is the process of reversing tokenization by taking the token and returning the original data. This process is typically only done in secure systems where the data is needed for legitimate purposes, such as for a financial transaction.
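The round trip described above can be sketched with a small in-memory vault. This is an illustration only, not ALTR's or Matillion's implementation: the `TokenVault` class and the `tok_` prefix are hypothetical, and a real vault would be a secured, persistent service.

```python
import secrets


class TokenVault:
    """Toy vault that swaps values for random tokens and back (illustrative only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        """Return the token for a value, creating a new random one if needed."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it is not derived from the value in any way.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]


vault = TokenVault()
email_token = vault.tokenize("ana@example.com")
print(email_token)                    # random, different on every run
print(vault.detokenize(email_token))  # the original email
```

Reusing the same token for a repeated value keeps joins and group-bys working on tokenized columns, at the cost of revealing which rows share a value.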

Codex Consulting prioritizes protection of sensitive data and is dedicated to implementing tokenization and detokenization techniques in a straightforward manner, without the need for complex protocols.

In this blog, ALTR is the go-to solution for data security, data governance and monitoring. Matillion is the data integration and productivity tool for streamlining data pipelines and delivering promised protection to organizations. Snowflake is the Data Cloud platform on which we want to add another layer of security and protection.

Therefore, our goal is to convey our expertise on seamlessly incorporating tokenization and detokenization to secure sensitive data within your Snowflake environment.

Let’s take the example where customer emails require protection and only specific roles have access to the clear data.

The purpose of this function is to detokenize sensitive data that has been previously tokenized using the ALTR_PROTECT_TOKENIZE function.

Let’s create a script (SQL component in Matillion) to call the Snowflake Function we just created.

We run the script below in the Snowflake environment using the SQL Script component, and choose the email data we want to protect.

But we also want to make sure that only authorized groups can see the data in clear.

As per our observations, the email data has been physically modified in Snowflake so that only specific groups can access the unencrypted data while the data remains tokenized for others. Is it possible to reverse this process and restore the data to its original state? Yes, definitely!

The script in the component SQL:

Ultimately, tokenization and detokenization are effective and effortless using Matillion and ALTR.

The automation offered by these tools is remarkable and saves a lot of time for data engineers, allowing them to access and utilize cloud data in a matter of minutes.

Data governance doesn’t have a magic bullet or even a well-defined goal or end date. It’s a never-ending, ongoing process of trial, error and optimization as your business, your data and your data users change. If you’re like most practitioners responsible for governing and securing data, you’re always looking for better ways to overcome data governance challenges or ensure your systems are compliant.

"A company's data governance posture really starts with inspecting the organization's culture and identifying how data is important to the firm's short- and long-term objectives. Companies need to be honest in assessing their data literacy, talent, and data-related needs, pain points, and opportunities. These inputs are vital to establishing or updating a data governance framework that aligns to its desired posture and outcomes.

Then, it's about reinforcing the overall message of the operational and strategic value of data, addressing talent needs, and providing ongoing examples of how data governance is enabling both quick wins and more time-intensive efforts for data-led value creation and realization.

Ongoing monitoring and auditing is a must, and I'm excited to continue partnering with ALTR to help us transform static data governance policies into active, observable, and kinetic aspects of our business."

“Ethan Pack’s comment about how ALTR helps 'transform static data governance policies into active, observable, and kinetic aspects of our business' is right on.

Additionally, identify all data sources, classify data based on sensitivity and importance, and define a glossary of terms and ownership. To promote sustainability & efficiency, organizations should look at automating aspects of data governance wherever possible.”

“Ensure the entire business is on board. At the Gartner Data & Analytics conference earlier this year, I heard someone from a very large company say 'Data governance has become a third rail because not everyone at the table cares' and then continue on to explain why she thought other stakeholders didn’t care. It all came back to leadership. Ensure your leadership is empowering and communicating the needs of the business to everyone at the same time, in the same room.”

"Automate data controls as much as possible!"

In today’s environment, you’re aware that data security and governance are critical to prevent data breaches that can put your company in jeopardy of being fined, sued, and possibly shut down. This kind of data security is especially important in cloud data platforms like Snowflake where lots of sensitive data can be consolidated into a single data pool. A surefire way to outsmart bad actors who attempt to compromise your data is through a “switcheroo” tactic otherwise known as Snowflake Data Tokenization. If you’re unfamiliar with it, think of data tokenization (metaphorically) as the valet service offered at an upscale restaurant or formal event. The attendant takes your car keys and gives you a valet ticket in exchange; that exchange is how data tokenization works. If someone were to steal your valet ticket, it would be useless to them because it isn’t the actual key to your car. The ticket only serves as a substitute for your keys and a ‘marker’ to help the valet attendant (who has your keys) identify which car to return to you.

This blog provides a high-level explanation of what Snowflake tokenization is, the benefits it offers, how Snowflake tokenization can be done manually, and the steps to follow if you use ALTR to automate the process. There are other ways to secure data including Snowflake data encryption, but Snowflake tokenization with ALTR provides many benefits encryption cannot.

Data tokenization is a process where an element of sensitive data (for example, a Social Security number) is replaced by a value called a token. This technology adds an extra means of securing your sensitive data. Here’s the simplest way to describe Snowflake tokenization: your data is replaced with a non-sensitive ‘substitute’ that has no value of its own and serves as a marker to map back to your sensitive data when someone queries it. The ‘substitute’ takes the form of a random character string, the ‘token’. For example, your customer’s bank account number would be replaced with a token, making it impossible for someone among your staff to make purchases with their information. As a result, this added safeguard will help your company minimize data breaches and remain compliant with data governance laws.

Sometimes you might hear data tokenization and data encryption used interchangeably; however, while both technologies help to secure data, they are two different approaches to consider as part of your data security strategy. Tokenization replaces your sensitive data with a ‘token’ that cannot be deconstructed, whereas encryption uses an algorithm and key to convert your data into a format that is impossible to read and understand without that key.

A benefit of data tokenization is that it can be more secure than encryption because a token represents a value without being a function or derivative of that value. Another benefit is that it is simpler to manage because there are no encryption keys to oversee. However, when deciding if you should use tokenization or encryption, consider your specific business needs. Due to the benefits that tokenization offers in today’s environment, businesses in different industries are using it for a wide array of reasons. A few examples are commerce transactions to accept credit and debit card payments, the sale and tracking of certain assets such as digital art that’s recorded on a blockchain platform, and the protection of personal health information.

If you’re wondering how to tokenize your data manually inside of Snowflake, then the answer is, “You can’t.”

Snowflake does not have native, built-in tokenization capabilities, but it can support custom tokenization through its external function and column-level security features, as long as you have the resources available to write the code needed to implement tokenization, storage, and detokenization.

Let's take a look at what that would entail:

This service will need to be implemented in Amazon Web Services, Microsoft Azure, or Google Cloud Platform depending on which of those cloud providers you chose for your Snowflake instance.

There is a significant amount of effort required in this step that will require not just programming expertise but also expertise in how to use the storage, compute and networking capabilities of your cloud provider.

Also, Snowflake expects data passed to and received from external functions to be provided in a specific format. Thus, you will need to invest time in understanding this format and how to architect a solution that optimizes the exchange of a large amount of data.

This layer is also where you implement authentication to ensure that only valid requests from your Snowflake instance are processed.

One is a user-defined function which will be called from within your SQL statements to tokenize / detokenize data.

And the other is an Integration object that holds the credentials allowing your Snowflake instance to connect and make a call to the external function's implementation in your cloud provider's environment. These two objects can be created using SQL.

As you can see, that's a lot of extra time and effort. Let's see how you can avoid that by utilizing ALTR's tokenization solution.
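To give a feel for the data format mentioned above, here is a minimal sketch of the remote service's request handling. It assumes the batch shape described in Snowflake's external function documentation: a JSON body with a `data` array in which each row starts with a row number followed by the argument values, and a response of the same shape carrying one result per row. The function names and the placeholder transform are hypothetical, and authentication is left out.

```python
import json


def placeholder_tokenize(value):
    """Stand-in transform for illustration only; NOT real tokenization."""
    return "tok_" + str(len(value))


def handle_batch(request_body, transform):
    """Process one batch of rows and return the JSON response body."""
    payload = json.loads(request_body)
    results = []
    for row in payload["data"]:
        # Each row is [row_number, argument]; echo the row number back so
        # results can be matched to the rows that produced them.
        row_number, value = row[0], row[1]
        results.append([row_number, transform(value)])
    return json.dumps({"data": results})


request = json.dumps({"data": [[0, "123-45-6789"], [1, "ana@example.com"]]})
print(handle_batch(request, placeholder_tokenize))
```

Handling the whole batch in one call, rather than one HTTP request per value, is what keeps the exchange efficient when large amounts of data are involved.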

NOTE: A tokenization API user is required to access our Vaulted Tokenization. Enterprise Plus customers can create tokenization API users on the API tab of the Applications page.

Let's compare this with using ALTR. If you use ALTR and Snowflake together, tokenization is much easier because ALTR has done all the implementation work for you.

To use tokenization in ALTR you only need to create the Snowflake Integration object that points to our service and define an External Function in your database. We provide a SQL script that does this work for you with just a single SQL command.

You will need to generate an API key and secret from the ALTR portal.

This key and secret value are inputs to the SQL script we provide. Just run the script to create a Snowflake Integration object that represents a connection to ALTR’s external tokenization/detokenization implementation in the cloud. This script also creates two external functions that use this service: one to tokenize data and another to detokenize.

Now we can look at tokenization in action.

As mentioned previously, a best practice is to have sensitive values tokenized at rest in the database, preferably before they land in Snowflake. ALTR supports this through a library of open-source integrations to data movement tools like Matillion, BigID and others.

If we run this query as an account admin, we can see that we have two columns tokenized: the NAME and SSN columns. The tokens that you see here are the values on the disk within Snowflake in the cloud.

When it comes to detokenizing, we want to detokenize a value only “on the fly”, when the data is queried, and only for roles that are allowed to see the values.

With ALTR tokenization we do this for you automatically.

If we run this next query with the DATA_SCIENTIST role then we will see the values are de-tokenized and we see the original sensitive values instead of tokens. This is because in the ALTR portal, we have allowed the Data Scientist role to see these values.
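The query-time behavior described here can be sketched as a simple role check in front of the vault lookup. This is only an illustration of the idea, not how ALTR implements it; the vault contents, token strings, and the second role name are hypothetical.

```python
# Hypothetical stand-ins: a tiny token vault and the roles allowed to see clear values.
VAULT = {"tok_7f3a": "Ana Ruiz", "tok_c21e": "123-45-6789"}
ROLES_ALLOWED_CLEAR = {"DATA_SCIENTIST"}


def read_column(role, stored_values):
    """Return query results for a role: detokenized if allowed, tokens otherwise."""
    if role in ROLES_ALLOWED_CLEAR:
        # Detokenize on the fly; the values stored at rest stay tokenized.
        return [VAULT.get(value, value) for value in stored_values]
    return list(stored_values)


stored = ["tok_7f3a", "tok_c21e"]
print(read_column("DATA_SCIENTIST", stored))
print(read_column("INTERN", stored))
```

Because the check runs at query time, changing who may see clear values is a policy change only; the stored data never has to be rewritten.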

If you use ALTR for tokenization you do not need to write any code or invest in developing a solution.

We can ensure your data is tokenized before it lands in Snowflake with our open source integrations for your ETL/ELT pipelines, and we can automate detokenization for only the users you authorize through the ALTR portal.

Here are a couple of use case examples where ALTR’s automated data tokenization capability can benefit your business as it scales with Snowflake usage.

A pharmaceutical company wants your new research company to conduct clinical trials on their behalf. The personally identifiable data and research information from clinical study participants need to be secure to stay in compliance with HIPAA laws and regulations. ALTR’s Data Tokenization would be the ideal method to incorporate as part of your compliant data governance strategy.

Your newly launched store accepts credit cards as one of your methods of payment from shoppers. To remain compliant with Payment Card Industry (PCI) standards, you need to ensure that your customer’s credit card information is handled securely. Data Tokenization would help you save administrative overhead costs and satisfy the PCI standards while storing the data in Snowflake.

As your business collects and stores more sensitive data in Snowflake, it is critical that Snowflake data tokenization is included as part of your data governance strategy. ALTR helps you with this process by providing our Vaulted Tokenization, BYOK for Vaulted Tokenization, and other capabilities to leverage.

Since Snowflake announced the general availability of Snowpark in November 2022, we've heard more and more ALTR customers express interest in utilizing it as part of their Snowflake platform. It provides developers with the capabilities to eliminate complexity and drive increased productivity by building applications and models, or even data pipelines, within one single data platform. We've done some validation and are happy to demonstrate that ALTR's policy enforcement carries over from Snowflake to Snowpark without a hitch.

Snowpark is essentially a separate execution environment within Snowflake where you can write data-intensive applications. You can use third-party dependencies, and you can process data with very complete programmatic capabilities. This execution environment runs next to the Snowflake SnowSQL interface. ALTR applies, automates, and enforces Snowflake's native data governance features (without requiring SQL) in the Snowflake environment so that when the data flows into Snowpark, the Snowpark environment gets the benefit of all those same policies and controls.

All the powerful ALTR data governance and security capabilities, including access monitoring, query logs, role-based access controls, dynamic data masking, rate limits, real-time access alerts, and even tokenization, are carried over to the users and data in Snowpark.

Data scientists are the most common users. If you wanted to use historical data to make a prediction like, for example, using the last ten years of rainfall info to predict the next ten years, you might use something like a statistical or machine learning module. Rather than writing your own, you can pull an existing, already-written module into Snowpark as a dependency and just plumb Snowpark through to build your analysis model. This means the data scientist running these models and analyses in Snowpark can only leverage the data they have permission to access in Snowflake. This kind of protection over sensitive data so approved users can utilize it is critical to financial services organizations. They need to access very sensitive financial data to build models to identify and prevent fraud. Have you ever been contacted by your bank when on vacation in another country to confirm it's really you making the transaction? That's probably a data model identifying anomalous activity.

It allows the business to place controls over sensitive data that can be used for data modeling activities. So, the data scientists no longer have to take a chunk of data into their own data silo, crunch on the data, and spit out an answer. Through ALTR's single pane of glass, data admins will have total control over all data access in both Snowflake and Snowpark. This also allows data scientists to get the benefits of Snowpark's powerful data processing capabilities with all the Snowflake data they have permission to use – for a streamlined and secure experience.

Our compatibility with Snowpark is another example of ALTR's goal of providing governance and security over data wherever it is, but it's just the beginning of our Snowpark journey. Keep your eyes out for more details coming at Snowflake Summit 2023 in June.

Anywhere you want to or need to work with data, ALTR will be there.

In today’s digital age, data governance is essential for organizations looking to maintain the security of their sensitive data while maximizing data productivity. With large and dynamic data environments, it can be challenging to implement an effective data governance strategy that protects data from threats and promotes business growth. That’s where Matillion comes in, with our integration with ALTR, the only automated data access control and security solution for governing and protecting sensitive data in the cloud.

In this blog, we’ll explore the benefits of this integration and how it enables organizations to manage and safeguard their data more effectively. We’ll dive into how it can help organizations automate data access control, increase data visibility, and build deep trust through compliant and reliable data for confident data-driven decision-making. With Matillion’s integration with ALTR, organizations can enhance their data governance initiatives without ever leaving the Matillion interface.

Matillion’s integration with ALTR empowers organizations to manage and secure sensitive data assets and set centralized data security policies so they can be confident their data is protected at all times. It offers several key features to ensure comprehensive data governance, including automated, granular data access controls, real-time data monitoring and analytics, comprehensive audit trails, and secure tokenization.

Matillion’s integration with ALTR allows for the implementation of classification-based policies to control access to sensitive data and is user-friendly for multi-skilled teams with no coding required. With interactive Data Usage Heatmaps and Analytics Dashboards, organizations can track and monitor data usage and access by user. Additionally, the integration offers flexible data masking options for private information and provides auditable query logs to ensure privacy controls are working correctly.

Secure Sensitive Data with Matillion’s ALTR Integration

By integrating with ALTR, Matillion offers data-driven organizations a competitive advantage.

Turn policies into practice with automated data governance to manage risk and safeguard your bottom line. Easily control and secure sensitive data by using a central area to set policy, so organizations can proactively mitigate risk before it negatively affects them. ALTR’s shared job allows organizations to utilize ALTR’s data security and policy capabilities within Matillion, so data engineers can mask and tokenize data assets at the beginning of the data journey — even from the most privileged admin users — to protect highly sensitive data.

Improve observability to monitor and secure the data landscape. With Matillion’s integration with ALTR, organizations can quickly get visibility into their data usage. This helps break down silos and make previously hidden data visible so organizations can quickly spot abnormalities and reduce vulnerabilities. ALTR provides a detailed audit trail, including who accessed the data, when they accessed it, and what they did with it. This information is essential for regulatory compliance and helps organizations detect and investigate any suspicious activity, so they can visualize and understand their entire data landscape to secure it at scale.

Matillion’s integration with ALTR makes it simple to enforce data compliance around the clock by providing granular access controls, detailed audit trails, and data protection measures. Abiding by data regulations and laws helps safeguard data and establish standards for its access, use, and integrity while ensuring the entire organization is compliant when working within its own teams and cross-functionally. Matillion’s integration with ALTR provides real-time insights into data access and usage, enabling organizations to make informed and confident data-driven decisions based on accurate, up-to-date information.

Matillion’s integration with ALTR is designed to automate data access control and provide scalable security to help organizations increase their data productivity, security, and ultimately, revenue. This integration is a powerful tool for data-driven organizations looking to supercharge their governance policy, increase transparency, and make confident decisions.

Maybe you're just getting started with Snowflake, maybe you're well into your Snowflake project but are running into the "sensitive data roadblock," or maybe (and we won't tell your security team) you already have all your data (including that sensitive customer PII) in Snowflake, ready to be used and optimized.

Regardless of your data project maturity, Snowflake data governance and security must be on your mind. And perhaps you're at different stages with this as well. You may be leveraging Snowflake's native data governance features to tackle some tasks with SQL but leaving others on the back burner. Or you find it difficult to keep up with all the new data coming in and the users requesting access.

Wherever you are in your journey, it's never too late to think about how you're managing Snowflake data governance and how you and your team can leverage data governance best practices to keep your data private and secure as efficiently as possible. We developed this Snowflake Data Governance Best Practices Guide to help you review your checklist and ensure your bases are covered.

An essential Snowflake best practice in your data governance program is to examine the data and databases coming into your cloud data warehouse to identify sensitive or regulated data. It may seem self-evident that a column labeled "Social Security Numbers" contains, well, social security numbers, but you might be surprised! Data can be accidentally commingled, column headers can follow a completely unintelligible formula, or you might find email addresses in a column called "Username." If you have just a handful of columns or rows, digging through your data could be an hour's work in a morning. But if you have hundreds or thousands of columns, with new databases being continually added, this data classification task can become not just a time suck but practically impossible. That doesn't make it any less important, unfortunately. You can't govern or secure sensitive data if you don't know where it is.
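To make the idea of content-based classification concrete, here is a minimal sketch that looks at sampled column values rather than column names. It is not how Snowflake or ALTR classify data; the patterns, the `classify_column` function, and the 80% threshold are all assumptions chosen for illustration.

```python
import re

# Hypothetical, deliberately simple patterns; real classifiers cover far more types.
PATTERNS = {
    "ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
}


def classify_column(values, threshold=0.8):
    """Return the label matching at least `threshold` of the non-empty values, else None."""
    non_empty = [v.strip() for v in values if v and v.strip()]
    if not non_empty:
        return None
    best_label, best_share = None, 0.0
    for label, pattern in PATTERNS.items():
        share = sum(1 for v in non_empty if pattern.match(v)) / len(non_empty)
        if share > best_share:
            best_label, best_share = label, share
    return best_label if best_share >= threshold else None


# A column named "Username" that actually holds email addresses.
username_sample = ["ana@example.com", "li@example.org", "sam@example.net"]
print(classify_column(username_sample))
```

Because the check reads the values, a misleading header such as "Username" does not hide the email addresses stored underneath it.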

See a comparison of how you might do this yourself using Snowflake's native capabilities versus ALTR's automated solution.

Once you've identified (and hopefully tagged) columns holding your sensitive data, the next Snowflake best practice is to ensure that you have a way to see who is accessing that data, when and how much. Some companies have pushed so hard to become "data-driven" they might have opened up the data floodgates to the rest of the company clamoring for insights into their business units. While you can check data access manually with query logs in Snowflake, it can be an arduous task to turn that unstructured data into valuable insights. Having this visibility at your fingertips can make complying with data privacy regulations and audits much, much more manageable. And it can be incredibly insightful in allowing you to get a baseline sense of what normal data use looks like in your company. For example, are your marketing users accessing customer emails once a week for relevant outreach? Once you have that insight, setting appropriate policies and identifying anomalies becomes much easier.

This is the next critical Snowflake data governance best practice: deciding what roles should have access to what data and then enforcing that policy. Some groups need unfiltered access to the most sensitive data - think HR accessing payroll data. Other groups only need access to data that is relevant and critical to doing their jobs - the marketing team might need to cross reference purchase info with date of birth and email address to send a targeted offer. But the HR team doesn't really need access to customer PII. A helpful concept to follow is the "principle of least privilege" (PoLP). This is a risk-reduction strategy of giving a user or entity access only to the specific data, resources, and applications needed to complete a required task. Snowflake data governance, then, is all about setting these access controls by Snowflake database columns or rows.
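The principle of least privilege can be pictured as a deny-by-default lookup from role to permitted columns. The sketch below is plain Python, not Snowflake SQL or an ALTR API; the role names, column names, and the `allowed_columns` helper are hypothetical.

```python
# Hypothetical policy: each role lists only the columns it needs for its job.
POLICY = {
    "hr": {"payroll.employee_id", "payroll.salary"},
    "marketing": {"orders.purchase_total", "customers.date_of_birth", "customers.email"},
}


def allowed_columns(role, requested):
    """Return the requested columns the role may read; unknown roles get nothing."""
    granted = POLICY.get(role, frozenset())
    return [column for column in requested if column in granted]


# HR can read payroll data but is not granted customer PII.
print(allowed_columns("hr", ["payroll.salary", "customers.email"]))
```

Anything not explicitly granted is dropped, so adding a new column or role never widens access by accident.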

As more and more data is added to Snowflake and more and more users request access, the tasks of setting access controls for users can become both time-consuming and risky. The process becomes more onerous as additional Snowflake databases or even additional Snowflake accounts are added. Surely the roles, policies, and access controls need to be consistent across your whole Snowflake ecosystem.

See a comparison of how you might implement row-level security using Snowflake's native capabilities versus ALTR's automated solution.

A further refinement of the data access control best practice is data masking. This is the process of not completely excluding the data but partially obfuscating it, so its format stays recognizable while the actual value is hidden. For example, an email address like contact@altr.com could be masked as c****t@a**r.com. Or a social security number could be shown as ***-**-1234. This allows users to run analyses on data in multiple databases by cross-referencing sensitive data like email addresses without knowing exactly what the email address is. Data masking is fundamental to Snowflake data governance programs.
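As a rough illustration of partial masking, the sketch below keeps the first and last characters of each email part and the last four digits of a social security number. It is plain Python with hypothetical helper names, not a Snowflake masking policy or ALTR's implementation. Unlike the example above, this version preserves the original length, so the local part "contact" comes out with five asterisks.

```python
def mask_middle(text, mask_char="*"):
    """Keep the first and last characters and mask everything in between."""
    if len(text) <= 2:
        return mask_char * len(text)
    return text[0] + mask_char * (len(text) - 2) + text[-1]


def mask_email(email):
    """Mask the local part and the domain name, leaving the top-level domain readable."""
    local, _, domain = email.partition("@")
    name, dot, tld = domain.rpartition(".")
    if not dot:  # domain without a dot: mask it as a whole
        return f"{mask_middle(local)}@{mask_middle(domain)}"
    return f"{mask_middle(local)}@{mask_middle(name)}.{tld}"


def mask_ssn(ssn):
    """Show only the last four digits of a nine-digit social security number."""
    digits = [c for c in ssn if c.isdigit()]
    if len(digits) != 9:
        raise ValueError("expected nine digits")
    return "***-**-" + "".join(digits[-4:])


print(mask_email("contact@altr.com"))  # c*****t@a**r.com
print(mask_ssn("123-45-1234"))         # ***-**-1234
```

The same input always produces the same masked output, but different inputs can collide, so cross-referencing on masked values is approximate in a sketch like this.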

See a comparison between writing data masking controls using SnowSQL in Snowflake versus automatically with ALTR.

And see how a multinational retailer used ALTR's custom masking policy to ensure the highest level of security for its customer PII data.

The next and one of the most important Snowflake best practices is to limit the amount of data even an approved user can access. Even when data should be accessible to a specific group of users, it's improbable that they would need all the data at once. Can you imagine a marketing person downloading all the personal information - first name, last name, email, DOB, etc. - for every single customer? That sounds like a threat to me. In order to ensure that no users get carried away, intentionally or unintentionally, you should set up limits for the amount of data each role can access over a specific time period. This lowers the risk to your data by stopping credentialed access threats before they do unrecoverable damage and ensuring even the most privileged users don't access data they don't need.
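A per-role limit over a time window could be sketched as a sliding-window counter of rows returned. This is a minimal illustration in plain Python, not Snowflake's or ALTR's mechanism; the `RowRateLimiter` class, the role name, and the limits are hypothetical.

```python
import time
from collections import defaultdict, deque


class RowRateLimiter:
    """Caps how many rows each user may read per sliding time window, by role."""

    def __init__(self, limits, window_seconds):
        self.limits = limits              # role -> maximum rows per window
        self.window = window_seconds
        self.history = defaultdict(deque)  # user -> deque of (timestamp, rows)

    def allow(self, user, role, rows, now=None):
        now = time.monotonic() if now is None else now
        events = self.history[user]
        # Drop requests that have aged out of the window.
        while events and now - events[0][0] >= self.window:
            events.popleft()
        used = sum(count for _, count in events)
        # Roles without a configured limit are denied by default.
        if used + rows > self.limits.get(role, 0):
            return False
        events.append((now, rows))
        return True


limiter = RowRateLimiter({"marketing": 1000}, window_seconds=3600)
print(limiter.allow("ana", "marketing", 600, now=0))   # True
print(limiter.allow("ana", "marketing", 500, now=10))  # False: would exceed 1000 rows
```

In practice a denied request would likely also raise an alert, so that an unusually large pull is investigated rather than silently blocked.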

See how you could set data access rate limits manually in Snowflake (or not) vs. automatically in ALTR.

Read how Redwood Logistics combined data rate limiting with alerting to ensure that privileged Snowflake admins couldn't access sensitive payroll data.

One of the primary purposes for migrating data to Snowflake is to enable analytics through business intelligence tools like Tableau and Looker. Once you have your data governed and secured in Snowflake, you'll want to make it available to line-of-business users throughout your organization. But how do you make sure you know who's accessing what data and that only authorized users get the sensitive stuff? You could create a Snowflake user for every Tableau user so that there's a one-to-one relationship, and Snowflake can track the individual's query. But this causes two issues: you have to manage Tableau and Snowflake accounts for every user, which can run into the thousands at the largest companies, and you have the same data monitoring issue listed above - you're digging through query logs.

See how ALTR's Tableau user governance integration can help avoid both these issues.

There are some data governance best practices you need to implement no matter where your data resides, on-premises or in the cloud. But there are some specific quirks to Snowflake you'll need to know in order to ensure your sensitive and regulated data stays secure while your users use it. Many of these can be accomplished using SnowSQL to activate Snowflake native features, but that's often not a feasible solution when managing multiple accounts, hundreds of databases, or thousands of users. ALTR's platform can help you scale your Snowflake data governance policies across your Snowflake ecosystem.

Here at the end of Q1 2023, I’ve had a chance to look back not just on two quarters as CEO but on our decision to focus on Snowflake two years ago. And what is becoming even more crystal clear after talking to companies large and small over that time is that while they’re moving massive amounts of data to the cloud, they’re not completely moving away from existing systems. Basically, businesses have data everywhere.

We’re currently talking to a large enterprise with 17 to 20 different systems storing data: some are older legacy boxes while others are brand new micro-services. They have a lot of systems and they’re all different. What does this mean for data security? The right solution needs to support multiple and varying integration methodologies to meet data where it is and where it’s going. The end goal is for sensitive data to be classified and tokenized on ingest, travel around the business tokenized, and be de-tokenized when needed so it can be safely consumed downstream governed by logging, policy, and alerting.

And what does that mean for ALTR?

Yes, that’s a clickbait subhead. As I said, we’ve been extremely focused on Snowflake since February 2021 when we announced availability of the market's first native cloud platform delivering observability, governance, and protection of sensitive data on Snowflake. But the truth is that a number of businesses have a use for both Snowflake and Databricks and so far, they’ve really served two different purposes:

Because the use cases are primarily different as of now, the data governance and security needs are different as well.

In Snowflake, we see a focus on masking sensitive data and role-based access controls to keep it from users who don’t need the full information – like a data analyst using Snowflake or a Tableau report who can do what they need to with masked email addresses or masked phone numbers.

On Databricks, data scientists need full access to plain text production data so they can fully understand the distribution of the data. This means the focus is more about privacy protection and breach prevention. We see data scientists create multiple copies of the exact same data set with a light format variation. The model may produce slightly different results from the same data. That makes data governance and security more complicated because you’re basically playing cat and mouse with data constantly being copied, moved and shared – without oversight. So, we expect the solution will be focused on sensitive data discovery and classification, then automatic access logging and reporting – a kind of “overwatch” mode with tokenization underlying all of it.

ALTR’s feature set can be applied to both to solve their specific governance needs. Because we have that SaaS hub, we can now just pull the capabilities and controls we need down to wherever policy enforcement is needed.

So, what’s ALTR’s focus for the rest of this year? Building out the remaining connections to all the different data sources/data stores. Because even though the data governance and security use cases might be different across different data platforms and systems, companies don’t want to add, implement and manage different vendors. No one has time for that. They’re looking for one solution to the entire data security knot – one pane of glass, one single source for data access truth.

ALTR will make this happen this year. And if you’re interested in working with us on Databricks, Amazon Redshift, BigQuery or any other database or solution integrations, get in touch with us to help drive the features we implement and roll out. We can’t wait.

Want to try ALTR today? Sign up for our Free Plan. Or get a Demo to see how ALTR’s enterprise-ready solution can handle securing your data wherever it is.

Data catalogs are an essential component of your broader data strategy. Modern data catalogs “make it easier for your analysts to find, understand and trust the data” within your database. Your data catalog is essential for synthesizing information about your data and making that information available across your company, but it isn’t the actual data. Your catalog may give users the ability to search and index meta information about your data, but it won’t go all the way to providing access control and security on your data.

This past quarter, many of our partners shared their guidance on data catalogs and the value they add to your data solution. We checked in on what a few of our partners were saying and compiled the best in thought leadership for you here.

This blog post, written by GT Volpe, describes factors that will help your organization see immediate value in your data catalog. Volpe begins this piece by defining what it means for an organization to be data-driven and how data catalogs fit seamlessly in the push to be a data driven organization. Volpe shares considerations that you should think through prior to applying a data catalog, including focusing on planned outcomes and user skills, considering culture, industry, and regional needs, and embracing scalability and expansion.

Volpe talks of the importance of ensuring that your data catalog works for you and will continue to work for you as you scale, and as priorities may change. He writes of the importance of seeing immediate value from your data catalog, and that the one way to ensure success and accelerate time to value is to implement a data catalog plus data governance solution from day one – he writes that with the combination of a good data governance solution and a good data catalog, you will see immediate business value.

Aaron Bradshaw, Data Governance & Enablement Specialist - Solutions Engineer at Alation, wrote about the specific value adds of implementing a data catalog. Bradshaw explains the reasons one may consider implementing a data catalog and what each implementor may expect from their catalog. He goes on to describe the difference between offensive and defensive approaches to data strategy and how that may play a part in the decisions your organization makes surrounding investment and implementation of your data strategy.

Bradshaw concludes this blog post by outlining the strategic decision to implement a data catalog by quantifying its monetary value. Through a study done in partnership with Forrester, Alation discovered that “Adopting data catalogs has both quantitative and qualitative advantages, including a 364% return on investment (ROI).”

This blog post, written by Paul Ewasuik, Director of Cloud Partnerships at Collibra, takes readers through the key points of a data governance plus data catalog solution. This post breaks down the often-intimidating components of data governance and data catalogs and turns them into understandable, digestible action items for your organization to follow. Collibra mentions a critical component of successfully implementing a data catalog: your data catalog won’t be complete unless you partner it with a data governance solution, like ALTR. “Data governance allows your data citizens — and that’s everyone in your organization — to create value from data assets.”